Microservices have taken the application development industry by storm and have left a major impact on organizations by bringing significant benefits. Working with microservices is more of an art than a science, and application developers often find it hard to grasp the what and how of a well-designed microservice architecture.

We have mentioned microservices in many of our previous articles. This time, we are putting forward a comprehensive guide to microservices. It is meant as a helping hand for those who want to understand this container-based architecture in detail.

Philosophy behind Microservices - Monoliths and their drawbacks

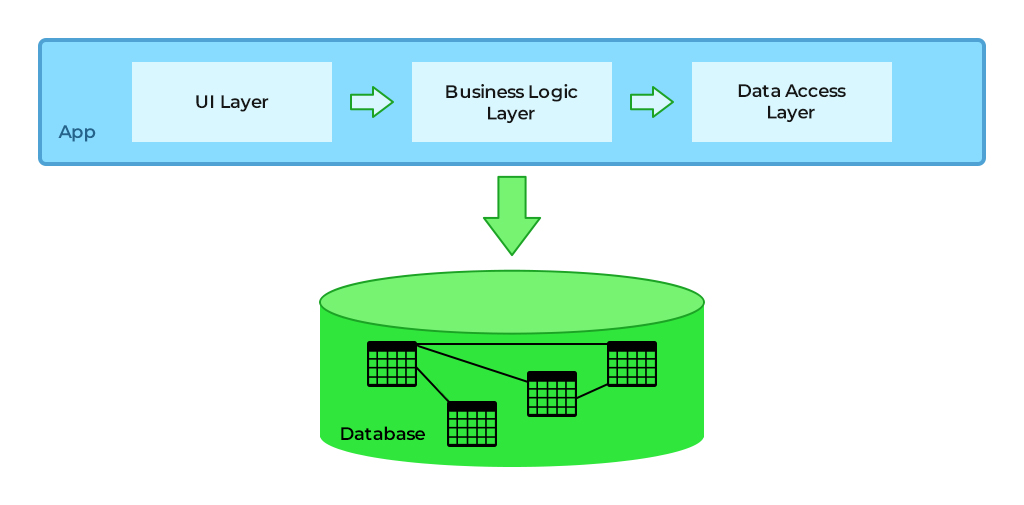

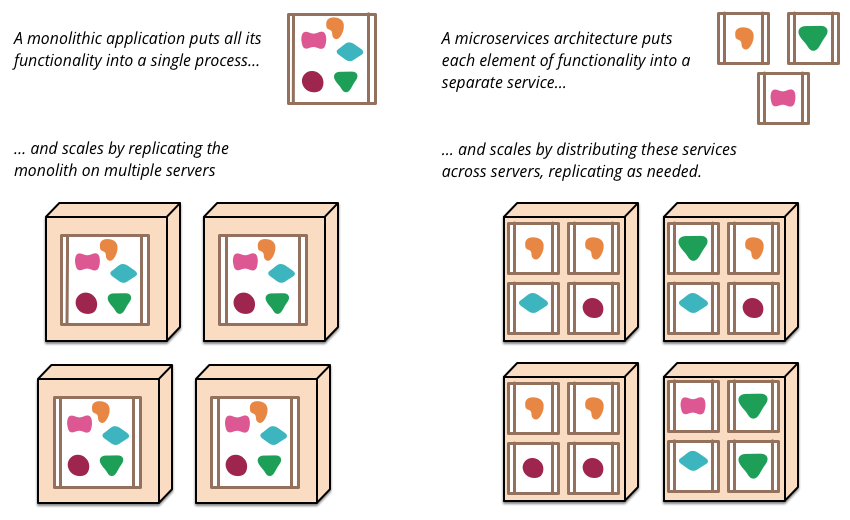

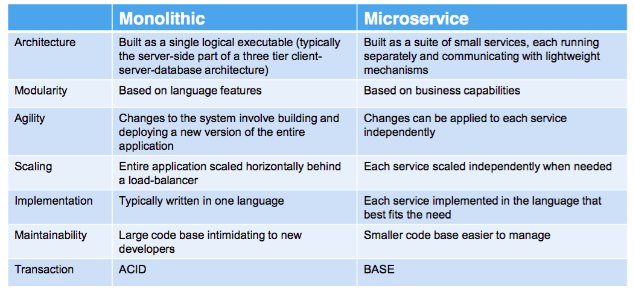

A monolithic application is a single deployable unit in which different components are combined into one working platform. Its typical components are a database, a client-side user interface layer, and a server-side application. A familiar example is a Java application packaged as an EAR, WAR, or JAR archive and deployed as a single unit on an application server.

The following figure shows the basic structure of a monolithic application.

Monolithic applications are typically built on object-oriented principles, which simplify development, debugging, testing, and deployment. This works well while the application is in its early stages and its structure is simple. As the application grows, class hierarchies and interdependencies between components increase, and the application becomes complex.

This increasing complexity makes monolithic applications an unfavorable choice for cloud environments. The following points support this.

- Fault isolation is difficult: Because a monolithic system is a single deployable unit, there is no physical separation between its functional areas (for example, one feature may depend on the functionality of another). No single release can guarantee that it will affect only the area it targets, so unintended side effects are always a possibility.

- Expansion requires more resources: Even adding a small feature or functionality requires extra resources in a monolithic application. In most cases, a monolithic application is scaled by deploying multiple instances of the entire application at once, which increases the overall use of memory and computing resources. Adding many instances makes things worse and can also cause database locking issues.

- Deployment eats a lot of time: As complexity increases, the development and QA cycles for a monolithic application take longer. Frequent changes are also discouraged, because each one requires rebuilding the application, running complete regression tests, and redeploying the entire monolith. The whole process is time-consuming and complicated to carry out.

- Using the same technology over and over: A monolithic application tends to be tied to a single technology stack, which poses many hurdles. Its layers are tightly coupled through in-process calls, and to keep the information exchange streamlined the same technology stack is used throughout. As a result, the application cannot take advantage of newer or alternative technology stacks.

To address these issues with monolithic applications, microservices emerged as a prime upgrade that improves the return on investment for companies moving to the cloud.

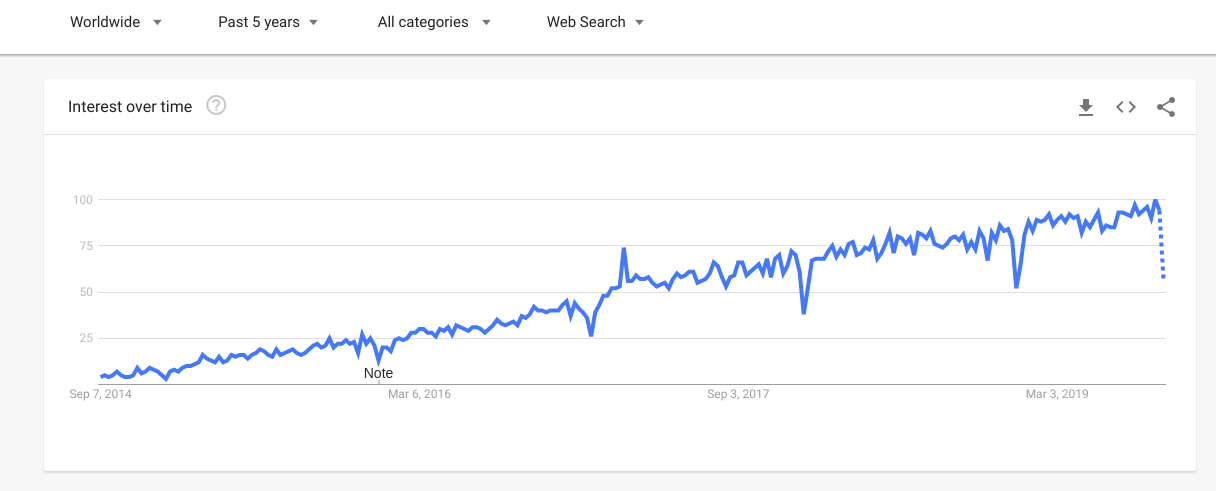

Before delving into the what, how, and why of microservices, let’s have a look at their rising popularity over the past 5 years in the accompanying Google Trends graph.

What are Microservices?

Microservices, the buzzword of modern software architecture, have taken their place above Agile, DevOps, and RESTful services. Martin Fowler describes the microservice style as an approach to developing a single application as a suite of small, independently deployable services.

Each service runs in its own process and communicates through lightweight mechanisms, often an HTTP resource API. The services are built around business capabilities and are independently deployable by fully automated deployment machinery. Additionally, there is only a bare minimum of centralized management of these services, which may be written in different programming languages and use different data storage technologies.

Dr. Peter Rodgers used the term ‘micro web services’ during a conference on cloud computing in 2005. Before moving any further, let’s have a look at the series of events that shaped the early software development patterns and resulted in the creation of microservices.

The Evolution of Microservices

To understand the gradual rise of the microservice architecture, it helps to step back in the timeline and look at how and where it all started. The series of events below was compiled by IBM, and we have grouped them by the observations that accompany them.

Observation 1: Just because something can be distributed does not mean that it should be distributed.

The early 1980s saw the advent of Remote Procedure Calls (RPC), the concept behind Sun Microsystems’ ONC RPC (Open Network Computing Remote Procedure Call) and the basic idea behind DCE (Distributed Computing Environment, 1988) and CORBA (Common Object Request Broker Architecture, 1991). The fundamental goal of these technologies was to make remote calls transparent to developers. Large machine-crossing systems could be built to avoid processing and memory scaling issues if developers did not have to care whether a call was local or remote.

Gradually, local address spaces became larger as processors improved. An important observation emerged from the large set of DCE and CORBA implementations: just because something can be distributed does not mean that it should be distributed. Martin Fowler later expressed a related idea when he described microservices as being organized around business capabilities.

Once large memory spaces became routine, poor distribution of methods hurt system performance. Earlier, small memory spaces had led to many chatty interfaces. With large memory spaces, however, the networking overhead outweighed the advantages of distribution.

Observation 2: The collapse of a local distributed call.

To address the observation on memory spaces, the first Facade pattern came into existence, based on the Session Facade approach. Its major objective was to provide a structured interface that made the exchange of information cleaner. The pattern was applied in distributed systems to create coarse-grained interfaces for entire subsystems, exposing only the parts that were meant for distribution. The idea of the Facade pattern is to define a specific external API for a system or subsystem, and that API should be business-driven.

An API (Application Programming Interface) is an interface that lets two applications talk to each other. APIs allow developers to access an application’s data or use its functionality. Web APIs typically exchange data over HTTP requests, and the response is often returned as text in JSON format. REST, SOAP, GraphQL, and gRPC are a few API design styles. OpenAPI, RAML, and AsyncAPI are specification formats used to define API interactions in a form that both humans and machines can read.
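To make the request and response exchange concrete, here is a minimal sketch that uses only Python’s standard library. The `OrderHandler` class, the `/orders/<id>` path, and the payload fields are hypothetical names chosen for illustration; a production API would normally sit behind a web framework and be described by a specification such as OpenAPI.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class OrderHandler(BaseHTTPRequestHandler):
    """Hypothetical API endpoint that answers GET requests with JSON."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "status": "shipped"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_order(order_id):
    """Start a throwaway local server, call it once over HTTP, return parsed JSON."""
    server = HTTPServer(("127.0.0.1", 0), OrderHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    # An empty ProxyHandler makes sure the local call bypasses any system proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        url = f"http://127.0.0.1:{server.server_address[1]}/orders/{order_id}"
        with opener.open(url, timeout=5) as response:
            return json.loads(response.read().decode("utf-8"))
    finally:
        server.shutdown()
        server.server_close()
```

Calling `fetch_order(42)` sends one HTTP GET request and decodes the JSON text that comes back into a Python dictionary.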

The first Session Facades were implemented with Enterprise JavaBeans (EJBs). These worked fine for teams working in Java, but complications emerged for other languages, where debugging became cumbersome. This lack of compatibility led to a new approach called Service-Oriented Architecture (SOA), which initially took the form of the Simple Object Access Protocol (SOAP). A service-oriented architecture can be described as a set of reusable, synchronously communicating services and APIs, which speed up application development and make it easier to incorporate data from other systems.

SOAP was all about invoking object methods over HTTP, and its text-based network calls made logging and debugging easier. SOAP promoted heterogeneous interoperability but struggled with concerns beyond simple method invocation, such as exception handling, transaction support, security, and digital signatures. This brought a new observation into the picture: trying to treat a distributed system as if it were a local system has always been a dead end. Martin Fowler later captured this as ‘smart endpoints and dumb pipes’ when describing the microservices architecture.

Observation 3: Self-contained runtime and environments.

Gradually, the procedural, layered concepts of SOAP and the WS-* standards were displaced by Representational State Transfer (REST). REST focused on using the HTTP verbs as they were specified, with create, read, update, and delete semantics. Along with this, it defined a way to give each entity a unique name, the Uniform Resource Identifier (URI).
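The mapping between HTTP verbs and create, read, update, and delete operations can be sketched with a small in-memory dispatcher. This is an illustration under stated assumptions rather than a real web framework: `ResourceStore`, its `handle` method, and the `/customers/7` URI are hypothetical, and `PUT` is used for both create and update because the client names the URI itself (many APIs use `POST` on a collection to create instead).

```python
class ResourceStore:
    """In-memory stand-in for a REST resource collection (hypothetical)."""

    def __init__(self):
        self._items = {}

    def handle(self, method, uri, payload=None):
        """Dispatch an HTTP verb on a URI and return (status_code, body)."""
        if method == "PUT":  # create or replace the entity named by the URI
            created = uri not in self._items
            self._items[uri] = payload
            return (201 if created else 200), payload
        if method == "GET":  # read the entity
            if uri in self._items:
                return 200, self._items[uri]
            return 404, None
        if method == "DELETE":  # delete the entity
            if uri in self._items:
                del self._items[uri]
                return 204, None
            return 404, None
        return 405, None  # verb not supported by this sketch
```

Each URI names exactly one entity, and the verb alone decides what happens to it, which is the core of the REST style described above.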

The same period saw a rejection of another legacy of the Java platform, Java Enterprise Edition (JEE), along with SOA. When it was introduced, JEE led numerous corporations to embrace the idea of using an application server as a host for a number of different applications. A single operations group controlled, monitored, and maintained a group of identical application servers, usually from Oracle or IBM, and deployed different departmental applications onto that group, reducing the overall operations cost.

Application developers struggled to work with large development and test environments, because these environments were difficult to create and required operations teams to run them. Inconsistencies appeared between environments in application server versions, patch levels, application data, and software installations. Developers therefore favored open-source application servers such as Tomcat or Glassfish, which were smaller and more lightweight application platforms.

At the same time, the complexity of JEE worked in favor of the Spring platform, as techniques like Inversion of Control and Dependency Injection became common. Development teams found that having their own independent runtime environments was an advantage, which led to decentralized governance and data management. This series of events laid a strong foundation for the adoption of microservices by enterprises.

How the Monolithic and Microservices Architectures are Different

Microservices Features

#1 Componentization Improves Scalability

With microservices, applications are built by breaking them into separate service components. Each service can then be altered, developed, and deployed on its own. The low level of dependency between microservices is also a boon for developers, because modifications, redeployments, and scaling can be carried out on specific parts of the application rather than on the entire codebase. Thus, the performance and availability of business-critical services can be improved by deploying them on multiple servers without affecting the performance of other services.

#2 Logical Functional Boundaries and Enhanced Resilience

As mentioned earlier, in microservices the entire application is decentralized and decoupled into services. This sets boundaries between services and provides a level of modularity, so developers know when and where a change has happened. Also, unlike in a monolithic architecture, a tweak in the functionality of one service will not affect the other parts of the application, and even if some parts of the system break down, the failure may go largely unnoticed by users.

#3 Fail-Safe with Easier Debugging and Testing

Applications designed using microservices are built to deal with failures. If a single service fails among various interacting services, the failed service gracefully gets out of the way.

Furthermore, failures can be detected by continuously monitoring the microservices. With continuous delivery and testing in place, it becomes easier to ship applications with fewer errors.
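One common way for a failed service to get out of the way is a fallback: the caller catches the failure, records it for monitoring, and continues with a default value. The sketch below assumes hypothetical names (`call_with_fallback`, `recommendations_down`, `render_product_page`) and uses a plain list as a stand-in for a monitoring system.

```python
def call_with_fallback(service_call, fallback, failures):
    """Call a service; on failure, record the error and return a fallback."""
    try:
        return service_call()
    except Exception as error:  # a real system would catch narrower error types
        failures.append(repr(error))  # stand-in for emitting a monitoring event
        return fallback


def recommendations_down():
    """Hypothetical recommendation service that is currently unavailable."""
    raise ConnectionError("recommendation service unreachable")


def render_product_page(failures):
    """The page still renders, only without recommendations."""
    recommendations = call_with_fallback(recommendations_down, [], failures)
    return {"product": "book", "recommendations": recommendations}
```

The page is still served when the recommendation service is down, and the recorded failure gives monitoring something to alert on.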

#4 Improved ROI and Reduced TCO with Resource Optimization

In microservices, multiple teams work on independent services, which facilitates quick deployment of the application. A continuous delivery model is followed, enabling development, operations, and testing teams to work simultaneously on a single service. Development time is reduced because much of the code can be reused, and testing and debugging the application become easier and faster.

Furthermore, expensive machines are not needed to run these decoupled services; basic x86 machines do the work. This resource optimization, together with continuous delivery and deployment, makes microservices more efficient, reduces infrastructure costs and downtime, and eventually gets the application to market faster.

#5 Flexible Tool Selection

Microservices do not depend on a single vendor. Instead, there is flexibility to choose the tool that fits the task. Each service is free to use its own language, framework, or ancillary services while still communicating with the other services in the application.

How Communication is Carried Out in Microservices

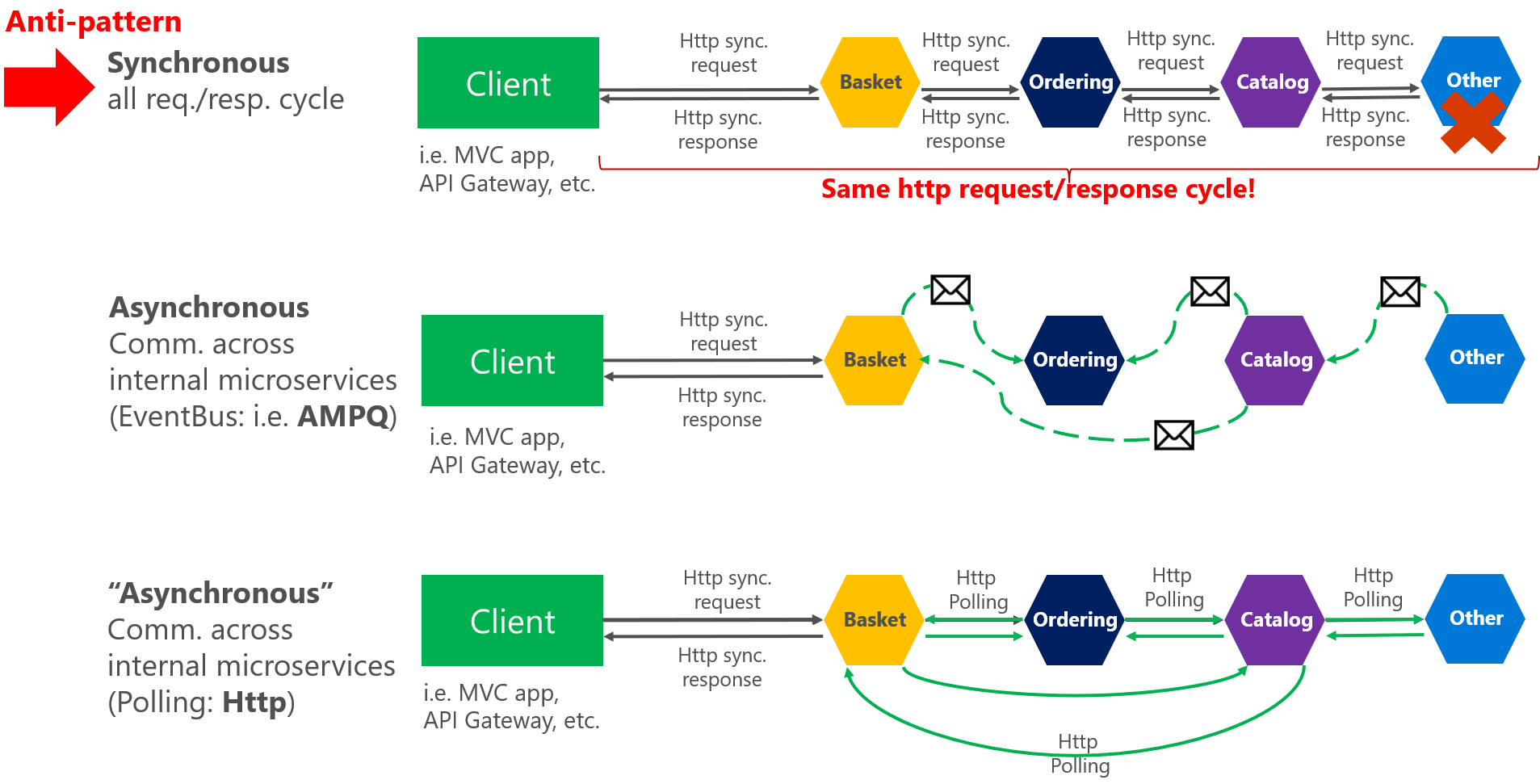

The basic idea of inter-service communication is that two microservices communicate with each other either over HTTP or through asynchronous messaging patterns. The two types of inter-service communication in microservices are described below:

- Synchronous Communication- Two services communicate with each other through a REST endpoint over HTTP or HTTPS. In synchronous communication, the calling service waits until the called service responds.

- Asynchronous Communication- The communication is carried out through asynchronous messaging. The calling service does not have to wait for a response from the called service. A response is returned to the user first, and the remaining requests are processed afterwards. Tools such as Apache Kafka and Apache ActiveMQ are commonly used for asynchronous communication in microservices.
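The difference between the two styles can be sketched in a single process. Here `queue.Queue` from the standard library is only a stand-in for a message broker such as Kafka or ActiveMQ, and the function names (`place_order_sync`, `place_order_async`, `billing_worker`) are hypothetical.

```python
import queue
import threading


def place_order_sync(order, billing_service):
    """Synchronous style: the caller blocks until the called service replies."""
    return billing_service(order)


def place_order_async(order, broker):
    """Asynchronous style: publish a message and return immediately."""
    broker.put(order)
    return {"order": order, "status": "accepted"}


def billing_worker(broker, processed):
    """Consumer that drains the queue independently of the caller."""
    while True:
        order = broker.get()
        if order is None:  # sentinel value: stop the worker
            break
        processed.append(f"billed:{order}")


def demo_async():
    """Run one asynchronous exchange and return (immediate reply, work done)."""
    broker = queue.Queue()
    processed = []
    worker = threading.Thread(target=billing_worker, args=(broker, processed))
    worker.start()
    reply = place_order_async("A-1", broker)  # returns before billing happens
    broker.put(None)
    worker.join()
    return reply, processed
```

In the asynchronous path the caller gets an "accepted" reply straight away, while the billing work completes later on the consumer side.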

Considerations for Microservices Architecture Building

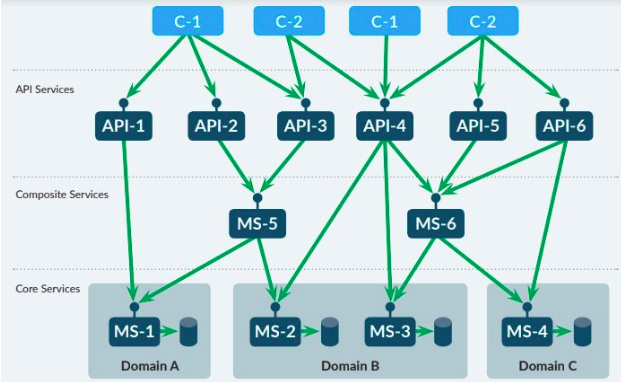

First of all, a blueprint or structure is needed to build a microservices architecture successfully. For instance, a structure based on domains can be divided into the following elements or verticals:

- Central Services: These apply the business rules and other logic. A central service also handles the consistency of business data.

- Composite Services: These orchestrate the central services, either to perform a common task or to aggregate information from several central services.

- API Services: These allow third parties to develop creative applications that use the primary functionality in the system landscape.
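The three verticals above can be sketched as plain functions. Everything here is hypothetical (the function names, the customer and order data); in a real system each function would be a separately deployed service reached over the network.

```python
def customer_service(customer_id):
    """Central service (hypothetical): owns customer data and its rules."""
    return {"id": customer_id, "name": "Ada"}


def order_service(customer_id):
    """Central service (hypothetical): owns order data."""
    return [{"order_id": 1, "total": 30}, {"order_id": 2, "total": 12}]


def customer_summary(customer_id):
    """Composite service: aggregates data from several central services."""
    customer = customer_service(customer_id)
    orders = order_service(customer_id)
    return {
        "name": customer["name"],
        "order_count": len(orders),
        "total_spent": sum(order["total"] for order in orders),
    }


def api_get_customer_summary(customer_id):
    """API service: the externally exposed entry point for third parties."""
    return {"status": 200, "data": customer_summary(customer_id)}
```

Third parties see only the API service, while the composite service hides how many central services are involved behind it.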

Along with the structure, a target architecture needs to be defined before scaling the microservices. Otherwise, the IT landscape may be disrupted and end up underperforming.

Things to Consider while Switching to Microservices

The transition from monoliths to microservices does not happen overnight. Adrian Cockcroft, a microservices evangelist known for introducing microservices at Netflix, has listed the following best practices for designing and implementing a microservices architecture inside an organization.

- Focus on Enhancing Business Competency: Teams working on microservices should understand the diverse requirements of specific business capabilities, for example, order approval, or shipping to manage the delivery of a product. Additionally, services should be developed as independent products with good documentation, each one responsible for a single business capability.

- Similar Maturity Level for All Code: All the code written in a microservice should have the same level of maturity and stability. If code needs to be added to or rewritten in a microservice, the most favorable approach is to leave the existing microservice in place and create a new microservice for the new or altered code. This allows the new code to be released and tested iteratively until it is error-free and efficient.

- No Single Data Store: The data store should be selected according to the requirements of each microservice team. A single database shared by all microservices brings undue risks; for example, an update to the database is reflected in every service that accesses it, whether or not the change is relevant to that service.

- Container Deployment: Deploying in containers means that a single tool can be used to deliver every microservice. Docker is one of the most widely used container platforms these days.

- Stateless Servers: Because the servers hold no state, they can be replaced as required or whenever they malfunction.

- Monitor Everything: A microservices system is made up of a large number of moving parts, so it is imperative to monitor everything properly, including response time notifications, service error notifications, and dashboards. Tools such as Splunk and AppDynamics help with measuring microservices.
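As a rough illustration of the monitoring practice in the list above, the sketch below counts calls, errors, and elapsed time for a service operation. The `metrics` dictionary, the `monitored` decorator, and `get_price` are hypothetical; a real deployment would send these numbers to a monitoring tool instead of keeping them in memory.

```python
import time
from functools import wraps

# In-memory stand-in for a metrics backend.
metrics = {"calls": 0, "errors": 0, "total_seconds": 0.0}


def monitored(func):
    """Record call count, error count, and elapsed time for each call."""

    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        metrics["calls"] += 1
        try:
            return func(*args, **kwargs)
        except Exception:
            metrics["errors"] += 1
            raise  # the caller still sees the original error
        finally:
            metrics["total_seconds"] += time.perf_counter() - start

    return wrapper


@monitored
def get_price(item):
    """Hypothetical service operation; raises KeyError for unknown items."""
    prices = {"book": 12}
    return prices[item]
```

Dividing `total_seconds` by `calls` gives an average response time, and the ratio of `errors` to `calls` gives an error rate that a dashboard or alert could track.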

There is no doubt that microservices are a hot trend in current application development, but they do bring a few drawbacks with them.

The Microservices Drawbacks

Below are listed some potential drawbacks associated with microservices.

- Increasing Complications: Developing distributed systems is complex, and the complexity grows with the latency of remote calls. Because the services are independent, each request must be handled carefully, and communication between modules needs extra care. Furthermore, developers have to write extra code to avoid disruption. Deploying microservices can also be cumbersome, as it requires coordination among the different services.

- Cumbersome Data Management: With multiple databases in play, handling transactions and managing the data stores becomes difficult.

- Inconvenient Testing: The dependencies between services need to be confirmed before microservices can be tested, which is why robust monitoring and testing are vital.

Tools to Manage Microservices

Finding the right tools is imperative before building a microservices application. A wide variety of open-source and paid tools support building microservices, and open-source tools in particular are widely regarded as a good fit for microservices development. A few of these tools are listed below:

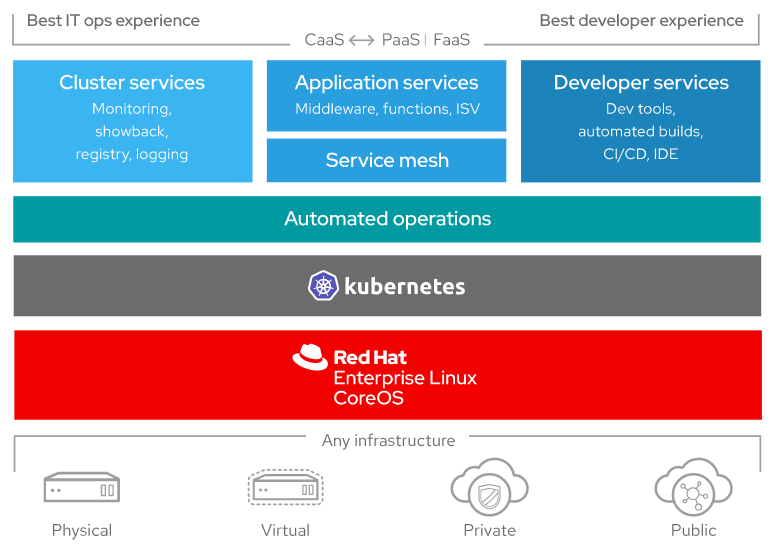

- Red Hat OpenShift – Red Hat OpenShift is a multifaceted container application platform from Red Hat Inc. It builds on Kubernetes with Docker containers and is widely used for developing, deploying, and managing applications in hybrid, cloud, and enterprise environments. The diagram below shows the components of the OpenShift platform.

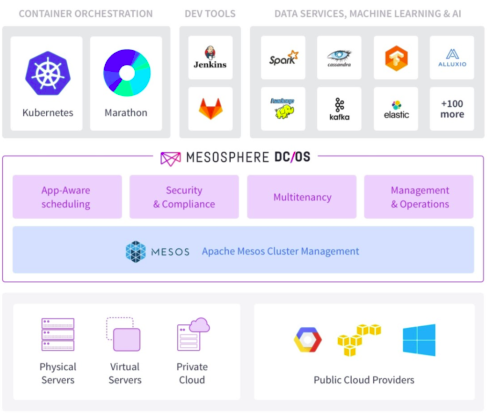

- Mesosphere – Mesosphere offers a distributed operating system for managing clusters. The container platform is built around the open-source kernel Apache Mesos and Mesosphere's DC/OS (Data Center Operating System). The robust and flexible containerization platform handles intensive tasks within an enterprise. The diagram below shows the components of Mesosphere.

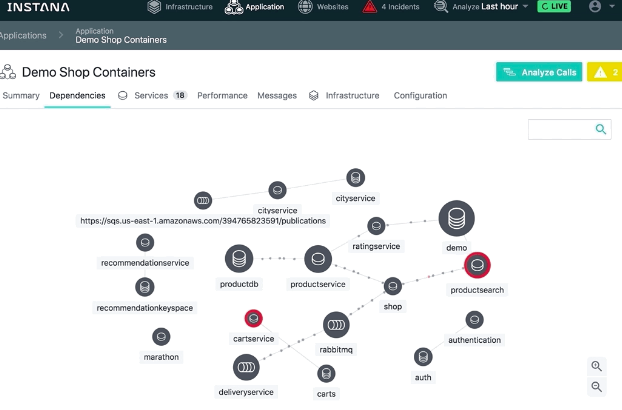

- Instana – Instana is a dynamic application performance management system that automatically monitors constantly changing modern applications. It is designed particularly for the cloud-native stack and performs infrastructure and application performance monitoring with zero configuration effort, thus accelerating the CI/CD (continuous integration/continuous deployment) cycle. The diagram below shows an Instana dependency map for demo shop containers.

Check out more open-source tools for managing microservices applications.

The Future: Microservices are Entering Mainstream

Netflix, eBay, Twitter, PayPal, and Amazon are a few of the big names that have built a strong market presence by shifting from a monolithic architecture to microservices.

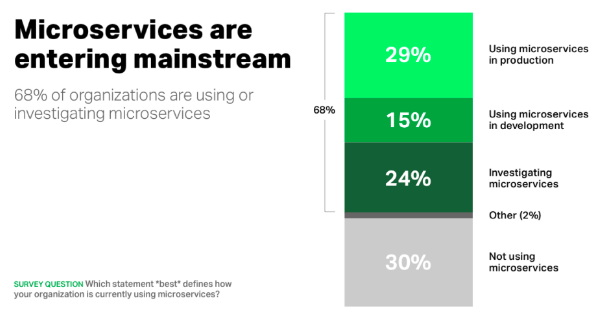

A 2015 survey by Nginx asked, ‘Which statement ‘best’ defines how your organization is currently using microservices?’ It found that nearly 70% of organizations were either using or researching microservices, with nearly one third using them in production.

Service meshes, event-driven architectures, container-native security, GraphQL, and chaos engineering were the microservices trends of 2018. The rapid rise of microservices has brought a few new trends into view. The 2019 predictions for microservices are listed below:

#1 Test Automation

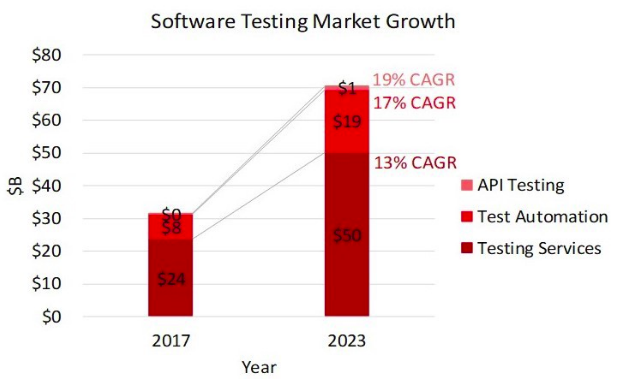

Testing checks the health of an application. Businesses want testing solutions that design, run, and report results automatically. Testing should also be smooth, connecting to CI systems, checking new code in real time, and adding comments much as a human engineer would. Artificial intelligence is expected to benefit software testing by improving productivity, cost, coverage, and accuracy. The graph below shows the software testing market growth from 2017 to 2023.

#2 Continuous Deployment and Enhanced Productivity with Verification

With Continuous Deployment, code is automatically deployed to the production environment after it successfully passes the testing phase. A set of design practices is used in Continuous Deployment to push the code into production.

Furthermore, with Continuous Verification (CV), event data is collected from logs and APM tools in order to identify the features that led to successful and failed deployments.

#3 Addressing Failures with Incident responses

Complex distributed architectures are often fragile. Site reliability engineers (SREs) are responsible for the availability, latency, performance, efficiency, change management, monitoring, emergency response, and capacity planning of the services. Incident response is a crucial SRE task. When a service fails, a team with distinct roles addresses and manages the aftermath of the failure.

#4 Save Dollars with Cloud Service Expense Management (CSEM)

Cloud cost administration is one of the few challenges that affect the engineering and IT teams as well as the entire company. Cloud Service Expense Management monitors and manages cloud computing expenses and cloud resources, helping companies derive the best value for their businesses.

#5 Expansion of Machine Learning with Kubernetes

Kubernetes is steadily growing and is becoming part of the machine learning (ML) stack. Many companies are working to standardize on Kubernetes for ML and analytical workloads.

Conclusion

Microservices are not new. They have previously marked their presence in the form of service-oriented architectures, web services, and so on. Built as small independent services that together form an application, microservices came into existence to overcome the challenges of monoliths. A structure-based approach and the right selection of tools will streamline the building of a microservice application.

