Microservices made a loud and clear entry into the world of IT a few years ago. By splitting monolithic applications into independently managed components, they earned the significance they enjoy today.

It didn’t take long for organizations to jump on the microservices bandwagon for all their architectural needs.

However, this rapid adoption left little time to lay down a foundation of principles. In this article, we go back to the basics of microservices and demystify the elements and principles of their architecture.

Elements of Microservices

These five elements are a prerequisite for your microservice before it takes its place in the ecosystem.

Functionality and Flexibility

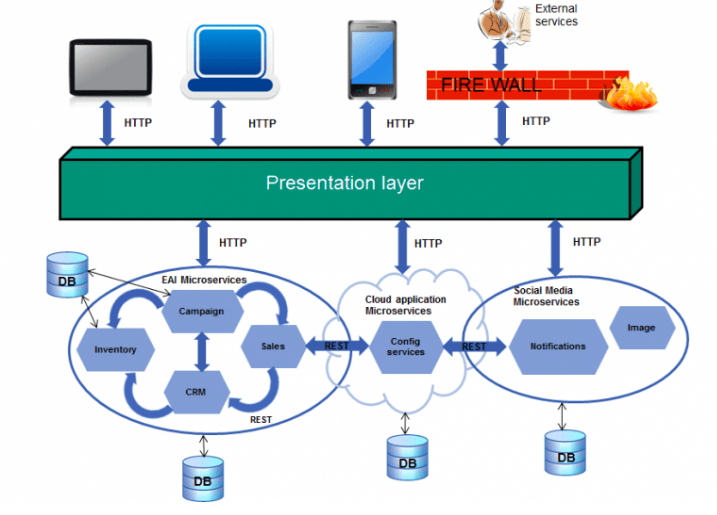

Because the primary goal of microservices is to break up monolithic structures, new processes are easier to introduce, and teams can approach their systems with agility and flexibility. Shorter life cycles lead to frequent releases and incremental improvements that ultimately deliver better products. Users can run applications at a reduced cost yet achieve higher density by leveraging the shared infrastructure of microservices. Each service focuses on a single capability and runs in its own container, managed within a separate environment. This speeds up the delivery of functionality, shortens time to market, and improves infrastructure utilization.

API Mechanisms

Distributed services are connected to one another through REST web service APIs. Each API exposes fine-grained data to its consumers, so a common interface is required that describes the functions of each service. Because the API effectively defines the SLA between the client and the service, it must be designed with care. To deliver at speed, teams need to automate the design, documentation, and development of these APIs.
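To make the idea of a service contract concrete, here is a minimal sketch that uses only Python's standard-library WSGI interface. The `product_service` app, the `/products/<id>` route, and the in-memory `_PRODUCTS` data are hypothetical; a real service would run behind a production server and publish its contract in a machine-readable form such as an OpenAPI document.

```python
import json

# Hypothetical data owned by this one service; consumers only see it via the API.
_PRODUCTS = {"42": {"id": "42", "name": "Widget", "price_cents": 1999}}


def _respond(start_response, status, payload, extra_headers=()):
    """Serialize payload as JSON and send it with the given HTTP status."""
    body = json.dumps(payload).encode("utf-8")
    headers = [("Content-Type", "application/json"),
               ("Content-Length", str(len(body)))]
    headers.extend(extra_headers)
    start_response(status, headers)
    return [body]


def product_service(environ, start_response):
    """WSGI app exposing one documented operation: GET /products/<id>."""
    parts = [p for p in environ.get("PATH_INFO", "/").split("/") if p]
    if len(parts) != 2 or parts[0] != "products":
        return _respond(start_response, "404 Not Found", {"error": "unknown route"})
    if environ.get("REQUEST_METHOD", "GET") != "GET":
        # The contract only allows reads; say so explicitly via the Allow header.
        return _respond(start_response, "405 Method Not Allowed",
                        {"error": "only GET is supported"}, [("Allow", "GET")])
    product = _PRODUCTS.get(parts[1])
    if product is None:
        return _respond(start_response, "404 Not Found", {"error": "unknown product"})
    return _respond(start_response, "200 OK", product)
```

To try it locally, pass `product_service` to `wsgiref.simple_server.make_server("127.0.0.1", 8000, product_service)` and call `serve_forever()` on the result; `GET /products/42` then returns the product as JSON.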

Data Traffic

Though microservices themselves are stateless, other operations run on a separate network and require data services. When you choose a platform to host these services, certain factors must be considered, starting with the server's response time under heavy traffic.

Supporting such conditions requires a management layer that reports status and coordinates load variations. One way to handle this is auto-scaling: a management system that tracks service loads and adds or removes service instances as needed.
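The heart of an auto-scaling decision can be sketched as a single function. This version borrows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (multiply the instance count by the ratio of observed to target load, rounding up), plus a tolerance band and min/max bounds. The parameter names and default values are illustrative assumptions, not any specific platform's API.

```python
import math


def desired_instances(current: int, avg_load: float, target_load: float,
                      min_instances: int = 1, max_instances: int = 10,
                      tolerance: float = 0.1) -> int:
    """Return how many instances should run so per-instance load nears target_load.

    avg_load and target_load are per-instance figures in the same unit,
    e.g. requests per second or CPU utilization percent.
    """
    if current < 1 or target_load <= 0:
        raise ValueError("need at least one running instance and a positive target")
    ratio = avg_load / target_load
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: keep the current count to avoid flapping.
        proposed = current
    else:
        # Proportional rule: e.g. 4 instances at 1.5x target load -> 6 instances.
        proposed = math.ceil(current * ratio)
    # Never drop below the floor or exceed the budgeted ceiling.
    return max(min_instances, min(max_instances, proposed))
```

For example, `desired_instances(4, avg_load=90, target_load=60)` returns 6. A management loop would call a function like this periodically with fresh metrics and then start or stop instances to match the result.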

Offloading the Data

Closely tied to data traffic is the underlying infrastructure. Infrastructure is unreliable in practice, and under high load it can crash or stop responding. Microservices need a steady operating environment to produce consistent results. To achieve this, user-specific data can be offloaded from the service instance into a shared storage system that is accessible from all service instances. If an instance or session fails, another instance can pick up the data, so the crash doesn’t interrupt user interactions.

You can also add a shared, memory-based cache that allows quick data access and thus improves application performance. A cache further complicates the microservices architecture, but offloading data this way is a necessity in such critical situations.
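Here is a minimal sketch of both ideas, assuming a hypothetical shopping-cart service. `SharedSessionStore` is an in-memory stand-in for an external store such as Redis (it round-trips JSON to mimic data crossing the network), and `TTLCache` is a small per-instance cache used in the cache-aside pattern. Because no instance keeps session state of its own, any instance can serve any user after another one crashes.

```python
import json
import time
from typing import Callable, Dict, Optional, Tuple


class SharedSessionStore:
    """Stand-in for an external store (e.g. Redis) reachable from every instance."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, session_id: str, state: dict) -> None:
        self._data[session_id] = json.dumps(state)  # serialize as if sent over the wire

    def get(self, session_id: str) -> Optional[dict]:
        raw = self._data.get(session_id)
        return json.loads(raw) if raw is not None else None


class TTLCache:
    """Tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], Optional[dict]]) -> Optional[dict]:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # fresh hit: skip the round trip to the shared store
        value = loader(key)  # miss or expired: read through to the shared store
        if value is not None:
            self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        self._entries.pop(key, None)


class CartServiceInstance:
    """A stateless service instance: all user data lives in the shared store."""

    def __init__(self, store: SharedSessionStore, cache_ttl: float = 5.0) -> None:
        self._store = store
        self._cache = TTLCache(cache_ttl)

    def add_item(self, session_id: str, item: str) -> None:
        state = self._store.get(session_id) or {"cart": []}
        state["cart"].append(item)
        self._store.put(session_id, state)
        self._cache.invalidate(session_id)  # drop this instance's stale copy

    def cart(self, session_id: str) -> list:
        state = self._cache.get_or_load(session_id, self._store.get)
        return list(state["cart"]) if state else []
```

This also shows the added complexity the paragraph warns about: an instance's cache can serve data up to `cache_ttl` seconds old if a different instance has written in the meantime.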

Inevitable Monitoring

With an offloaded data layer and traffic variations adding complexity, monitoring becomes inevitable. Traditional monitoring approaches, designed for a fixed set of long-lived hosts, do not fit microservices environments. Their scale and dynamic nature demand continuous monitoring that captures data in a central location and reflects changes in the application as they happen. It is also important to track data for each service instance and to capture the log information the application creates. Real-time service monitoring of this kind gives a holistic view of your microservices, so you can act proactively and deploy services that stay resilient and scalable for business continuity.
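One common way to make per-instance data collectable in a central location is structured logging: each instance writes one JSON object per event, tagged with its service and instance name, and a log shipper forwards those lines to a central system. The sketch below uses only Python's standard `logging` module; the service names, the `fields` convention for extra data, and the `error_counts` aggregation are illustrative assumptions, not any particular monitoring product's format.

```python
import json
import logging
from typing import Dict, Iterable, Optional, TextIO, Tuple


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line tagged with its origin."""

    def __init__(self, service: str, instance: str) -> None:
        super().__init__()
        self.service = service
        self.instance = instance

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": round(record.created, 3),
            "level": record.levelname,
            "service": self.service,
            "instance": self.instance,
            "message": record.getMessage(),
        }
        # Optional structured data, passed as logger.info(..., extra={"fields": {...}}).
        fields = getattr(record, "fields", None)
        if isinstance(fields, dict):
            entry.update(fields)
        return json.dumps(entry)


def make_logger(service: str, instance: str, stream: Optional[TextIO] = None) -> logging.Logger:
    """Create a logger for one service instance (stream defaults to stderr)."""
    logger = logging.getLogger(f"{service}.{instance}")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service, instance))
    logger.handlers = [handler]
    return logger


def error_counts(lines: Iterable[str]) -> Dict[Tuple[str, str], int]:
    """Central side: count ERROR events per (service, instance)."""
    counts: Dict[Tuple[str, str], int] = {}
    for line in lines:
        entry = json.loads(line)
        if entry["level"] == "ERROR":
            key = (entry["service"], entry["instance"])
            counts[key] = counts.get(key, 0) + 1
    return counts
```

Tagging every line with its instance is what lets the central side tell a single failing instance apart from a service-wide problem.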

The digital entertainment giant Netflix is a prime example of designing and implementing microservices well. In 2011, Netflix started using the open source NGINX software in its delivery infrastructure, which offers a high-quality digital experience while handling millions of requests per second. Adrian Cockcroft, then Director of Web Engineering, envisioned dividing teams into smaller groups, each working end to end on the development of the platform, for a smooth streaming experience. As Cloud Architect, Cockcroft focused on discovering and formalizing the architecture, while Netflix engineers established several best practices for designing and implementing microservices.

Key Principles

Following are the key principles to remember while designing a microservices-based enterprise application.

Implement Domain-Driven Design

For the different domains of a microservices application to work in accordance with each other, the architecture relies on domain-driven design (DDD). In this strategy, the focus shifts to the core domain, which minimizes the possibility of the application getting out of hand. As a team, you should list the Entities, Repositories, Value Objects, and Services that define a bounded context of your model. This sets the limits around decisions such as who uses the model, how they use it, and where it applies within the larger application context.
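The DDD building blocks named above can be illustrated with a small, hypothetical "Ordering" bounded context. `Money` and `OrderLine` are value objects (immutable and compared by value), `Order` is an entity (it keeps its identity while its state changes), `OrderRepository` is the only way the context loads and saves orders, and `OrderingService` coordinates a use case. The in-memory repository is an assumption standing in for whatever database this service would own.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Money:
    """Value object: immutable and equal to any Money with the same fields."""
    amount_cents: int
    currency: str

    def add(self, other: "Money") -> "Money":
        if other.currency != self.currency:
            raise ValueError("cannot add different currencies")
        return Money(self.amount_cents + other.amount_cents, self.currency)

    def times(self, factor: int) -> "Money":
        return Money(self.amount_cents * factor, self.currency)


@dataclass(frozen=True)
class OrderLine:
    """Value object describing one line of an order."""
    sku: str
    quantity: int
    unit_price: Money


@dataclass
class Order:
    """Entity: identified by order_id, while its lines may change over time."""
    order_id: str
    customer_id: str
    currency: str
    lines: List[OrderLine] = field(default_factory=list)

    def add_line(self, line: OrderLine) -> None:
        # Business rules live inside the domain model, not in its callers.
        if line.quantity <= 0:
            raise ValueError("quantity must be positive")
        self.lines.append(line)

    def total(self) -> Money:
        total = Money(0, self.currency)
        for line in self.lines:
            total = total.add(line.unit_price.times(line.quantity))
        return total


class OrderRepository:
    """Repository: hides how orders are stored (in memory here, for illustration)."""

    def __init__(self) -> None:
        self._orders: Dict[str, Order] = {}

    def save(self, order: Order) -> None:
        self._orders[order.order_id] = order

    def get(self, order_id: str) -> Optional[Order]:
        return self._orders.get(order_id)


class OrderingService:
    """Application service: coordinates one use case within the bounded context."""

    def __init__(self, repository: OrderRepository) -> None:
        self._repository = repository

    def place_order(self, customer_id: str, currency: str, lines: List[OrderLine]) -> Order:
        order = Order(order_id=str(uuid.uuid4()), customer_id=customer_id, currency=currency)
        for line in lines:
            order.add_line(line)
        self._repository.save(order)
        return order
```

Everything outside this context would talk to it through its API rather than importing these classes, which is what keeps the bounded context's limits enforceable.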

Hide Away Implementation Details

Keeping the database private to the service is what lets each microservice run its own independent cycles. A good contract hides the service implementation details to reduce tight coupling between services and their consumers. This ensures that each service retains its autonomy. It is crucial to keep control over the intent of the service separate from its implementation. The contract can use REST over HTTP for this, exposing the external details while keeping the internal ones hidden.

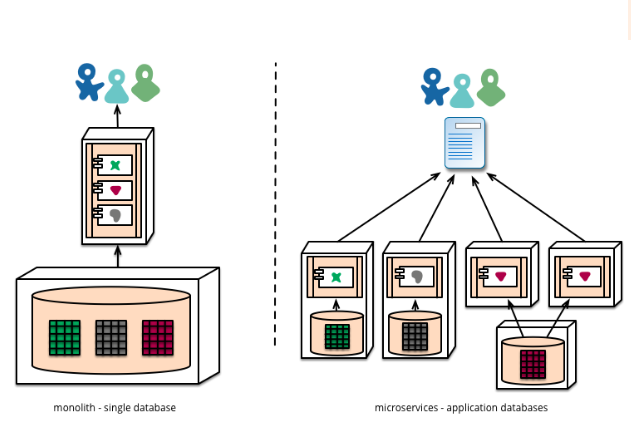

Decentralization

Another aspect of monolithic architecture is a single logical database for all applications in use, with decisions made centrally. Microservices, on the other hand, aim to decentralize this decision-making authority so that teams can work independently and at a faster pace. However, some key questions must be addressed for decentralization: who serves on which team; which tools, languages, and architectures will be used inside each service; when to create or retire services; and so on. Addressing these lets innovation thrive and reduces the risks to the system.

Failure Isolation

Building boundaries that separate services does reduce the risk of large-scale failure within a microservices architecture. However, it does not eliminate failure entirely. Within each service, failures of systems, networks, hardware, or the application itself can still occur.

In cases like these, how do you ensure that a failure doesn’t have a domino effect and spiral into the central system?

The discipline of chaos engineering tackles this challenge of building reliable distributed systems. Its primary objective is to inject the kinds of faults a system might encounter in the real world, for instance an application crash, a network failure, or the loss of an availability zone. The architecture is then tested on whether it can handle these expected and unexpected situations, which prepares it to be more resilient.
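Real chaos experiments (Netflix's Chaos Monkey, for example) run against production-like infrastructure, but the underlying idea fits in a toy, in-process sketch: wrap a dependency so it fails at a chosen rate, then check the hypothesis that the caller still serves every request by degrading gracefully instead of crashing. The `recommendations` dependency, the `homepage` fallback, and the failure rates are hypothetical.

```python
import functools
import random
from typing import Callable, Dict, List


class InjectedFault(ConnectionError):
    """Simulated dependency or network failure raised by the fault injector."""


def with_fault_injection(func: Callable, failure_rate: float, rng: random.Random) -> Callable:
    """Return a wrapper around func that fails with probability failure_rate."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault(f"injected failure in {func.__name__}")
        return func(*args, **kwargs)
    return wrapper


def recommendations(user_id: str) -> List[str]:
    """Hypothetical downstream service call."""
    return [f"pick-for-{user_id}"]


def homepage(user_id: str, get_recs: Callable[[str], List[str]]) -> Dict[str, object]:
    """Caller under test: falls back to a static list if the dependency fails."""
    try:
        recs = get_recs(user_id)
        degraded = False
    except ConnectionError:
        recs = ["popular-1", "popular-2"]
        degraded = True
    return {"user": user_id, "recommendations": recs, "degraded": degraded}


def run_experiment(failure_rate: float, requests: int = 1000, seed: int = 7) -> Dict[str, int]:
    """Inject faults and count pages served, and how many of them were degraded."""
    rng = random.Random(seed)  # seeded so the experiment is reproducible
    flaky = with_fault_injection(recommendations, failure_rate, rng)
    served = degraded = 0
    for i in range(requests):
        page = homepage(f"user-{i}", flaky)  # an unhandled error would abort the run
        served += 1
        degraded += int(page["degraded"])
    return {"served": served, "degraded": degraded}
```

If `homepage` lacked its `except` branch, the first injected fault would escape and end the experiment, which is exactly the kind of weakness chaos engineering is meant to surface before real users do.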

DevOps Culture

DevOps teams work in sync with the microservices architecture. DevOps automates the build and deployment processes, while microservices divide the application into services that make that automation practical. Both practices are designed to offer greater agility and efficiency, so that enterprise operations run without hiccups.

Reuse

A distinctive principle of microservices is reuse. The key is to build a microservices architecture for non-project-specific purposes. Developers can clone a repository, set new configurations, and add the new project’s logic to reuse the application. This improves productivity and builds a culture of sharing work, ultimately bringing innovation to the limelight.
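Here is one way to picture this, as a hypothetical sketch rather than a prescribed structure: a reusable service template ships sensible defaults, and each new project overrides only the settings it needs and registers its own handlers. `DEFAULTS`, `merge_config`, and `ServiceTemplate` are illustrative names.

```python
from typing import Any, Callable, Dict

# Defaults shipped with the shared template repository (illustrative values).
DEFAULTS: Dict[str, Any] = {
    "service_name": "template-service",
    "port": 8080,
    "log_level": "INFO",
    "retries": {"max_attempts": 3, "backoff_s": 0.5},
}


def merge_config(base: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively overlay project settings on the defaults without mutating either."""
    merged = dict(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = value
    return merged


class ServiceTemplate:
    """Reusable skeleton: projects supply configuration and business handlers."""

    def __init__(self, overrides: Dict[str, Any],
                 handlers: Dict[str, Callable[[Any], Any]]) -> None:
        self.config = merge_config(DEFAULTS, overrides)
        self.handlers = dict(handlers)

    def handle(self, route: str, payload: Any) -> Any:
        if route not in self.handlers:
            raise KeyError(f"{self.config['service_name']}: no handler for {route!r}")
        return self.handlers[route](payload)
```

A new "invoices" project, for instance, might only set `service_name` and `retries.max_attempts` and register a `/total` handler, inheriting the port, log level, and backoff from the template.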

Conclusion

Microservices have emerged as a trendsetter in IT departments across the globe.

Organizations can apply these principles when setting up their operations to ease deployment and make their projects more agile.

OpenSense Labs, a pioneer in Drupal development, offers services to clients built on similar principles. Drop a message at [email protected].

Also, share your thoughts on our social networks: Twitter, LinkedIn, and Facebook.
