Agentic AI Dynamics: Future of Data Retrieval

In a world of ever-growing data, finding the right information at the right time can feel like searching for a needle in a haystack. That’s why we decided to build an Agentic AI internal search engine, a system that uses multiple specialized agents to retrieve and compile the most relevant answers from different data sources.

In this blog, we’ll take you on a tour of how these agents interact, how the flow of information is orchestrated, and why an Agentic AI approach can make all the difference in delivering quick, accurate results.

Before we continue with this blog, if you're interested in advanced AI services customized for your business and ethical, growth-oriented AI solutions, take a look at what we offer.

Let's explore how the Agentic AI approach functions!

Agentic AI: How the Pieces Fit Together

Agentic AI workflows are AI-driven processes in which autonomous AI agents make decisions, take actions, and handle tasks with minimal human input. These workflows leverage key characteristics of intelligent agents, such as reasoning, planning, and the use of tools, to efficiently execute complex tasks.

Traditional automation techniques, such as robotic process automation (RPA), depend on set rules and predefined patterns, making them effective for repetitive tasks that follow a consistent format. In contrast, Agentic AI workflows are more flexible, allowing them to adjust to real-time data and unexpected situations.

They tackle complex issues through a step-by-step, iterative approach, allowing AI agents to break down business processes, adjust in real time, and improve their actions over time.

In short, an Agentic AI flow describes how multiple agents, each specializing in a particular task, collaborate to produce a seamless user experience.

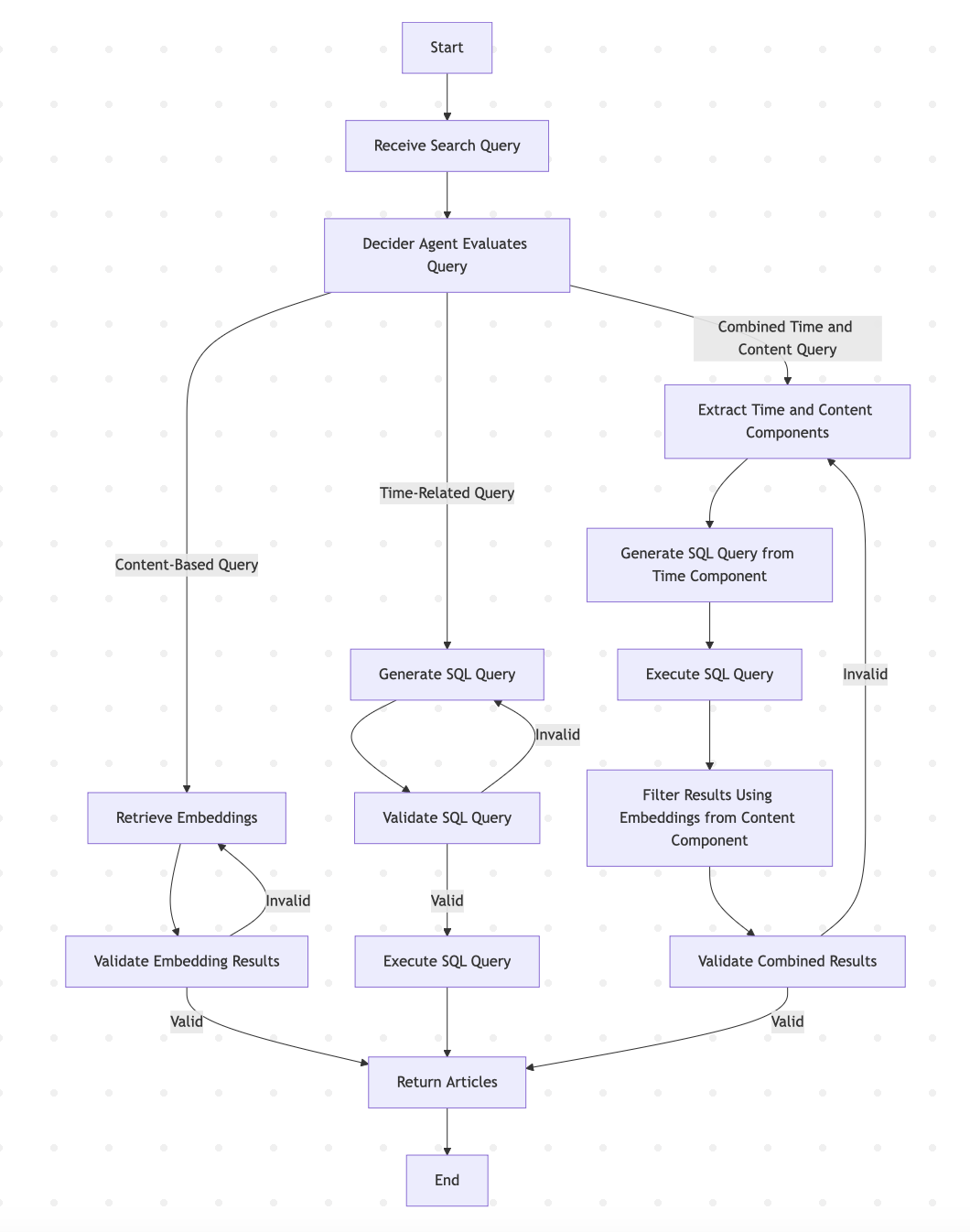

Here’s a quick snapshot of our Agentic AI search engine flow:

At every step, the Search Coordinator ensures that the right agent is called upon, that data flows back properly, and that the final answer is neatly packaged and returned to the user.

Agentic AI: AI Agents And Their Types

In artificial intelligence (AI), a workflow is considered non-agentic if it lacks an AI agent. An AI agent is a system or program that can independently carry out tasks for a user or another system by creating its own workflow and using the tools at its disposal.

Agentic AI workflows are built on AI agents, which extend large language models (LLMs) with the ability to act. Each agent is crafted to embody a specific personality, role, or function, with its own distinct traits. Agents go beyond standard AI abilities by not only responding but also actively interacting with tools and resources. This flexibility lets them carry out tasks such as performing web searches, running code, and editing images, greatly expanding the usefulness and scope of LLMs.

Here are the AI agents that we have built in our application:

1. Search Coordinator: The Orchestrator Agent

The Search Coordinator, which functions as the orchestrator agent, is at the center of this Agentic AI system. When a user types a query, the Search Coordinator steps in:

- Interprets the Query: It uses an LLM (Large Language Model) to parse the user’s question and figure out what type of information is being requested.
- Decides Which Agent to Dispatch:
  - Is the query best served by a small local database?
  - A massive enterprise data warehouse?
  - An external web source?
  - Or does the answer lie in unstructured documents?

By analyzing the query’s nature, the Search Coordinator dynamically routes it to the appropriate specialized agent.
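The routing step can be sketched in Python as a dispatch table keyed by route. This is a minimal sketch under stated assumptions, not our production code: `Route`, `SearchCoordinator`, and `keyword_classifier` are hypothetical names, and simple keyword rules stand in for the LLM call that would normally interpret the query, so the example runs offline.

```python
from enum import Enum
from typing import Callable, Dict


class Route(Enum):
    SMALL_DB = "small_db"
    LARGE_DB = "large_db"
    WEB = "web"
    DOCUMENTS = "documents"


def keyword_classifier(query: str) -> Route:
    """Hypothetical stand-in for the LLM call that labels a query.

    A real coordinator would prompt an LLM to answer with one of the Route
    values; simple keyword rules keep this sketch runnable offline.
    """
    q = query.lower()
    if any(w in q for w in ("trend", "news", "latest")):
        return Route.WEB
    if any(w in q for w in ("guideline", "policy", "contract", "manual")):
        return Route.DOCUMENTS
    if any(w in q for w in ("all branches", "nationwide", "enterprise")):
        return Route.LARGE_DB
    return Route.SMALL_DB


class SearchCoordinator:
    def __init__(
        self,
        agents: Dict[Route, Callable[[str], str]],
        classify: Callable[[str], Route] = keyword_classifier,
    ) -> None:
        self.agents = agents      # one handler per specialized agent
        self.classify = classify  # swap in an LLM-backed classifier here

    def handle(self, query: str) -> str:
        route = self.classify(query)      # decide which agent fits the query
        return self.agents[route](query)  # dispatch and return its answer


# Usage with placeholder agents that just tag the query.
coordinator = SearchCoordinator({
    Route.SMALL_DB: lambda q: f"[small SQL agent] {q}",
    Route.LARGE_DB: lambda q: f"[large SQL agent] {q}",
    Route.WEB: lambda q: f"[web search agent] {q}",
    Route.DOCUMENTS: lambda q: f"[RAG agent] {q}",
})
print(coordinator.handle("What are the top dessert trends this summer in New York?"))
```

Keeping the classifier as a pluggable callable means the keyword rules can be replaced by an LLM prompt without touching the dispatch logic.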

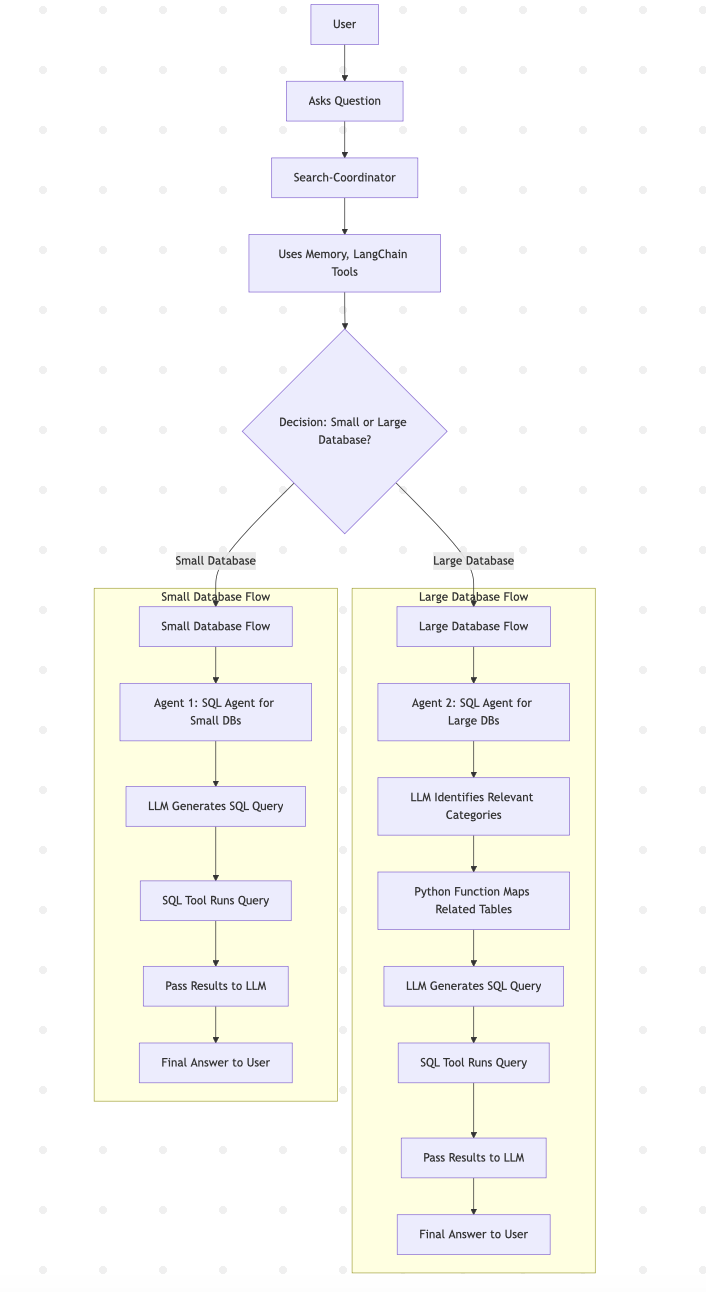

2. SQL Agents: Small vs. Large Databases

Small Database Agent

When the Search Coordinator detects that a query can be satisfied by a small local database, it delegates the request to a specialized SQL Agent for Small DBs. This agent:

- Uses an LLM to interpret the user’s query and identify which tables or columns are relevant.
- Generates an SQL query optimized for a small database.
- Executes the query and returns the results.
- Hands the results to the LLM, which formats them into a user-friendly answer.
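The four steps above can be sketched with Python’s built-in `sqlite3` module. This is a minimal illustration, not our implementation: `describe_schema`, `small_db_agent`, and the two LLM hooks (`generate_sql`, `format_answer`) are hypothetical names, the LLM calls are replaced by stub lambdas so the example runs offline, and the SELECT-only guard is an extra safety check added for the sketch.

```python
import sqlite3
from typing import Callable, List, Tuple

Rows = List[Tuple]


def describe_schema(conn: sqlite3.Connection) -> str:
    """List each table with its columns so the LLM can pick the relevant ones."""
    lines = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info returns one row per column; index 1 is the column name.
        cols = [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]
        lines.append(f"{table}({', '.join(cols)})")
    return "\n".join(lines)


def small_db_agent(
    conn: sqlite3.Connection,
    question: str,
    generate_sql: Callable[[str, str], str],
    format_answer: Callable[[str, Rows], str],
) -> str:
    schema = describe_schema(conn)
    sql = generate_sql(question, schema)  # LLM step: question + schema -> SQL
    # Guard added for this sketch: only allow read-only SELECT statements.
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError(f"refusing to run non-SELECT SQL: {sql!r}")
    rows = conn.execute(sql).fetchall()
    return format_answer(question, rows)  # LLM step: rows -> readable answer


# Usage with stub "LLM" functions and a tiny in-memory database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (1, 1, 12.5), (2, 1, 8.0), (3, 2, 20.0);
""")
answer = small_db_agent(
    conn,
    "How many orders has Ada placed?",
    generate_sql=lambda q, s: "SELECT COUNT(*) FROM orders WHERE customer_id = 1",
    format_answer=lambda q, rows: f"Ada has placed {rows[0][0]} orders.",
)
print(answer)
```

Passing the schema summary into the SQL-generation step is what lets the LLM pick the right tables and columns without hard-coding them.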

Large Database Agent

For more complex enterprise data, the Search Coordinator sends the query to a SQL Agent for Large DBs. This agent:

- Classifies which categories or tables (potentially out of hundreds) might contain relevant information.
- Uses a Python function to map those categories to the correct tables.
- Constructs a more robust SQL query, tailored for large-scale data retrieval.
- Runs the query, collects the results, and passes them back to the LLM for final processing.
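The category-to-table mapping step might look like the sketch below. Everything here is assumed for illustration: the `CATEGORY_TABLES` catalog, its table names, and the keyword-based `classify_categories`, which stands in for the LLM classification step. What it shows is that only the tables for the selected categories go into the SQL-generation prompt, rather than the full schema of hundreds of tables.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical catalog: business category -> tables holding that data.
CATEGORY_TABLES: Dict[str, List[str]] = {
    "customers": ["crm_customers", "crm_loyalty_members"],
    "orders": ["sales_orders", "sales_order_lines"],
    "stores": ["ops_branches", "ops_regions"],
    "inventory": ["inv_stock_levels", "inv_suppliers"],
}

# Keyword hints standing in for the LLM classification step.
CATEGORY_HINTS: Dict[str, Tuple[str, ...]] = {
    "customers": ("customer", "loyalty"),
    "orders": ("order", "sales", "revenue"),
    "stores": ("branch", "store", "location"),
    "inventory": ("stock", "supplier", "ingredient"),
}


def classify_categories(question: str) -> Set[str]:
    q = question.lower()
    return {cat for cat, hints in CATEGORY_HINTS.items() if any(h in q for h in hints)}


def tables_for_categories(categories: Set[str]) -> List[str]:
    """Map categories to concrete table names, deduplicated and sorted."""
    return sorted({t for cat in categories for t in CATEGORY_TABLES.get(cat, [])})


def build_sql_prompt(question: str, tables: List[str]) -> str:
    """Only the selected tables are passed to the LLM, not the whole catalog."""
    table_list = "\n".join(f"- {t}" for t in tables)
    return (
        "Write one read-only SQL query that answers the question, "
        f"using only these tables:\n{table_list}\nQuestion: {question}"
    )


question = "How many orders did John Doe place last month across all branches?"
tables = tables_for_categories(classify_categories(question))
print(build_sql_prompt(question, tables))
```

Narrowing the prompt this way keeps it small enough for the LLM to reason about, even when the warehouse has far more tables than could fit in a single prompt.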

In both scenarios, each SQL Agent stays focused on its own domain, which helps keep its queries fast and accurate.

Example: Joe owns a small bakery in his neighborhood and uses a basic database to keep track of customer orders. One morning, he wants to know, “How many orders did John Doe place last month?” His system uses an Agentic AI Flow to handle such queries. Since his bakery's data is small and simple, the Search Coordinator quickly delegates the task to the Small Database Agent. This agent interprets the question using a language model, identifies the relevant tables (like customers and orders), creates a quick SQL query, runs it, and returns the result: “John placed 3 orders last month.”

Now, imagine Joe expands and opens multiple bakery branches across the country, using a complex enterprise system with millions of records. He asks the same question. This time, the Search Coordinator detects the complexity and passes the query to the Large Database Agent. This agent classifies which types of data are needed, maps them to the right tables (which could now be hundreds), and builds a robust query to search across locations. It fetches the data and hands it back to the language model, which replies, “John placed 154 orders across all your bakery branches last month.” In both cases, the right AI agent is activated based on the data size, ensuring Joe always gets fast, accurate answers, whether his business is small or nationwide.
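Answering Joe’s question correctly depends on the generated SQL turning “last month” into a concrete date range. The sketch below shows one way that query could look in SQLite. The `customers`/`orders` schema, the helper names `last_month_range` and `orders_last_month`, and the sample rows are all made up for illustration, and `today` is passed in explicitly so the result does not depend on the day the code runs.

```python
import sqlite3
from datetime import date
from typing import Tuple


def last_month_range(today: date) -> Tuple[str, str]:
    """Return ISO dates [start, end) spanning the calendar month before `today`."""
    first_of_this_month = today.replace(day=1)
    if first_of_this_month.month == 1:
        first_of_last_month = first_of_this_month.replace(
            year=first_of_this_month.year - 1, month=12
        )
    else:
        first_of_last_month = first_of_this_month.replace(
            month=first_of_this_month.month - 1
        )
    return first_of_last_month.isoformat(), first_of_this_month.isoformat()


def orders_last_month(conn: sqlite3.Connection, customer: str, today: date) -> int:
    start, end = last_month_range(today)
    (count,) = conn.execute(
        """
        SELECT COUNT(*)
        FROM orders AS o
        JOIN customers AS c ON c.id = o.customer_id
        WHERE c.name = ? AND o.order_date >= ? AND o.order_date < ?
        """,
        (customer, start, end),  # bound parameters instead of string formatting
    ).fetchone()
    return count


# Hypothetical sample data; ISO date strings compare correctly as text.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT);
    INSERT INTO customers VALUES (1, 'John Doe'), (2, 'Jane Roe');
    INSERT INTO orders (customer_id, order_date) VALUES
        (1, '2024-04-28'), (1, '2024-05-03'), (1, '2024-05-17'),
        (1, '2024-05-31'), (1, '2024-06-02'), (2, '2024-05-10');
""")
print(orders_last_month(conn, "John Doe", today=date(2024, 6, 15)))
```

Using a half-open range (`>= start AND < end`) avoids off-by-one mistakes at month boundaries, which is an easy detail for generated SQL to get wrong.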

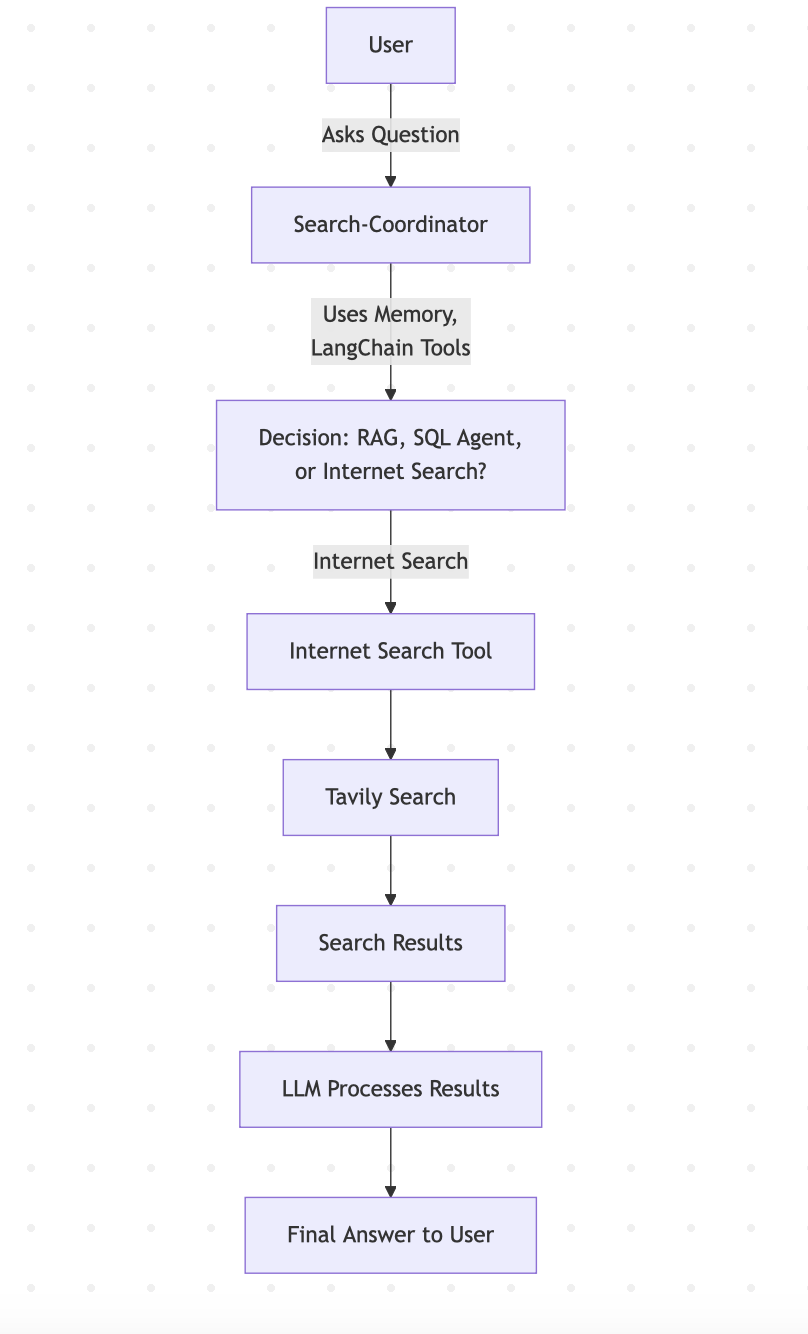

3. Web Search Agent: Tapping External Sources

Not everything lives within your internal databases. Sometimes, the best answer might be found in public data or real-time news. Enter the Web Search Agent:

- The Search Coordinator decides that external data is needed, perhaps because the user’s question is time-sensitive or involves topics not covered internally.
- The query is dispatched to the Web Search Agent, which taps into external search engines (like Google or a custom API).
- The agent fetches the results and returns them to the LLM.
- The LLM then distills these search snippets into a concise, accurate summary.
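The hand-off from the Web Search Agent to the LLM can be sketched as below. The search backend is injected as a plain function (`search_fn`), so no particular search API is assumed; `SearchResult`, `web_search_agent`, `fake_search`, and the `example.com` results are hypothetical. The sketch drops duplicate URLs, keeps the top few results, and numbers them in the prompt so the LLM’s summary can cite its sources.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SearchResult:
    title: str
    url: str
    snippet: str


def web_search_agent(
    query: str,
    search_fn: Callable[[str], List[SearchResult]],
    max_results: int = 3,
) -> str:
    """Fetch results, drop duplicate URLs, and build a summarization prompt."""
    seen = set()
    picked: List[SearchResult] = []
    for r in search_fn(query):
        if r.url in seen:
            continue  # the same page returned twice adds no information
        seen.add(r.url)
        picked.append(r)
        if len(picked) == max_results:
            break
    sources = "\n".join(
        f"[{i}] {r.title} ({r.url}): {r.snippet}" for i, r in enumerate(picked, 1)
    )
    return (
        "Summarize the answer using only the sources below, citing them by number.\n"
        f"Question: {query}\nSources:\n{sources}"
    )


def fake_search(query: str) -> List[SearchResult]:
    # Stand-in for a real search backend.
    return [
        SearchResult("Summer dessert trends", "https://example.com/a", "Mochi donuts are popular."),
        SearchResult("Summer dessert trends (mirror)", "https://example.com/a", "Mochi donuts are popular."),
        SearchResult("Matcha everything", "https://example.com/b", "Matcha pastries are in demand."),
    ]


prompt = web_search_agent("What are the top dessert trends this summer in New York?", fake_search)
print(prompt)
```

The returned string is what would be sent to the LLM for the final summarization step.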

This Agentic AI approach to external data ensures your internal search engine stays relevant, even for queries that go beyond your internal data sources.

Example: As Joe’s bakery business continues to grow, he starts expanding not just locally, but also into new cities with different trends and customer preferences. One day, Joe wonders, “What are the top dessert trends this summer in New York?” His internal database doesn’t have that kind of market insight, so the Search Coordinator realizes this is a query that goes beyond internal data. It quickly delegates the task to the Web Search Agent. This agent goes out to public sources, like Google, food blogs, and industry reports, fetches the most relevant and recent content, and passes it back. The language model then processes this information and delivers a clear, concise answer to Joe, such as: “This summer, top dessert trends in New York include mochi donuts, matcha-flavored pastries, and frozen custard sandwiches.” Thanks to the Web Search Agent, Joe can make data-informed decisions that align with market demand, even when that data lives outside his systems. This ensures his bakery stays innovative and competitive in every location he operates.

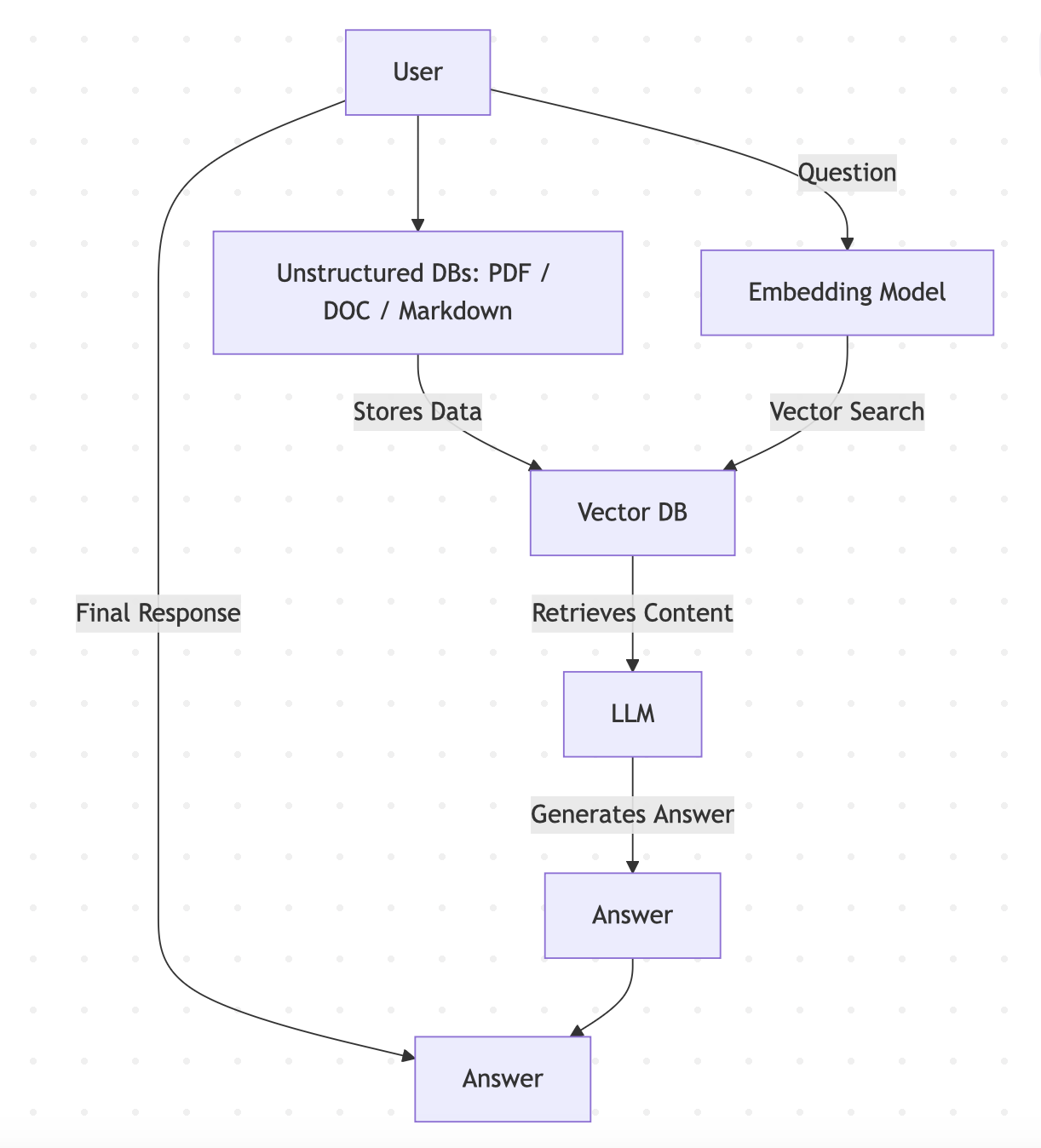

4. RAG Agent: Retrieval-Augmented Generation for Documents

Many organizations possess a large number of unstructured documents, such as PDFs, Word files, and presentations, which hold important information. This is where the RAG Agent plays a crucial role:

- The user’s query is handed off to the RAG Agent, which runs a vector search against a vector database.
- Each document has already been converted into a vector (an embedding), and the user’s query is converted the same way.
- By comparing these vectors, the agent identifies the documents (or passages) most relevant to the query.
- The selected text is then fed to the LLM, which synthesizes it into a clear, cohesive response.
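To make the embed-and-compare step concrete, here is a toy in-memory vector store. It only approximates the real thing: `embed` uses normalized word counts instead of a trained embedding model, and `InMemoryVectorStore` is a hypothetical stand-in for a vector database. The ranking uses cosine similarity between the query vector and each stored passage vector, a common choice of similarity measure in vector search.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def embed(text: str) -> Vector:
    """Toy embedding: L2-normalized word counts (not a trained model)."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {w: c / norm for w, c in counts.items()}


def cosine(a: Vector, b: Vector) -> float:
    # Both vectors are unit length, so the dot product equals the cosine.
    return sum(v * b.get(w, 0.0) for w, v in a.items())


class InMemoryVectorStore:
    def __init__(self) -> None:
        self.items: List[Tuple[str, Vector]] = []

    def add(self, passage: str) -> None:
        self.items.append((passage, embed(passage)))  # embedded at index time

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)  # the query is embedded the same way at search time
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [passage for passage, _ in ranked[:k]]


store = InMemoryVectorStore()
for passage in [
    "Holiday cookie packaging: recyclable red boxes with gold ribbons and allergen labels.",
    "Supplier contract terms for flour and butter deliveries.",
    "Summer menu: mochi donuts and matcha pastries.",
]:
    store.add(passage)

question = "What packaging guidelines did we finalize for the holiday cookie line?"
context = store.search(question, k=1)
prompt = "Answer using only this context:\n" + "\n".join(context) + f"\nQuestion: {question}"
print(prompt)
```

Swapping `embed` for a real embedding model and the list for a vector database changes the quality and scale, but not the shape of the retrieve-then-generate flow.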

The RAG Agent unlocks insights hidden in unstructured data, making it as searchable as a well-structured database.

Example: As Joe’s bakery chain expands, he starts collecting a lot of internal documents: training manuals, supplier contracts, seasonal recipe PDFs, and presentation decks from past strategy meetings. One day, Joe wants to know, “What packaging guidelines did we finalize for the holiday cookie line last year?” This information isn’t in a database; it’s buried inside a lengthy PDF report from a team meeting months ago. The Search Coordinator recognizes this and hands off the query to the RAG (Retrieval-Augmented Generation) Agent. The RAG Agent taps into a Vector Database, where all documents have been pre-processed and converted into vector embeddings. It also turns Joe’s query into a vector and compares it with the stored ones to find the most relevant passages. Once it finds the section in the PDF where the packaging guidelines were mentioned, it sends that content to the language model. The LLM then synthesizes it into a neat answer for Joe: “The finalized packaging included recyclable red boxes with gold ribbons, featuring allergen labels and QR codes for online reorders.” Thanks to the RAG Agent, Joe can now instantly retrieve critical decisions hidden in piles of unstructured documents, turning buried knowledge into actionable insights.

Agentic AI: Memory & LangChain Tools

Behind the agents, two supporting components play a crucial role:

- Memory: This is where the system keeps track of the conversation’s context. If a user refines or updates a query, Memory helps the Search Coordinator and the other agents remember what was previously asked.
- LangChain Tools: LangChain is a framework that makes it easier to build applications on top of large language models. It helps orchestrate prompt templates, manage conversation flows, and coordinate the different agents, ensuring that each step in the Agentic AI flow is clear and well-defined.
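A conversation memory can be as simple as a bounded buffer of recent turns that is prepended to the Search Coordinator’s prompt. The sketch below is plain Python rather than LangChain (LangChain ships its own memory components, whose APIs have changed across releases); `ConversationMemory`, `build_coordinator_prompt`, and the five-turn default are assumptions for illustration.

```python
from collections import deque
from typing import Deque, Tuple


class ConversationMemory:
    """Keeps the most recent question/answer turns for follow-up queries."""

    def __init__(self, max_turns: int = 5) -> None:
        # deque(maxlen=...) drops the oldest turn automatically when full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def save(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))

    def as_context(self) -> str:
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)


def build_coordinator_prompt(memory: ConversationMemory, question: str) -> str:
    """Prepend recent turns so a follow-up like 'the month before' is resolvable."""
    history = memory.as_context() or "(no previous turns)"
    return f"Conversation so far:\n{history}\n\nNew question: {question}"


memory = ConversationMemory(max_turns=2)
memory.save("How many orders did John Doe place last month?", "John placed 3 orders last month.")
print(build_coordinator_prompt(memory, "And the month before that?"))
```

Because the buffer is bounded, the prompt stays a predictable size even in long sessions; older context is simply forgotten.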

With Memory and LangChain Tools working in the background, the Search Coordinator can focus on delegating tasks to the right agents without losing track of prior context.

Agentic AI: Putting It All Together

When you zoom out, you can see how each agent (the small and large SQL Agents, the Web Search Agent, and the RAG Agent) collaborates under the guidance of the Search Coordinator:

- Search Coordinator: Interprets the query and decides on the best route.
- Memory: Maintains context, especially for follow-up queries.
- SQL Agents: Fetch structured data from small or large databases.
- Web Search Agent: Gathers external information.
- RAG Agent: Retrieves relevant passages from unstructured documents.
- Large Language Models: Synthesize the data, turning raw information into polished answers.

This is the essence of an Agentic AI flow, a carefully choreographed system where each agent plays a distinct role, yet all work together seamlessly.

Agentic AI: Lessons Learned & Future Directions

Building an Agentic AI internal search engine has been a learning experience in modularity, context retention, and decision-making. A few highlights:

- Modularity: Separating responsibilities across different agents keeps the system maintainable and easier to upgrade.
- Context Preservation: The Memory component ensures that multi-step or evolving queries don’t lose context.
- Scalable Orchestration: The Search Coordinator’s Agentic AI decision-making helps the system adapt to everything from small queries to large-scale enterprise requests.
- Future Enhancements: We’re exploring more advanced classification techniques and even reinforcement learning to make the Agentic AI flow even smarter and more intuitive over time.

Key Takeaways

1. On the surface, this Agentic AI internal search engine might look like a simple search box. But under the hood, there’s a network of specialized agents working in harmony.

2. With an Agentic AI flow, where each agent tackles a specific type of task, we can deliver fast, accurate answers for a wide range of queries, whether they involve small databases, massive data warehouses, external websites, or unstructured documents.

3. An Agentic AI approach can revolutionize your internal search.

4. Memory tracks the context of the conversation. When a user modifies a query, it helps the Search Coordinator and other agents recall what was previously discussed.

5. LangChain assists in organizing prompt templates, overseeing conversation flows, and coordinating various agents, making sure that every stage in the Agentic AI process is clear and well-structured.
