Do you know what explainable AI tools are?

Explainable AI tools are programs that show how an AI makes its choices. They help people understand the decision-making process of AI, which builds trust and allows for better management and adjustment of AI systems.

In this blog, we will talk about SHAP, an explainable AI tool whose methodology provides a robust framework for understanding how each feature influences a model's predictions.

Let’s see how explainable AI tools like SHAP work!

Explainable AI Tools: What is SHAP?

SHAP (SHapley Additive exPlanations) is a powerful and widely used method for explaining machine learning models. It helps interpret the output of machine learning models, especially complex ones such as deep learning or ensemble models, by quantifying the contribution of each feature to a model's prediction.

SHAP is built on Shapley values, a concept from cooperative game theory that provides a fair way to distribute a payout (in our case, the ML model's prediction) among the contributing features. By assigning each feature a fair contribution score, SHAP makes the model's decision-making transparent.

Incorporating SHAP into our workflow enhances model interpretability and makes models easier to trust. As ML models become more intricate, a clear understanding of how features contribute to predictions is essential for improving model performance and ensuring accountability.

Overall, explainable AI tools like SHAP are indispensable for bridging the gap between complex ML models and human understanding. By adopting them, AI/ML engineers and data scientists can make better-informed decisions, refine their models, and ultimately build more transparent and reliable AI.

The transparency SHAP provides is especially useful when explaining complex ML model predictions to others, including how each feature affects the model’s output.

Explainable AI tools such as SHAP are impressive because they assist people in grasping how AI makes its decisions.

Explainable AI Tools: Why Use SHAP?

As models become more complex, especially with the introduction of LLMs, interpreting their results becomes increasingly difficult.

A model can be understood through its underlying math and architecture, but explaining how it reaches a particular decision is much harder.

This is where SHAP comes into the picture. This powerful Python library not only provides an interpretable view of how a model makes its predictions but also quantifies the importance of each feature in black-box models, such as complex ML models. These insights help streamline the process of fine-tuning the model.

How To Interpret SHAP Values?

Before moving on to a practical application, let us understand how Shapley values are calculated. We have used a transformer model to predict a value (the sentiment) based on features; in our case, the features are the individual words in a sentence. The Shapley value of a feature (word) is a weighted average of its marginal contributions across all subsets of the other features (words).

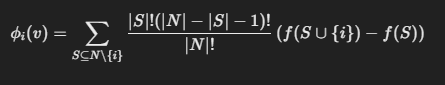

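The Shapley value for feature i (or word i) in the model can be expressed as:

$$\Phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!}\left[f(S \cup \{i\}) - f(S)\right]$$

where:

- Φi → Shapley value of feature i

- N → Set of all features

- S → A subset of features excluding i

- |N|, |S| → Number of elements in N and S

- f(S) → Model prediction using only the features in S

- f(S ∪ {i}) → Model prediction when feature i is added to S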
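To see why this is a weighted average rather than a plain one, take the five-word example sentence that follows, so |N| = 5. The weight |S|!(|N| − |S| − 1)!/|N|! depends only on the size of S; multiplying it by the number of subsets of each size (chosen from the 4 words other than i) shows that every subset size receives the same total weight, 1/5, and that all the weights sum to 1:

$$
\begin{aligned}
|S| = 0 &: \ \binom{4}{0}\cdot\frac{0!\,4!}{5!} = 1 \cdot \frac{24}{120} = \frac{1}{5}\\
|S| = 1 &: \ \binom{4}{1}\cdot\frac{1!\,3!}{5!} = 4 \cdot \frac{6}{120} = \frac{1}{5}\\
|S| = 2 &: \ \binom{4}{2}\cdot\frac{2!\,2!}{5!} = 6 \cdot \frac{4}{120} = \frac{1}{5}\\
|S| = 3 &: \ \binom{4}{3}\cdot\frac{3!\,1!}{5!} = 4 \cdot \frac{6}{120} = \frac{1}{5}\\
|S| = 4 &: \ \binom{4}{4}\cdot\frac{4!\,0!}{5!} = 1 \cdot \frac{24}{120} = \frac{1}{5}
\end{aligned}
$$

So each single-word subset counts for 1/20, while the empty set and the full set of the other four words each count for 1/5.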

Let's take an example sentence, “OSL is a dynamic team,” which is similar to the text comments in our dataset.

Words in the sentence:

- w1 = “OSL”

- w2 = “is”

- w3 = “a”

- w4 = “dynamic”

- w5 = “team”

After the sentence is tokenized, our loaded model assigns a prediction score to it, and it can do the same for any subset of its words. These scores are the model predictions f used in the formula.

Now, let us see how we calculate the Shapley value for the word “dynamic” in the above sentence.

- Subsets without “dynamic”: Consider the subsets of the remaining words, such as {w1, w2}, {w1, w3}, and so on.

- Marginal contribution for each subset: For the subset S = {w1, w2}, calculate:

  f(S) = Prediction using “OSL is”

  f(S ∪ {w4}) = Prediction using “OSL is dynamic”

  The marginal contribution of the word “dynamic” is the difference f(S ∪ {w4}) − f(S).

- Repeat for all subsets: For each coalition of words excluding “dynamic”, calculate the marginal contribution.

- Average the marginal contributions: Once you have the marginal contributions for all possible subsets, take their weighted average to get the Shapley value for the word “dynamic”:

  $$\Phi_{w_4} = \sum_{S \subseteq N \setminus \{w_4\}} \frac{|S|!\,(5 - |S| - 1)!}{5!}\left[f(S \cup \{w_4\}) - f(S)\right]$$

- Repeat these steps for each of the remaining words to get their respective Shapley values.
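The procedure above can be sketched in Python for the example sentence. This is a minimal, exact implementation under stated assumptions: instead of the transformer model, it uses a hypothetical toy scorer `toy_model` with made-up word effects and one made-up interaction between “dynamic” and “team”, and it enumerates every subset, which is only practical for short inputs (SHAP typically relies on approximations or model-specific algorithms for real models).

```python
from itertools import combinations
from math import factorial

# Words of the example sentence; w4 = "dynamic" is the word explained in the text.
WORDS = ["OSL", "is", "a", "dynamic", "team"]

# Hypothetical per-word effects for a toy scorer (not real model output).
TOY_EFFECTS = {"OSL": 0.05, "is": 0.0, "a": 0.0, "dynamic": 0.4, "team": 0.1}


def toy_model(subset):
    """Hypothetical f(S): score for the sentence keeping only the words in S."""
    score = 0.1 + sum(TOY_EFFECTS[w] for w in WORDS if w in subset)
    # Hypothetical interaction: "dynamic" and "team" score higher together.
    if "dynamic" in subset and "team" in subset:
        score += 0.2
    return score


def shapley_value(target, words, f):
    """Exact Shapley value of `target`: weighted average, over all subsets S of
    the other words, of the marginal contribution f(S ∪ {target}) - f(S)."""
    others = [w for w in words if w != target]
    n = len(words)
    total = 0.0
    for size in range(len(others) + 1):
        # The weight |S|! (|N| - |S| - 1)! / |N|! depends only on the subset size.
        weight = factorial(size) * factorial(n - size - 1) / factorial(n)
        for subset in combinations(others, size):
            s = set(subset)
            total += weight * (f(s | {target}) - f(s))
    return total


if __name__ == "__main__":
    for word in WORDS:
        print(f"{word}: {shapley_value(word, WORDS, toy_model):+.3f}")
```

With these toy numbers, “dynamic” receives its own effect (0.4) plus half of the 0.2 bonus it shares with “team”, for a Shapley value of 0.5. Splitting interaction credit equally between the words involved is what makes the attribution "fair".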

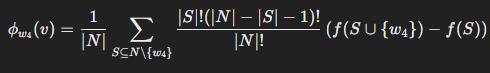

CODE:

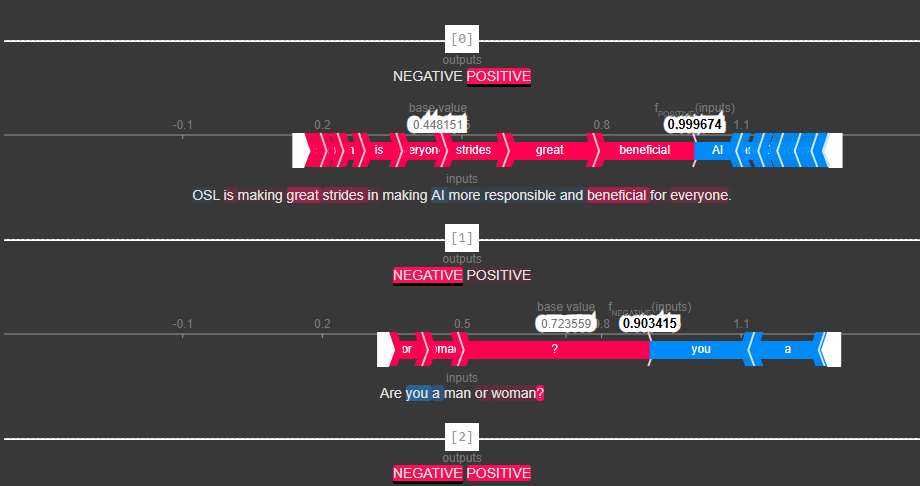

Understanding the terms in SHAP Interpretation

- Base Value: Represents the average prediction of the model on the dataset.

- Output Value: Represents the model's final prediction for the specific text being visualized.

- Colored Highlights: Words within the text are highlighted in varying shades of red and blue.

- Red: Indicates that the word pushes the model's prediction towards positive sentiment. The intensity of the red corresponds to the magnitude of the positive contribution; a darker red means a stronger positive influence.

- Blue: Indicates that the word pushes the model's prediction towards negative sentiment. The intensity of the blue corresponds to the magnitude of the negative contribution; a darker blue means a stronger negative influence.

- Words that are not highlighted have little to no impact on the positive sentiment prediction.
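These terms fit together through SHAP's additivity property: the base value plus the sum of all word contributions equals the output value, and the sign of each contribution decides its highlight color. The sketch below is a toy illustration under stated assumptions, not SHAP's implementation: the scorer `f`, its word effects, and the 0.01 "no highlight" threshold are hypothetical, and the base value is taken as the prediction with every word masked out, f(∅), standing in for the average prediction described above.

```python
from itertools import combinations
from math import factorial

# Hypothetical word effects for a toy positive-sentiment scorer.
WORD_EFFECTS = {"great": 0.3, "beneficial": 0.25, "AI": -0.1, "making": 0.0}


def f(words):
    """Hypothetical model output for a text that keeps only `words`."""
    score = 0.4 + sum(v for w, v in WORD_EFFECTS.items() if w in words)
    if "great" in words and "beneficial" in words:
        score += 0.1  # hypothetical interaction between the two positive words
    return score


def shapley_values(features, model):
    """Exact Shapley values by enumerating every subset of the other features."""
    n = len(features)
    values = {}
    for target in features:
        others = [x for x in features if x != target]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (model(s | {target}) - model(s))
        values[target] = total
    return values


def highlight(phi, threshold=0.01):
    """Map a contribution to the text-plot color convention (threshold is hypothetical)."""
    if phi > threshold:
        return "red"   # pushes the prediction towards positive sentiment
    if phi < -threshold:
        return "blue"  # pushes the prediction towards negative sentiment
    return "none"      # little to no impact


features = list(WORD_EFFECTS)
phi = shapley_values(features, f)
base_value = f(set())            # toy base value: every word masked out
output_value = f(set(features))  # toy output value: the full text
print(f"base {base_value:.2f} + sum {sum(phi.values()):.2f} = output {output_value:.2f}")
for word, value in phi.items():
    print(f"{word:>10} {value:+.3f} {highlight(value)}")
```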

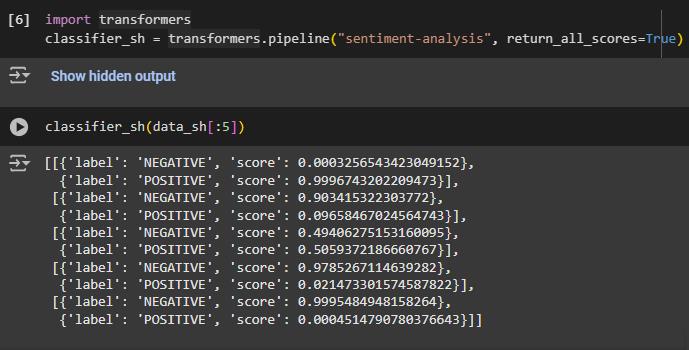

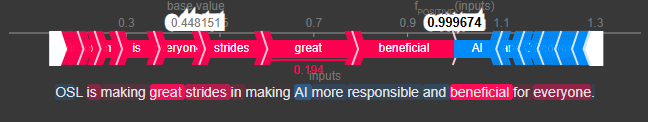

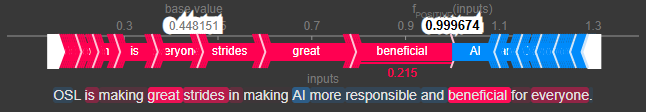

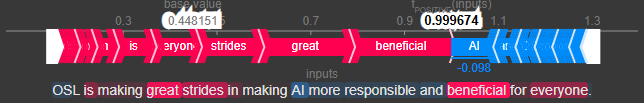

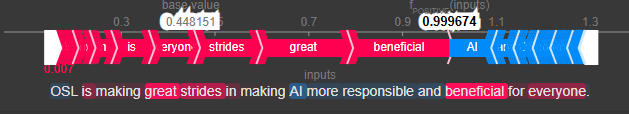

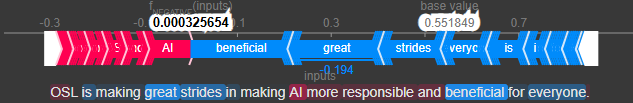

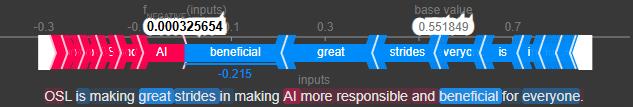

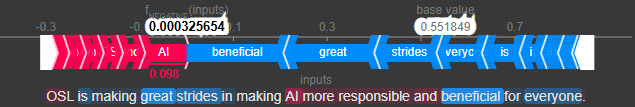

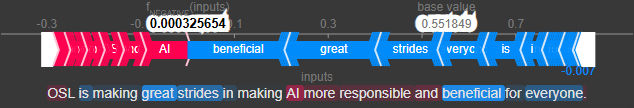

In the above text plot (a hoverable plot where one can see the contribution of each feature to the model's prediction), the cursor has been placed on four words:

- The first two images show the cursor placed on “great (0.194)” and “beneficial (0.215)”, which contribute towards the positive nature of the sentiment.

- The third image shows the cursor placed on “AI (-0.098)”, which shows that the word “AI” pulls the prediction away from positive sentiment.

- The last image shows the cursor placed on “making (0.007)”, which has a negligible impact on the positive nature of the sentiment.

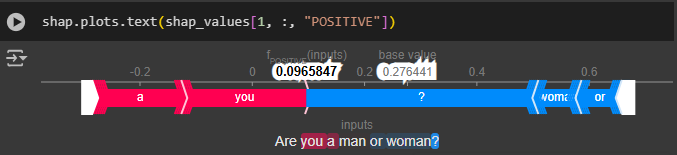

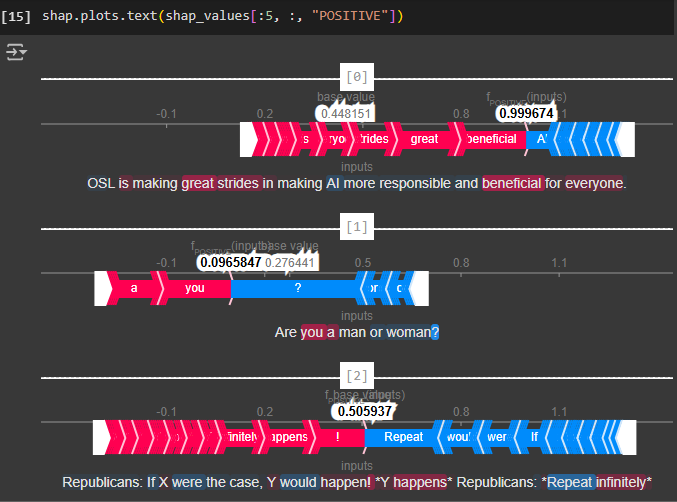

The plot above is a force plot tailored for text data, highlighting each word's contribution towards positive sentiment. Red-highlighted words push the prediction towards positive sentiment, while blue ones push it towards negative sentiment. The grey line, or base value, shows the model's average prediction, which is 0.448. fPOSITIVE(inputs) is the positive score the model predicted for this text, which is 0.99. The sentiment is positive because fPOSITIVE(inputs) is well above the base value. In the same way, we can check how the features contribute to the sentiment for every other instance.
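SHAP explanations are additive: the base value plus the contributions of all the words equals the model's output for that text. Applying this to the rounded numbers in the force plot gives the total push that all the words provide together:

$$\sum_i \Phi_i = f_{\text{POSITIVE}}(\text{inputs}) - \text{base value} \approx 0.99 - 0.448 = 0.542$$

Because 0.99 is itself rounded, the result is approximate.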

Similarly, for the “NEGATIVE” output (how negative a sentence is), the same words have contributions of the same magnitude as for “POSITIVE”, but with the opposite color and sign. The base value (0.55) is also far greater than fNEGATIVE(inputs) (0.00032), which makes it clear that the sentence is strongly positive.
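The mirror-image relationship can be checked on a toy example. The sketch assumes the two outputs are probabilities of a two-class model, so that P(NEGATIVE) = 1 − P(POSITIVE); this is consistent with the two base values above (0.448 and 0.55) adding up to roughly 1. The scorer `p_positive` and its numbers are hypothetical, and this time the Shapley values use the equivalent permutation form (averaging each word's marginal contribution over every order in which the words can be added) rather than the subset formula. Because the constant 1 cancels in every marginal difference, each NEGATIVE contribution comes out as the negative of the POSITIVE one.

```python
from itertools import permutations


def p_positive(words):
    """Hypothetical P(POSITIVE) for a text keeping only `words` (stays in [0, 1])."""
    score = 0.4
    if "great" in words:
        score += 0.3
    if "beneficial" in words:
        score += 0.2
    if "great" in words and "beneficial" in words:
        score += 0.1
    if "AI" in words:
        score -= 0.1
    return score


def p_negative(words):
    """Two-class assumption: P(NEGATIVE) = 1 - P(POSITIVE)."""
    return 1.0 - p_positive(words)


def shapley_values(features, model):
    """Shapley values via the permutation form: average each feature's marginal
    contribution over every order in which the features can be added."""
    orders = list(permutations(features))
    totals = {x: 0.0 for x in features}
    for order in orders:
        present = set()
        for x in order:
            before = model(present)
            present = present | {x}
            totals[x] += model(present) - before
    return {x: t / len(orders) for x, t in totals.items()}


features = ["great", "beneficial", "AI"]
pos = shapley_values(features, p_positive)
neg = shapley_values(features, p_negative)
for word in features:
    print(f"{word:>10}  POSITIVE {pos[word]:+.3f}  NEGATIVE {neg[word]:+.3f}")
print("base values:", p_positive(set()), p_negative(set()))
```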

To see text plots of the model’s predictions for other comments, change the first parameter, which is the index of the comment in the data frame.

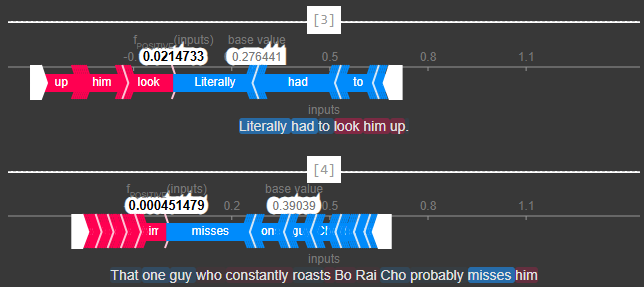

To see text plots for multiple comments, we can pass a start and end index, just as we would when slicing a regular data frame. Here, we plot the model’s predictions for the first five comments using the text plot.

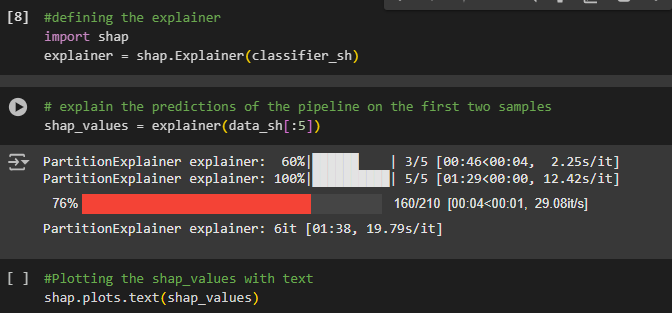

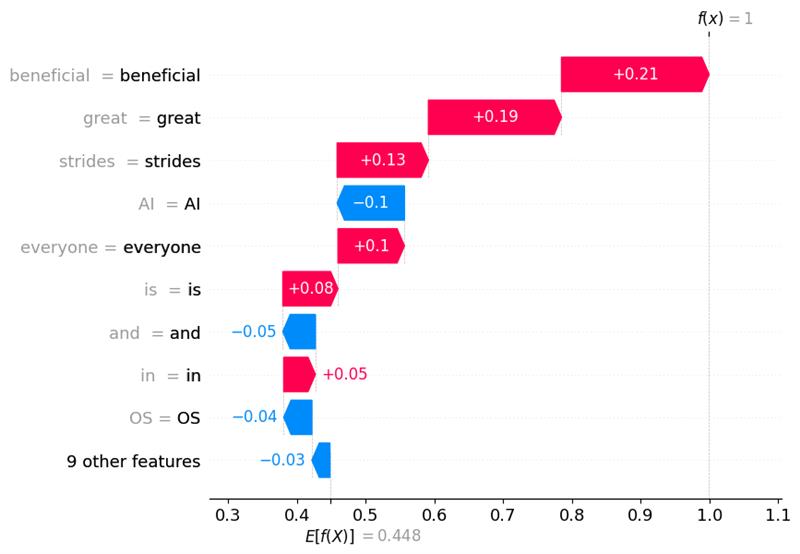

Below we have the SHAP Waterfall Plot, which mimics a waterfall flowing from top to bottom. The plot starts at the model's base value and then shows how each feature pushes the prediction higher or lower toward the final prediction for the specific comment.

- The base value is denoted as E[f(X)] and is the starting point of the waterfall.

- f(x) represents the model’s final prediction value for the specific comment.

- Each bar represents a feature in the comment, and the bars are ordered by their impact on the prediction, with the most impactful ones at the top.
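The construction of the waterfall can be sketched as follows. `waterfall_steps` is a hypothetical helper, not part of SHAP's API, and the contributions are made-up values rather than the ones in the plots. It ranks features by absolute contribution, merges everything beyond the top `max_display - 1` into a single "N other features" bar (our assumption about how SHAP groups the remainder), and then accumulates contributions bottom-up from the base value, so the top bar ends at f(x).

```python
def waterfall_steps(base_value, contributions, max_display=10):
    """Hypothetical helper sketching a SHAP-style waterfall layout.

    Returns (label, start, end) bars in display order, most impactful first.
    Features beyond the top (max_display - 1) are merged into one
    "N other features" bar, and accumulation starts at the base value from
    the bottom bar, so the top bar ends at the final prediction f(x).
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if len(ranked) > max_display:
        kept = ranked[: max_display - 1]
        rest = ranked[max_display - 1:]
        kept.append((f"{len(rest)} other features", sum(v for _, v in rest)))
    else:
        kept = ranked
    bars = []
    running = base_value
    # Walk bottom-up: the grouped bar first, then from least to most impactful.
    for label, value in reversed(kept):
        bars.append((label, running, running + value))
        running += value
    bars.reverse()  # back to display order: top to bottom
    return bars


# Hypothetical contributions for one comment (not taken from the plots above).
contributions = {"good": 0.30, "fast": 0.12, "not": -0.08, "app": 0.02, "the": 0.01, "a": -0.005}
for label, start, end in waterfall_steps(0.45, contributions, max_display=4):
    print(f"{label:>18}: {start:.3f} -> {end:.3f}")
```

Whatever order the bars are drawn in, the final value is always the base value plus the sum of all contributions, which is why the plot ends exactly at f(x).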

The plot above is a waterfall plot for the “POSITIVE” sentiment output, as specified in the code above. Here, too, words like “great (0.19)” and “beneficial (0.21)” contribute towards the positive nature of the sentiment, while words like “AI (-0.1)” contribute towards the negative side. “9 other features” groups the remaining features, which have the least impact on the model’s prediction towards positive sentiment. Since f(x) = 1 is greater than E[f(X)] = 0.45, the sentiment is highly positive.

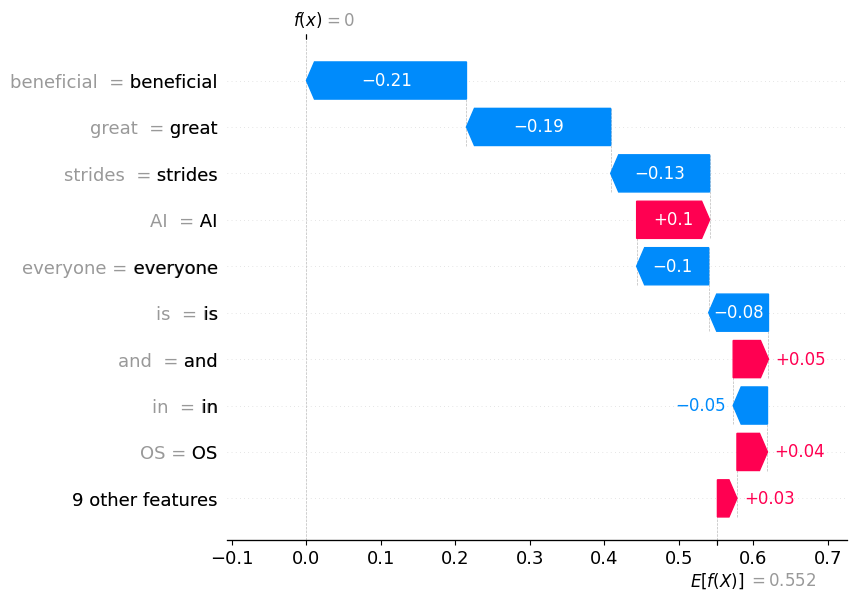

Similarly, for the “NEGATIVE” nature of the sentiment, the plot is a mirror image of the “POSITIVE” one, and since f(x) = 0 is smaller than E[f(X)] = 0.55, it again shows that the sentiment is positive.

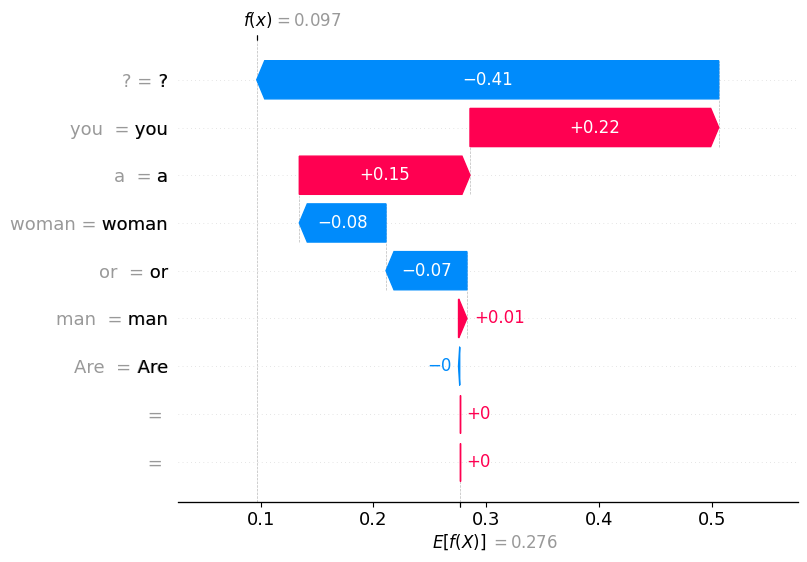

To analyze how the model reached its prediction for other comments, we only need to change the first parameter (the index of the comment in the data frame) to see the corresponding waterfall plot.

Overall, the SHAP waterfall plot helps us to see why the model made a specific prediction, providing insights into the features driving sentiment decision-making.

Key Takeaways

- SHAP (SHapley Additive exPlanations) is an effective and popular technique for clarifying how machine learning models work.

- A model can be explained by its basic math and structure, but as the model becomes more complex, it becomes harder to understand how it makes decisions.

- The SHAP Waterfall plot begins with the model's base value and illustrates how each feature affects the prediction, either increasing or decreasing it, leading to the final prediction for that particular comment.

- The SHAP waterfall plot shows us the reasons behind the model's prediction, giving us a clearer view of the features that influence the sentiment decision.

- As technology grows increasingly complex and LLMs are introduced in AI, understanding the model's results becomes harder.
