AI Fairness: A Deep Dive Into Microsoft's Fairlearn Toolkit

Artificial intelligence (AI) has changed the game across industries, especially in financial services. From automating credit risk assessments to streamlining loan approvals, AI has brought incredible efficiency. But with great power comes great responsibility—and AI fairness is one of the biggest responsibilities AI developers face. Microsoft and EY’s white paper on the Fairlearn toolkit dives deep into this issue, offering practical tools and insights to tackle bias in credit models.

If you are looking for responsible AI services such as AI-driven search and personalization, take a look at OpenSense Labs’ offerings before moving forward.

AI Fairness: The AI Fairness Dilemma

Imagine you apply for a loan and get rejected.

It feels unfair, but what if the decision came from a biased algorithm?

That’s the crux of the problem.

Financial services rely on predictive models like Probability of Default (PD) to assess creditworthiness. However, these models can unintentionally replicate societal biases baked into historical data.

For example, a PD model might reject creditworthy male applicants more often, or more often approve female applicants who later struggle with repayments. These patterns reveal systemic unfairness that tools like Fairlearn aim to fix.

The historical roots of such biases go beyond algorithms. Human decision-making has long suffered from implicit biases. In lending, for instance, racial or gender disparities in credit approvals have existed for decades. While AI offers a chance to standardize decisions, it also risks perpetuating these biases unless AI fairness becomes a core design principle.


AI Fairness: The Fairlearn Toolkit

Fairlearn is an open-source Python toolkit designed to assess and mitigate fairness-related harms in AI systems. It focuses on group fairness, ensuring that outcomes do not disproportionately harm or favour specific groups defined by sensitive attributes like gender, age, or race. Here’s a closer look at its core components:

1. AI Fairness Metrics

Fairlearn provides a suite of AI fairness metrics to evaluate models for both classification and regression tasks. These metrics allow developers to quantify AI fairness in terms of:

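- Demographic Parity: Requires that selection rates (the share of people who receive a positive prediction) be equal across groups.

- Equalized Odds: Requires that both true positive rates and false positive rates be equal across groups, so correct and incorrect classifications are balanced across demographics.

- True Positive Rate Parity: Requires equal true positive rates across groups, so that qualified individuals are treated equally regardless of their group.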
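In symbols, writing $\hat{Y}$ for the model’s prediction, $Y$ for the true outcome and $A$ for the sensitive attribute, the three criteria can be sketched as follows. The summary "difference" measures follow how Fairlearn documents `demographic_parity_difference` and `equalized_odds_difference` (largest minus smallest group value); this notation is ours, not the white paper’s.

```latex
% Per-group selection rate, true positive rate and false positive rate
\mathrm{SR}_a  = P(\hat{Y}=1 \mid A=a), \qquad
\mathrm{TPR}_a = P(\hat{Y}=1 \mid Y=1, A=a), \qquad
\mathrm{FPR}_a = P(\hat{Y}=1 \mid Y=0, A=a)

% Demographic parity: SR_a equal for every group a, summarized as
\Delta_{\mathrm{DP}} = \max_a \mathrm{SR}_a - \min_a \mathrm{SR}_a

% True positive rate parity: TPR_a equal for every group a
% Equalized odds: TPR_a and FPR_a both equal for every group a, summarized as
\Delta_{\mathrm{EO}} = \max\!\Big( \max_a \mathrm{TPR}_a - \min_a \mathrm{TPR}_a,\;
                                   \max_a \mathrm{FPR}_a - \min_a \mathrm{FPR}_a \Big)
```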

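For instance, Fairlearn can analyze a loan dataset to check whether false rejection rates differ between male and female applicants. When the positive class is "will default", a creditworthy applicant who is wrongly rejected counts as a false positive, so this is the False Positive Rate (FPR). If the rates are unequal, that’s a red flag for bias.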
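The per-group comparison described above can be sketched in plain Python. In Fairlearn itself, the same table would come from `MetricFrame` combined with metrics such as `false_positive_rate` and `false_negative_rate` from `fairlearn.metrics`; the sketch below avoids the dependency so the arithmetic is visible. The label convention (1 = defaults, prediction 1 = rejected), the function name and the toy data are all assumptions for illustration.

```python
from collections import defaultdict


def group_error_rates(y_true, y_pred, groups):
    """Per-group false positive rate, false negative rate and selection rate.

    Assumed convention: label 1 means "will default" and prediction 1 means
    the application is rejected, so a false positive is a creditworthy
    applicant who was wrongly rejected.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            counts[group]["tp" if pred == 1 else "fn"] += 1
        else:
            counts[group]["fp" if pred == 1 else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # applicants who did not default
        positives = c["tp"] + c["fn"]  # applicants who defaulted
        rates[group] = {
            "fpr": c["fp"] / negatives if negatives else float("nan"),
            "fnr": c["fn"] / positives if positives else float("nan"),
            # Fraction predicted 1 (here: rejected)
            "selection_rate": (c["tp"] + c["fp"]) / (negatives + positives),
        }
    return rates


# Hypothetical toy data: six male ("M") and six female ("F") applicants.
y_true = [0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1]
y_pred = [1, 1, 0, 0, 1, 1, 1, 0, 0, 0, 1, 0]
groups = ["M"] * 6 + ["F"] * 6

rates = group_error_rates(y_true, y_pred, groups)
for group in sorted(rates):
    print(group, rates[group])

fpr_gap = abs(rates["M"]["fpr"] - rates["F"]["fpr"])
print(f"FPR gap between groups: {fpr_gap:.2f}")  # 0.25
```

In this toy data, creditworthy men are wrongly rejected at twice the rate of creditworthy women (0.50 vs 0.25), which is exactly the kind of gap the metric is meant to surface.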

To see how this plays out beyond lending, consider a hypothetical scenario in healthcare: a predictive model recommends treatment plans. If one demographic consistently receives less aggressive treatment despite equal medical need, the model would fail AI fairness tests like Equalized Odds. Fairlearn’s metrics help identify such disparities.

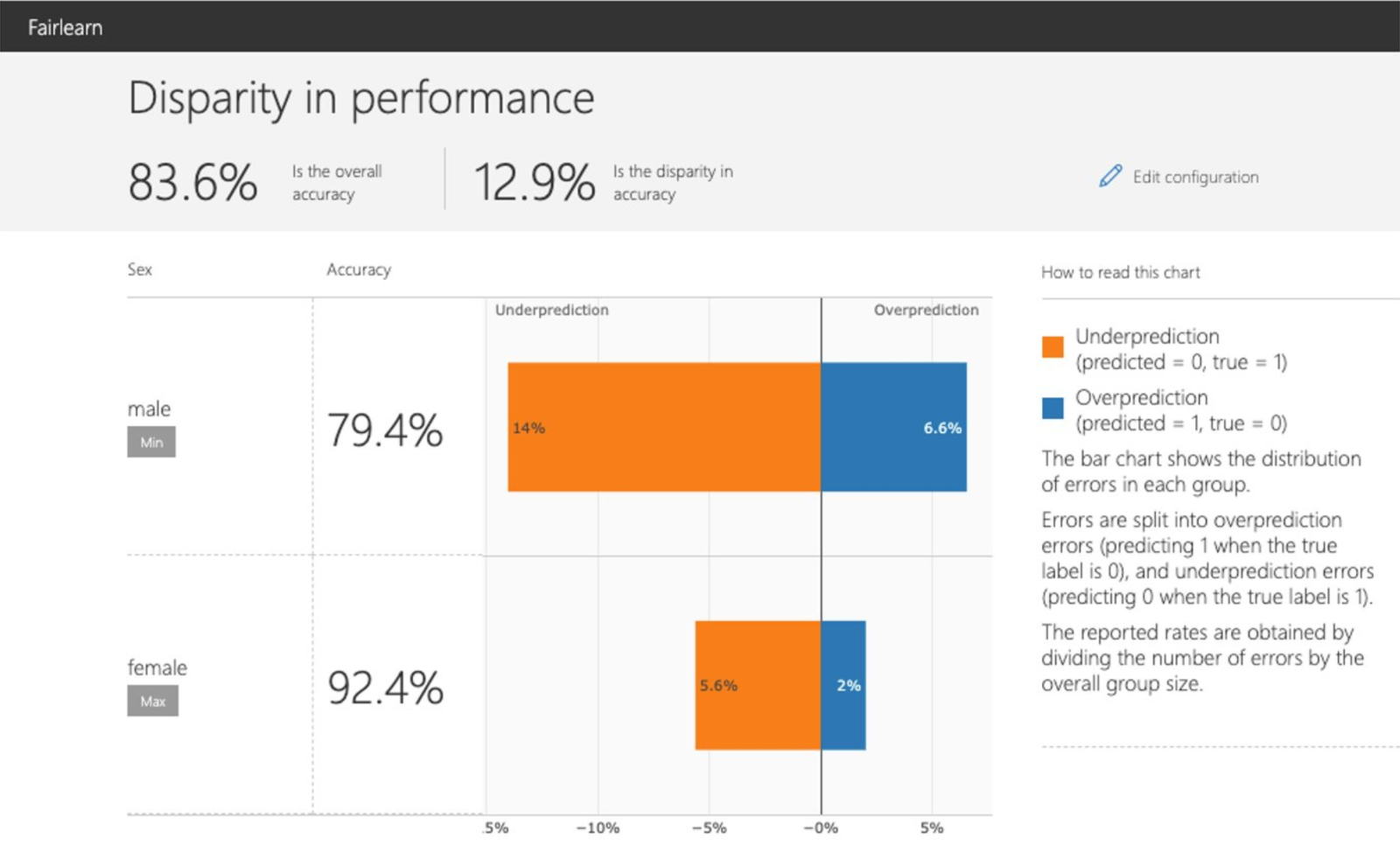

2. Interactive Visualization Dashboard

Fairlearn’s dashboard is a lifesaver for making sense of AI fairness metrics. It allows you to:

- Choose sensitive attributes like gender or age for AI fairness assessments.

- Compare how different models perform on AI fairness versus accuracy.

- Visualize how outcomes vary across demographic groups.

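This makes it easier to spot trade-offs and communicate findings to stakeholders, and the visual layout helps non-technical users grasp the implications of AI fairness metrics, fostering collaboration between technical teams and decision-makers. Note that in recent Fairlearn releases the interactive dashboard is no longer part of Fairlearn itself; it has moved to Microsoft’s separate Responsible AI Toolbox, and Fairlearn now offers matplotlib-based plots of its metrics instead.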

Imagine presenting an AI fairness report to non-technical stakeholders—the dashboard’s clear visuals bridge the gap, ensuring everyone understands the impact of bias and the steps needed to mitigate it.
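The dashboard is a visual tool, but the model comparison it draws can be sketched as plain computation: score each candidate model on accuracy and on a fairness metric, then read them side by side. The helper names, the two candidate models and their predictions below are hypothetical; `eo_difference` mirrors the worst-case equalized odds difference and assumes every group contains both outcomes.

```python
def tpr_fpr(y_true, y_pred):
    """Return (true positive rate, false positive rate); assumes both labels occur."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)


def eo_difference(y_true, y_pred, groups):
    """Larger of the between-group TPR gap and FPR gap (worst case over groups)."""
    tprs, fprs = [], []
    for g in set(groups):
        idx = [i for i, x in enumerate(groups) if x == g]
        tpr, fpr = tpr_fpr([y_true[i] for i in idx], [y_pred[i] for i in idx])
        tprs.append(tpr)
        fprs.append(fpr)
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical labels, group membership and predictions from two candidate models.
y_true = [0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1]
groups = ["M"] * 6 + ["F"] * 6
models = {
    "model_a": [0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0],
    "model_b": [0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0],
}

for name, preds in models.items():
    acc = accuracy(y_true, preds)
    eod = eo_difference(y_true, preds, groups)
    print(f"{name}: accuracy={acc:.3f}  equalized_odds_diff={eod:.3f}")
```

With this toy data, `model_a` is more accurate (0.833 vs 0.667) but has an equalized odds difference of 0.5, while `model_b`’s is 0: the kind of trade-off a side-by-side view makes easy to discuss.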

3. Unfairness Mitigation Algorithms

Fairlearn’s mitigation algorithms fall mainly into two families:

- Postprocessing Algorithms: These adjust the predictions of an already-trained model. For example, the ThresholdOptimizer sets group-specific decision thresholds to reduce disparities without retraining the model. Because the thresholds depend on group membership, it needs the sensitive attribute at prediction time.

- Reduction Algorithms: These retrain a standard model several times on reweighted data, steering it toward a chosen fairness constraint. Tools like GridSearch and ExponentiatedGradient let you balance AI fairness against performance; sensitive attributes are needed during training but not when the resulting model makes predictions in deployment.
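Fairlearn’s `ThresholdOptimizer` (in `fairlearn.postprocessing`) handles this properly, including randomizing between thresholds so a constraint can be met exactly. The sketch below is a deliberately simplified, deterministic stand-in, not Fairlearn’s algorithm: for each group it picks the lowest score threshold that keeps that group’s false positive rate under a shared cap. The function names, the 0.25 cap and the toy scores are hypothetical.

```python
def fpr_at(scores, labels, threshold):
    """FPR when an applicant is rejected (predicted 1) if score >= threshold."""
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    return sum(s >= threshold for s in negatives) / len(negatives)


def group_thresholds(scores, labels, groups, max_fpr):
    """For each group, pick the lowest threshold whose FPR is at most max_fpr.

    A lower threshold rejects more applicants, so the lowest admissible one
    flags as many likely defaulters as possible while keeping wrongful
    rejections of creditworthy applicants under the same cap for every group.
    """
    thresholds = {}
    for g in sorted(set(groups)):
        s = [x for x, gg in zip(scores, groups) if gg == g]
        y = [x for x, gg in zip(labels, groups) if gg == g]
        # Candidates: every observed score, plus one just above the maximum
        # (rejects nobody, so FPR = 0 and a valid threshold always exists).
        candidates = sorted(set(s)) + [max(s) + 1e-9]
        thresholds[g] = next(t for t in candidates if fpr_at(s, y, t) <= max_fpr)
    return thresholds


# Hypothetical predicted default probabilities, outcomes (1 = defaulted) and groups.
scores = [0.9, 0.7, 0.6, 0.4, 0.2, 0.1, 0.8, 0.5, 0.3, 0.3, 0.2, 0.1]
labels = [1, 0, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0]
groups = ["M"] * 6 + ["F"] * 6

thresholds = group_thresholds(scores, labels, groups, max_fpr=0.25)
print(thresholds)  # {'F': 0.5, 'M': 0.6}

# Applying the thresholds requires each applicant's group at prediction time.
decisions = [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
```

On this toy data, a single global threshold of 0.4 would give FPRs of 0.5 for men and 0 for women; the group-specific thresholds keep both at or below 0.25 without retraining anything.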

This flexibility lets teams pick an approach that fits their business and legal requirements, for example depending on whether sensitive attributes may be used at decision time. These algorithms also let organizations compare multiple AI fairness-performance trade-offs before deploying a model.

In a recruitment application, for instance, these algorithms can help narrow gaps in opportunity for underrepresented groups while limiting any loss in how well the model identifies the most qualified candidates.
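Fairlearn’s `GridSearch` (in `fairlearn.reductions`) trains a set of candidate models across a grid of trade-off settings. Choosing among such candidates before deployment can be sketched as two steps: discard any model that another candidate beats on both accuracy and disparity, then take the most accurate remaining model within a disparity tolerance. The candidate names, their scores and the 0.05 tolerance are hypothetical; the tolerance itself is a policy decision, not a technical one.

```python
def pareto_front(candidates):
    """Keep candidates that no other candidate beats on both objectives.

    Each candidate is (name, accuracy, disparity); higher accuracy and lower
    disparity are better.
    """
    front = []
    for name, acc, disp in candidates:
        dominated = any(
            other_acc >= acc and other_disp <= disp
            and (other_acc > acc or other_disp < disp)
            for _, other_acc, other_disp in candidates
        )
        if not dominated:
            front.append((name, acc, disp))
    return front


def pick_model(front, max_disparity):
    """Most accurate candidate whose disparity is within the tolerance, or None."""
    eligible = [c for c in front if c[2] <= max_disparity]
    return max(eligible, key=lambda c: c[1]) if eligible else None


# Hypothetical candidates, e.g. models trained across a grid of trade-off settings.
candidates = [
    ("model_0", 0.86, 0.09),
    ("model_1", 0.85, 0.04),
    ("model_2", 0.83, 0.05),
    ("model_3", 0.80, 0.01),
]

front = pareto_front(candidates)
print([name for name, _, _ in front])  # ['model_0', 'model_1', 'model_3']
print(pick_model(front, max_disparity=0.05))  # ('model_1', 0.85, 0.04)
```

`model_2` drops out because `model_1` is both more accurate and less disparate; the remaining three represent genuine trade-offs for stakeholders to weigh.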

AI Fairness: Fairlearn In Action (Loan Adjudication Case Study)

The white paper’s case study demonstrates Fairlearn’s potential using a dataset of over 300,000 loan applications. The dataset includes sensitive features like gender and age, and the goal was to predict the probability of default (PD). Let’s break down the findings:

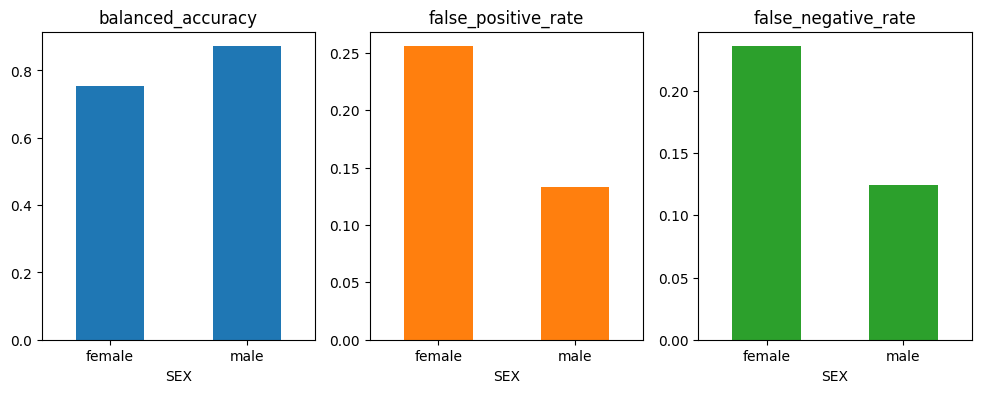

Initial Model Performance

A baseline PD model trained with LightGBM achieved high accuracy but revealed significant AI fairness gaps:

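- False Positive Rate (FPR): Treating "default" as the positive class, creditworthy male applicants were wrongly rejected more often than creditworthy female applicants.

- False Negative Rate (FNR): Female applicants who went on to default were more likely to have been approved (predicted not to default) than male applicants who defaulted.

- Equalized Odds Difference: The larger of the between-group gaps in these error rates was 8%, signalling bias.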

These findings highlight how seemingly high-performing models can harbour deep inequities, underscoring the need for AI fairness assessments as a standard practice.

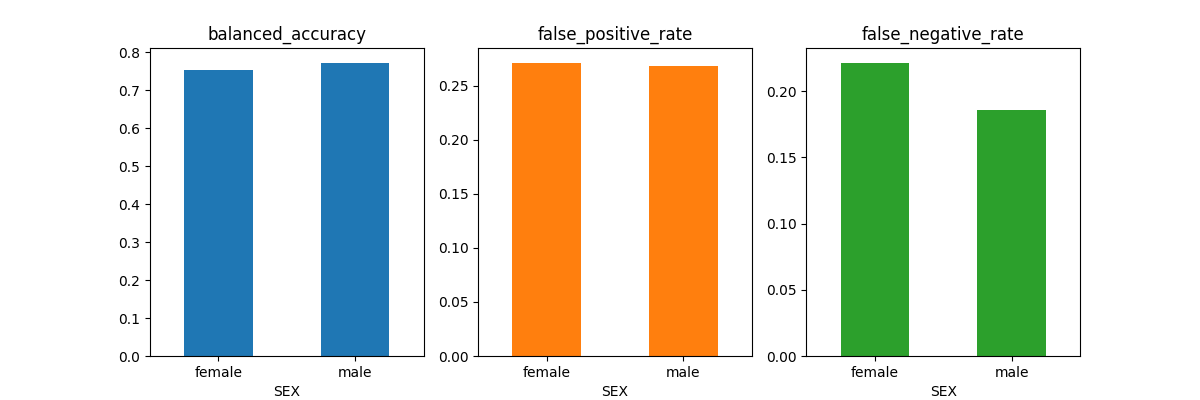

AI Fairness: How Fairlearn Helped

By applying Fairlearn’s mitigation tools, the team achieved remarkable results:

1. ThresholdOptimizer: This postprocessing approach cut the Equalized Odds Difference to 1% while maintaining overall accuracy.

2. GridSearch: This reduction algorithm achieved similar AI fairness improvements without needing sensitive attributes during deployment.

The result?

A fairer model that didn’t compromise business goals. The Fairlearn toolkit empowered the team to navigate AI fairness-performance trade-offs effectively, demonstrating that ethical AI practices are both achievable and practical.

This case study underscores a critical lesson: AI fairness tools like Fairlearn should be integrated into model development pipelines rather than being an afterthought. Proactively addressing bias not only avoids potential legal pitfalls but also builds trust with end-users.

AI Fairness: Navigating Trade-offs With The Human Element

Fairlearn’s tools are powerful, but AI fairness isn’t just about metrics and algorithms. It’s a sociotechnical challenge that requires:

- Transparency: Clearly communicating trade-offs and their implications.

- Ethical Oversight: Working with legal experts to ensure compliance with anti-discrimination laws.

- Continuous Monitoring: Regularly revisiting models to ensure they stay fair as societal norms evolve.
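Continuous monitoring can start as simply as recomputing a disparity metric on each new batch of decisions and raising an alert when it drifts past a tolerance. The sketch below uses the selection-rate gap (the demographic parity difference) because it needs only the model’s decisions; in lending, repayment outcomes arrive much later, so error-rate metrics such as FPR can be added once they do. The batch data, period names and 0.1 tolerance are hypothetical.

```python
def selection_rate_gap(y_pred, groups):
    """Largest minus smallest per-group rate of positive predictions."""
    rates = []
    for g in set(groups):
        preds = [p for p, gg in zip(y_pred, groups) if gg == g]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


def monitor(batches, tolerance):
    """Return (period, gap) for every batch whose gap exceeds the tolerance."""
    alerts = []
    for period, (y_pred, groups) in batches.items():
        gap = selection_rate_gap(y_pred, groups)
        if gap > tolerance:
            alerts.append((period, round(gap, 3)))
    return alerts


# Hypothetical monthly batches of live decisions (1 = rejected) and group labels.
batches = {
    "month_1": ([1, 0, 1, 0, 1, 0, 1, 0], ["M"] * 4 + ["F"] * 4),
    "month_2": ([1, 1, 1, 0, 1, 0, 0, 0], ["M"] * 4 + ["F"] * 4),
}
alerts = monitor(batches, tolerance=0.1)
print(alerts)  # [('month_2', 0.5)]
```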

AI fairness isn’t a one-and-done task. It’s an ongoing commitment to doing the right thing. Organizations must foster a culture of accountability, where AI fairness assessments are integrated into every stage of the AI lifecycle.

Moreover, collaboration across departments is key. Data scientists, ethicists, legal teams, and business leaders must work together to balance technical feasibility with ethical imperatives. Open discussions on AI fairness can uncover blind spots and lead to more holistic solutions.

Before we proceed further, if you're looking for responsible AI services like AI-driven insights and integration, check out the responsible AI offerings from OpenSense Labs.

AI Fairness: Why Fairness Matters

This isn’t just about avoiding lawsuits or meeting regulations. It’s about trust. Financial services impact lives in profound ways, from granting opportunities to ensuring security. By embedding AI fairness into systems, organizations build credibility and contribute to a more equitable society.

Unfair AI systems risk eroding public trust, which can have far-reaching consequences. In an age where transparency and accountability are increasingly demanded, AI fairness becomes a competitive advantage. Companies that prioritize AI fairness are better positioned to earn customer loyalty and maintain their reputations.

Fairlearn shows that AI fairness doesn’t have to come at the expense of performance. It’s a toolkit for organizations ready to lead the way in ethical AI. Beyond financial services, the principles and tools of Fairlearn can be applied to healthcare, hiring, education, and countless other domains where equitable decision-making is critical.

For example, in hiring, Fairlearn can help detect and reduce cases where qualified candidates from underrepresented groups are overlooked because of historical biases in recruitment data. In education, it can help promote equitable access to resources like scholarships or advanced learning programs.


AI Fairness: Practical Steps for Integrating Fairness

For organizations looking to adopt AI fairness-focused practices, the following steps can help:

- Start Early: Incorporate AI fairness assessments during the data collection and preprocessing stages. Bias in raw data can propagate through the entire pipeline.

- Educate Teams: Ensure everyone involved in AI development understands the importance of AI fairness and the tools available to achieve it.

- Leverage Toolkits: Use Fairlearn and similar frameworks to evaluate and mitigate bias throughout the AI lifecycle.

- Engage Stakeholders: Include diverse perspectives in discussions about AI fairness to ensure the solutions address real-world concerns.

- Iterate and Improve: Treat AI fairness as a continuous goal rather than a fixed milestone. Regularly update models to reflect changes in societal values and expectations.
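A first "start early" check can run before any model is trained: how each group is represented in the data, and how often each group carries the positive label. Large differences do not prove bias on their own, but they flag where a model is likely to learn historical patterns. The function name and the toy labels below are hypothetical.

```python
from collections import Counter


def data_profile(labels, groups):
    """Share of the dataset and positive-label base rate for each group."""
    counts = Counter(groups)
    positives = Counter(g for y, g in zip(labels, groups) if y == 1)
    total = len(groups)
    return {
        g: {"share": counts[g] / total, "base_rate": positives[g] / counts[g]}
        for g in sorted(counts)
    }


# Hypothetical training labels (1 = defaulted) and group membership.
labels = [1, 0, 0, 0, 0, 0, 1, 1, 0, 0]
groups = ["M"] * 6 + ["F"] * 4

profile = data_profile(labels, groups)
for group, stats in profile.items():
    print(group, stats)
```

Here one group makes up 40% of the data yet has a recorded default rate three times higher than the other, a pattern worth investigating before it is baked into a PD model.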

Embedding these steps into organizational practices builds a foundation for responsible AI development. Beyond technical fixes, fostering a culture that values AI fairness ensures sustainable progress.

Key Takeaways

Microsoft and EY’s white paper is more than a technical guide; it’s a call to action. Fairlearn proves that fairness is achievable without sacrificing performance. By embracing tools like this, organizations can build AI systems that are accurate, responsible, and fair.

At OpenSense Labs, we take fairness seriously in every project we undertake. We understand the profound impact our models have on individuals and communities, and we make it our mission to prioritize equity and transparency. Tools like Fairlearn play a key role in helping us build systems that are not only efficient but also equitable and responsible.

As we move forward, fairness in AI will only grow in importance. By adopting best practices and leveraging innovative tools, we can ensure AI serves as a force for good, empowering individuals and creating opportunities for all.
