Rapid and efficient delivery of software to customers is vital for an organization that wants to establish an indelible presence in the market, and an uninterrupted continuous deployment process makes the difference. The top performers in distributed systems deploy thousands of times a day without breaking anything. Netflix and Expedia are two such elite performers.

What makes a few enterprises pull ahead in the game? The continuous delivery process certainly varies from one organization to another, but the better performers follow a set of practices that keep their continuous delivery (CD) cycle intact. What experiments can be made on CD processes to get the most out of them?

James Governor, co-founder of RedMonk, put forward a new term, Progressive Delivery, at QCon London (2019). Progressive delivery ensures the strength and flexibility of a system through numerous creative experiments.

Let’s dive deeper and explore where the term progressive delivery came from, what it means, and how to adopt it in your business. But first, the history.

Evolution of Progressive Delivery

Software delivery has progressed from the Waterfall model to progressive delivery. Let us look at how a series of events paved the way.

Background

The software development process has evolved alongside programming languages, and these changes happened gradually as the mechanics of software delivery changed. Earlier, customers had to buy physical media, such as compact discs (CDs), to get their hands on new software. To suit this delivery model, software development was organized into long cycles that mapped to hardware design, fabrication, and validation intervals. The Waterfall model fit this staged process very well.

Later, decoupling software from hardware led to an all-round shift in thinking about how to deliver the best software. Teams realized that development cycles could move faster than hardware cycles. This philosophy was incorporated into the Waterfall model by gathering feedback through beta testing in the validation stage, with the fundamental objective of increasing customer value. However, it limited how much could change, because feedback only arrived once the validation phase began.

Bringing Forth the Agile

Incorporating feedback and change became the fundamental premise of the Agile and Scrum delivery models. Both models start from a set of actions or a plan and break it into small, changeable tasks or stories to reach the intended goal. Their main benefit was the ability to make minor corrections while the software was still being developed. The Waterfall model, by contrast, left teams with only two choices: stay on the plan and wait for the next cycle, or abandon work that was no longer relevant.

The industry also evolved, and software delivery shifted from packaged software to Software as a Service (SaaS). Moving applications to the cloud changed the dynamics of software delivery.

Physical delivery largely ceased, so updates could be shipped at any time and used from anywhere. Development teams delivering updates and customers consuming them immediately streamlined the continuous delivery model. However, immediate updates also mean immediate security risks, and finding bugs has always taken longer than fixing them.

‘Flag’ the Code

Moving to a continuous delivery model required infrastructure that could reduce the risk of shipping hazardous code. ‘Feature flagging’ came to the rescue by separating code deployment from the actual feature release.
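To make the separation between deployment and release concrete, here is a minimal Python sketch of a feature flag. The `FeatureFlags` class, the `new_checkout` flag, and the `checkout_page` function are hypothetical; a production system would read flags from a configuration service rather than an in-memory dictionary, so they can change without a redeploy.

```python
class FeatureFlags:
    """Hypothetical in-memory flag store, standing in for a config service."""

    def __init__(self) -> None:
        self._flags = {}

    def set(self, name: str, enabled: bool) -> None:
        self._flags[name] = enabled

    def is_enabled(self, name: str) -> bool:
        # Unknown flags default to off, so newly deployed code stays dark.
        return self._flags.get(name, False)


flags = FeatureFlags()


def checkout_page() -> str:
    # Both code paths are deployed; the flag decides which one is released.
    if flags.is_enabled("new_checkout"):
        return "new checkout"
    return "old checkout"


print(checkout_page())  # old checkout (deployed, but not released)
flags.set("new_checkout", True)
print(checkout_page())  # new checkout (released without a redeploy)
```

Turning the flag off again restores the old behavior instantly, which is what makes a flag a cheap safety valve.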

Then came a major architectural shift in the industry: microservices, globally distributed apps, and data science entered the application delivery process and changed, once again, how teams deliver software. Changes and updates were first distributed to a specific group of people so that their impact could be observed, and any needed adjustments were made before rolling them out further. Development and operations teams also began turning to data science teams to learn how these partial rollouts were being received and adopted.

The Realm of Blast Radius

Progressive delivery was conceived around the idea that features and updates are released in a way that manages the impact of change. Think of a new code release as the epicenter: each new group of users exposed to it represents a growing blast radius spreading out from the initial impact.

This requires a system that separates code deployment from feature release and controls the release like a valve or gate that can be opened and closed gradually. It also requires control points that collect feedback (event) data about how features are accessed and used. Progressive delivery emerged as a refined approach for teams already running a continuous delivery pipeline, letting them build on the cost benefits, tooling, and mechanics of continuous delivery.

What is Progressive Delivery?

Progressive delivery is the technique of delivering changes to small, low-risk audiences first and then expanding to larger, riskier audiences. For example, a change is first rolled out to 1% of users, then progressively to more users (say, 5%, then 7%) before being released to everyone.
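One common way to implement percentage rollouts, assumed here rather than prescribed by any particular tool, is to hash each user ID into a stable bucket between 0 and 100 and expose the feature to users whose bucket falls below the current percentage. The function names and the `new_checkout` feature below are hypothetical.

```python
import hashlib


def bucket(user_id: str, feature: str) -> float:
    """Map a user to a stable value in [0, 100) for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    # Reduce the first 8 bytes to 0..9999, i.e. 0.00..99.99 in 0.01% steps.
    return int.from_bytes(digest[:8], "big") % 10_000 / 100


def in_rollout(user_id: str, feature: str, percent: float) -> bool:
    """True if this user falls inside the current rollout percentage."""
    return bucket(user_id, feature) < percent


users = [f"user-{i}" for i in range(10_000)]
for percent in (1, 5, 7, 100):
    exposed = sum(in_rollout(u, "new_checkout", percent) for u in users)
    print(f"{percent:>3}% target: {exposed} of {len(users)} users exposed")
```

Because a user's bucket never changes, everyone exposed at 1% is still exposed at 5% and 7%, so the blast radius only grows outward. Salting the hash with the feature name keeps different features from always landing on the same early users.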

Progressive delivery applies along two dimensions: environments and users. For environments, teams can deliver progressively using canary builds or blue-green deployments to limit exposure when new code is deployed. For users, progressive delivery limits which users are exposed to a change.

At each stage of progressive delivery, metrics are collected and the health of the application is measured. If anything goes wrong, the application is automatically rolled back to its previous stable state.
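The stage-by-stage health check and automatic rollback can be sketched as a loop. This is a simplified illustration under stated assumptions: `set_traffic` and `error_rate` are hypothetical hooks into a traffic router and a metrics system, health is reduced to a single error-rate threshold, and a real controller would observe each stage over a time window before widening exposure.

```python
from typing import Callable, Sequence

# Hypothetical stages mirroring the 1% -> 5% -> 7% -> 100% example.
STAGES: Sequence[float] = (1, 5, 7, 100)


def progressive_rollout(
    set_traffic: Callable[[float], None],
    error_rate: Callable[[], float],
    max_error_rate: float = 0.01,
    stages: Sequence[float] = STAGES,
) -> bool:
    """Widen exposure stage by stage; roll back if a health check fails.

    Returns True if the change reached every stage, False if rolled back.
    """
    for percent in stages:
        set_traffic(percent)       # open the "valve" a little wider
        observed = error_rate()    # measure health at this stage
        if observed > max_error_rate:
            set_traffic(0)         # automatic rollback to the stable version
            return False
    return True


# Simulated environment: errors spike once 7% of traffic hits the new version.
history = []
fake_errors = iter([0.002, 0.004, 0.05])
ok = progressive_rollout(history.append, lambda: next(fake_errors))
print(ok, history)  # False [1, 5, 7, 0]
```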

Elements of Progressive Delivery

James Governor proposed the four building blocks of progressive delivery:

- User segmentation

- Traffic management

- Observability

- Automation

Advantages of Progressive Delivery

Teams that adopt progressive delivery gain the following major benefits:

- The ability to quality-control features as they are distributed.

- The ability to plan for failures, since only a small number of users are affected if a feature doesn't work correctly.

- Feedback can be collected from a group of expert users to refine changes before they are released to all customers.

- Development teams can spend more time adding value and less time managing risk.

- Non-engineering teams, such as marketing or sales, can release features to users on their own business timelines.

Progressive Delivery Sore Points

To keep progressive delivery seamless, always keep an eye on the following four metrics, known as Google’s golden signals:

- Latency: The time an application takes to serve a request.

- Traffic: The demand on the system, for example measured as HTTP requests per second.

- Errors: The rate of requests that fail, for an application as a whole or for a single feature.

- Saturation: How "full" the system is, that is, how close its most constrained resources are to their capacity.
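As a rough sketch of what monitoring these signals involves, the Python function below summarizes one observation window of requests. The `Request` record, the 95th-percentile choice, and the use of busy worker slots as the saturation measure are illustrative assumptions; real deployments typically pull these numbers from a metrics system.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Request:
    latency_ms: float
    failed: bool


def golden_signals(requests: list, window_s: float,
                   in_flight: int, capacity: int) -> dict:
    """Summarize one observation window using the four golden signals."""
    latencies = [r.latency_ms for r in requests]
    return {
        # Latency: 95th-percentile time to serve a request
        # (quantiles needs at least two samples on older Pythons).
        "p95_latency_ms": quantiles(latencies, n=20, method="inclusive")[18],
        # Traffic: demand on the system, here requests per second.
        "traffic_rps": len(requests) / window_s,
        # Errors: fraction of requests that failed.
        "error_rate": sum(r.failed for r in requests) / len(requests),
        # Saturation: how full a constrained resource is; here, hypothetically,
        # busy worker slots out of the total available.
        "saturation": in_flight / capacity,
    }


window = [Request(10, False), Request(20, False), Request(30, True), Request(400, False)]
print(golden_signals(window, window_s=2.0, in_flight=3, capacity=4))
# {'p95_latency_ms': 344.5, 'traffic_rps': 2.0, 'error_rate': 0.25, 'saturation': 0.75}
```

During a canary stage, you would compute these values separately for the canary and the stable version and compare them, rather than judging absolute numbers alone.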

Approaches to Bring Progressive Delivery in an Application Development Process

Progressive delivery can’t be done haphazardly. Teams follow a set of practices to achieve it in an application development environment. The main methods are:

- Canary Testing: A change is first released to a small number of users, so if it contains a bug, only that small percentage of users is affected. Canary tests are minimal tests that quickly verify whether everything a user depends on is ready.

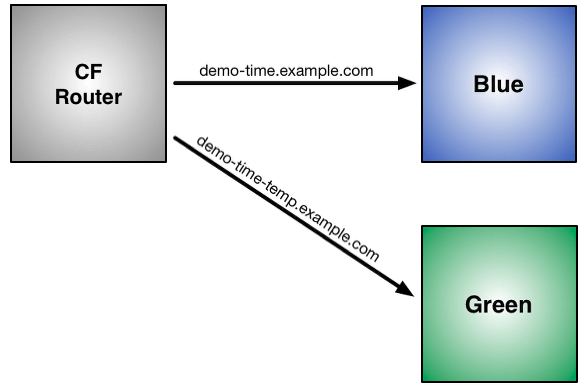

- Blue-Green Deployments: Two identical production environments are run, but only one is live at a time. The live environment (say, blue) serves production traffic while the new service or update is deployed to the idle one (green); once it is verified, traffic is switched to green. If something goes wrong, traffic can quickly be switched back to blue. This reduces both downtime and risk, and lets teams test in production.

- A/B Testing: Two variants of a user-interface element are shown to two different groups of users to see which one performs better. This is an efficient way to test changes to usability, or anything else that can affect conversion.

- Feature Flags: Also known as feature toggles, they allow a feature to be hidden, enabled, or disabled at runtime, keeping it out of the user interface until it is ready.

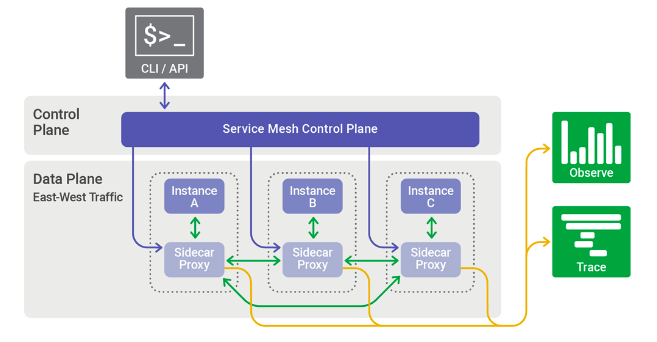

Source: Medium/Sicara

- Service Mesh: Placed between containers and services, a service mesh remembers what worked last, so it can retry a failed call or fall back to the last available response. It also supports canary routing to a specific user segment and failover, orchestrating services through advanced routing and traffic shifting.

Source: Nginx

- Observability: Observability covers tracing, logging, and metrics, giving developers a clear view of how new services will behave and be managed in production.

- Chaos Engineering: The practice of deliberately introducing failure: define what the normal (steady) state of a distributed system looks like, inject real failures to test its resilience, and fix the weaknesses this exposes.
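The blue-green switch described in the list above can be modeled with a small in-memory router. This is a hypothetical sketch: in practice the `switch` step is usually a load balancer or DNS change, and which color is called "blue" or "green" is only a naming convention.

```python
from typing import Dict, Optional


class BlueGreenRouter:
    """Two identical environments; only the live one receives traffic."""

    def __init__(self) -> None:
        self.versions: Dict[str, Optional[str]] = {"blue": "v1", "green": None}
        self.live = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version: str) -> None:
        # New code lands on the idle environment; users are unaffected.
        self.versions[self.idle] = version

    def switch(self) -> None:
        # Cut all traffic over in one step (e.g. by repointing a load balancer).
        self.live = self.idle

    def route(self) -> Optional[str]:
        return self.versions[self.live]


router = BlueGreenRouter()
router.deploy_to_idle("v2")
print(router.route())  # v1 (green holds v2, but blue is still live)
router.switch()
print(router.route())  # v2
router.switch()        # rollback: blue still holds v1
print(router.route())  # v1
```

Keeping the previous version running on the idle side is what makes rollback a single, fast switch.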

Tools to Perform Progressive Delivery

The following tools make progressive delivery easier:

- Flagger (by Weaveworks): A Kubernetes operator that automates the promotion of canary deployments, relying on a service mesh or ingress controller for traffic shifting.

- SwitchIO: SwitchIO is a fast asynchronous control system that facilitates the understanding of error patterns in clusters.

- Petri: Petri (Product Experiment Infrastructure) is a comprehensive experiment system from Wix that supports A/B testing and feature toggles.

- LaunchDarkly: A feature-management platform whose feature toggles can be targeted at specific user segments.

Final Note

To conclude, progressive delivery is a process that aligns the user’s and the developer’s experience. Often described as the next step after continuous integration and continuous delivery, it pushes changes into production iteratively.

What’s your opinion on progressive delivery? Share your views on our social media channels: Facebook, LinkedIn, and Twitter.
