For some reason, you aren’t getting good traffic to your site. Before diving into an intensive content review, consider that you may have made a great website for people, but you haven’t caught the attention of search engines.

What Is SEO and Why Is It So Crucial?

Search engine optimization is a set of rules and practices that help business owners improve their online visibility.

Say someone owns a hiking gear business and has 100 rivals in the same region. By following certain optimization rules, they can get ahead of everyone in Google results for hiking gear, eventually establishing a firm position in their market and catching numerous eyes.

That’s all well and good, but there is more:

- 10/10 people who look for products or services online mostly trust the first 6 results because, from a consumer standpoint, Google does justice to the best vendors. Think about it: in the early 2000s, if you had to call a technician, you would choose someone at the beginning of your telephone directory rather than flipping two pages.

- SEO has one of the best ROIs in advertising; it rewards you ten times more than traditional advertising practices can. You don’t have to allocate revenue to pay for square meters of space on a billboard or for seconds of television airtime. From my standpoint, traditional advertising is sometimes the least efficient option. Practicing fair search engine optimization advertises you to exactly the right audience.

- Over this decade, marketing strategies and practices have pivoted to online. 82% of marketers believe SEO has overhauled advertising while enabling precision. Also, 72% of customers who did a local search for products online visited a store within 5 miles. (Source: web strategies)

- In 2018, the average firm is expected to allocate 41% of their marketing budget to online, and this rate is expected to grow to 45% by 2020. (Source: web strategies)

I am pretty sure no other SEO statistics will persuade you to advertise the 2017 way.

Does Your Content Management System Matter?

Your CMS acts as a catalyst when it comes to search engine optimization. Whichever one you are running right now, you should know that not every CMS is designed with search engine optimization in mind. In fact, most are built on dynamic databases, and not all of them are SEO-friendly. Picking the wrong one can lead to multiple costly pitfalls down the road.

What should it necessarily provide?

Customizable Page Titles and Metadata

It should let you customize fields like page titles, meta descriptions, the meta keywords tag, and the H tags. It should also be capable of automatically populating these fields according to SEO norms and best practices, for example by auto-generating meta descriptions while keeping them within 150 characters.
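As a rough illustration of the auto-generation idea, here is a minimal Python sketch that trims a summary to 150 characters at a word boundary. The function name `make_meta_description` is hypothetical and this is not how any particular CMS implements it.

```python
def make_meta_description(summary: str, limit: int = 150) -> str:
    """Collapse whitespace and trim the summary to at most `limit` characters."""
    text = " ".join(summary.split())
    if len(text) <= limit:
        return text
    # Reserve three characters for the ellipsis, then cut back to the last full word.
    cut = text[: limit - 3]
    if " " in cut:
        cut = cut.rsplit(" ", 1)[0]
    return cut.rstrip(" ,;:.") + "..."


if __name__ == "__main__":
    body = "Drupal ships with many SEO-friendly features out of the box. " * 5
    description = make_meta_description(body)
    print(len(description), description)
```

Cutting at a word boundary keeps the snippet readable in search results instead of ending mid-word.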

Breadcrumb Navigation

Navigational drop-down menus are crucial internal link structures that silently contribute to search engine optimization. They establish relevancy and hierarchy across your website, which helps search engines index your pages from the start. Your CMS should also provide easy customization of navigation menus.

Avoid JavaScript-based navigation menus in your CMS, as Google and many other search engines can have issues with them.

Contextual and Readable URLs

The biggest issue with CMS systems is that many do not produce only one unique URL for any given page of content. For example, if you have a product page where the URL depends on the navigation path, you may have issues if the product appears in more than one category.

Website Search

Site search is a great add-on for SEO and user experience throughout the website.

You can monitor which keywords visitors are searching for on your website, which helps you improve content variety and accessibility. Researching these keywords can help you strategize your content. Simply having a search feature is not enough, though; it should also be blazing fast.

Control Content Duplication

Your CMS should allow you to choose what to index and what to archive. Sometimes, you might have two articles that are somewhat identical to each other, which search engines might take for duplicates. It should also code archive sections and category pages, which often contain very similar content, so that search engines can clearly identify them.

While there is a whole lot more to consider depending on the scale of your business, you should make sure your CMS provides these necessary items.

Since Drupal was built with SEO in mind, some functionalities come by default and others can be integrated into the platform over time. Drupal is reportedly the most search engine friendly content management system. The Drupal community also provides a checklist to analyze the health and SEO of your Drupal website.

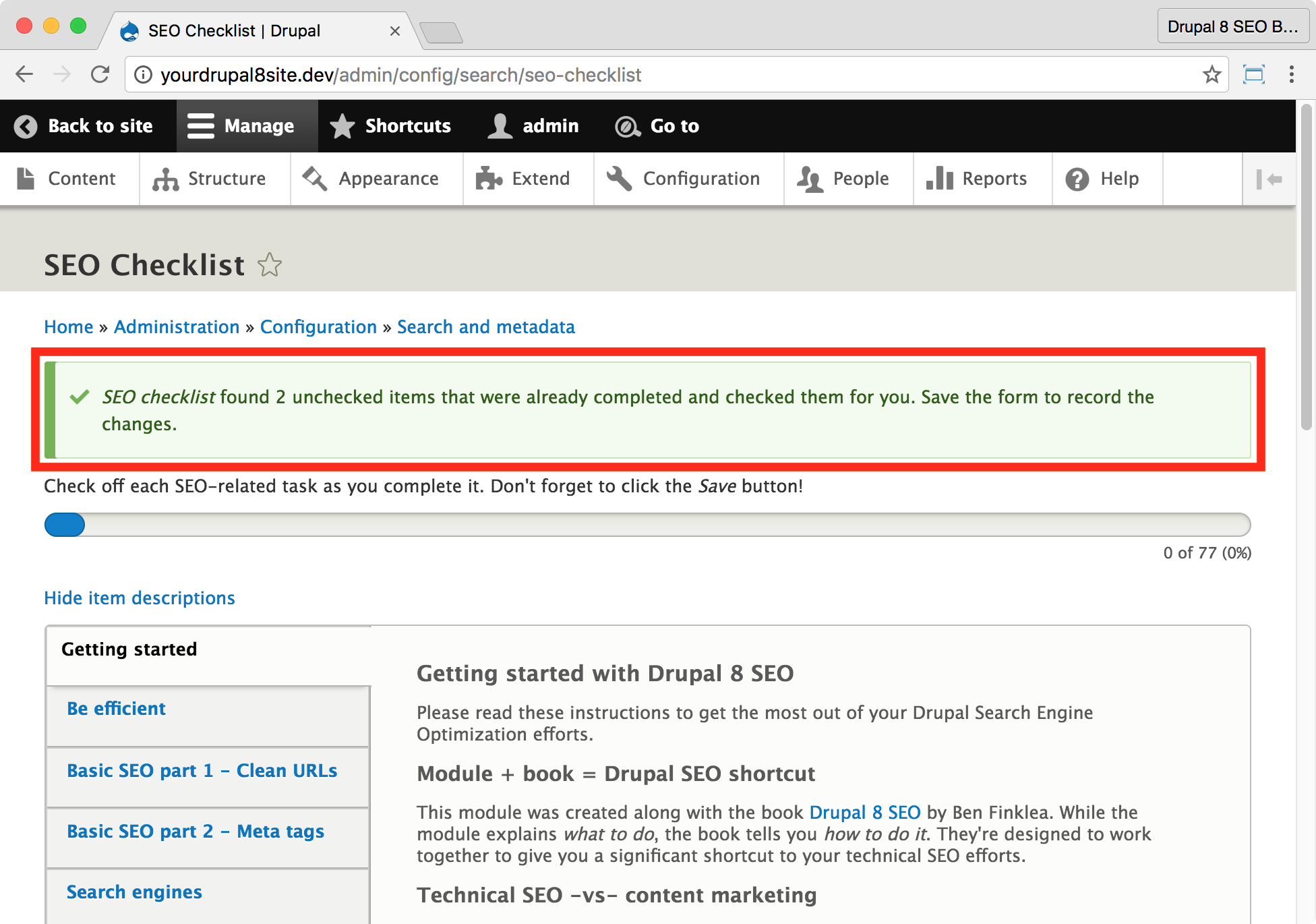

Drupal SEO Checklist

“Drupal SEO checklist is the most powerful Drupal module that does nothing.” - Robert Shea, IBM

The Drupal SEO Checklist uses best practices to run a thorough check on your website's search engine optimization capabilities. It creates a to-do list of functional modules and tasks which need to be undertaken to enhance the website's SEO. It is also regularly updated by the community to provide you with the latest trends and fresh practices to make search engine optimization a hassle-free job.

It breaks the tasks down into functional needs like Title Tags, Paths, Content and much more. Next to each task is a link to download the module from Drupal.org (D.o) and a link to the proper admin screen of your website so that you can configure the settings perfectly.

It is wonderful to have such a tool, which also tracks the activities already undertaken along with a proper date and time record.

More SEO Modules To Elevate Your Drupal Website

It is time for the SEO modules you must immediately implement on your Drupal website to make it super search engine friendly.

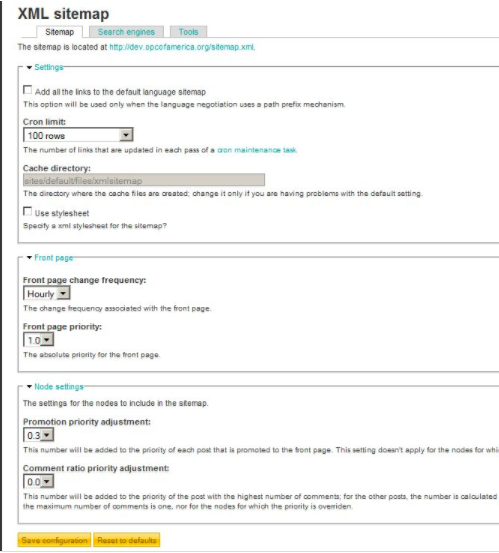

XML Sitemap

Once installed, the Drupal XML sitemap module automatically provides your website with a sitemap and makes it recognizable by search engines. Search engines will find it easy to crawl your website in a tree-like manner.

Drupal property owners often forget or underestimate the need for a sitemap. Instead, they should understand how important it is to help search engines understand the hierarchy of a website.

XML Sitemap In Action

The best part is that you have the option to include or exclude certain pages from the sitemap of your website, such as pages you don’t care about anymore and that do not need to be indexed.

Pathauto

URLs play a fair role in your search engine optimization efforts. Drupal has a URL aliasing option, available in Drupal core, to help define a neat URL structure which conveys the context of the landing page.

URL aliasing ensures you communicate something to the visitors instead of something which is far from their level of understanding.

For example, opensenselabs.com/node/92 conveys nothing. Instead, URLs like "www.opensenselabs.com/blog/articles/drupal-8-seo-guide" make absolute sense to the user.

The Pathauto module automates the manual URL structuring procedure, so things get easier for website administrators or editors who publish in bulk every week.
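To make the aliasing idea concrete, here is a minimal Python sketch that turns a node title into a readable path. It only illustrates the concept and is not Pathauto's actual implementation; the function names and the `blog/articles` prefix are assumptions chosen to match the example URL above.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Lowercase the title, drop non-ASCII marks, and join words with hyphens."""
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def build_alias(title: str, prefix: str = "blog/articles") -> str:
    """Combine a fixed prefix with the slugified title (hypothetical pattern)."""
    return f"/{prefix}/{slugify(title)}"


if __name__ == "__main__":
    print(build_alias("Drupal 8 SEO Guide"))  # /blog/articles/drupal-8-seo-guide
```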

Redirect

Redirect happens to be one of the best Drupal SEO modules, and I suggest every Drupal property owner have it. Redirect prevents users from landing on a 404 page, which happens when content has been moved to a new URL.

The redirect module allows you to guide Drupal to transfer the traffic approaching opensenselabs.com/article to opensenselabs.com/blog/article. Smooth.

Also, having expired or dead links hanging around your website is never a good search engine optimization practice. The Redirect module not only directs traffic to the fresher URL but also keeps count of people hitting redirects, so that you get an estimate of how much demand there is for a removed or renamed landing page on your website.
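The two behaviours described here, sending visitors to the new URL and counting how often each redirect is hit, can be sketched in a few lines of Python. The `RedirectTable` class is hypothetical and only models the idea; it is not the Redirect module's code.

```python
from collections import Counter


class RedirectTable:
    """Maps old paths to new ones and counts how often each redirect is used."""

    def __init__(self):
        self._targets = {}
        self.hits = Counter()

    def add(self, old_path: str, new_path: str) -> None:
        self._targets[old_path] = new_path

    def resolve(self, path: str):
        """Return (301, new_path) for a known redirect, else (200, path)."""
        if path in self._targets:
            self.hits[path] += 1
            return 301, self._targets[path]
        return 200, path


if __name__ == "__main__":
    table = RedirectTable()
    table.add("/article", "/blog/article")
    print(table.resolve("/article"))  # (301, '/blog/article')
    print(table.hits["/article"])     # 1
```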

Global redirect

A landing page you relocated to another search engine friendly URL unfortunately retains the older one as well. Search engine crawlers see these as two identical landing pages with different URLs, which will never help you get into their good books and may get your website flagged for duplicate content.

Global redirect helps you overcome this burdensome problem. On the other hand, it also keeps track of all the broken pages and redirects page requests to your home page, ensuring a fine visitor experience and a good record with search engines.

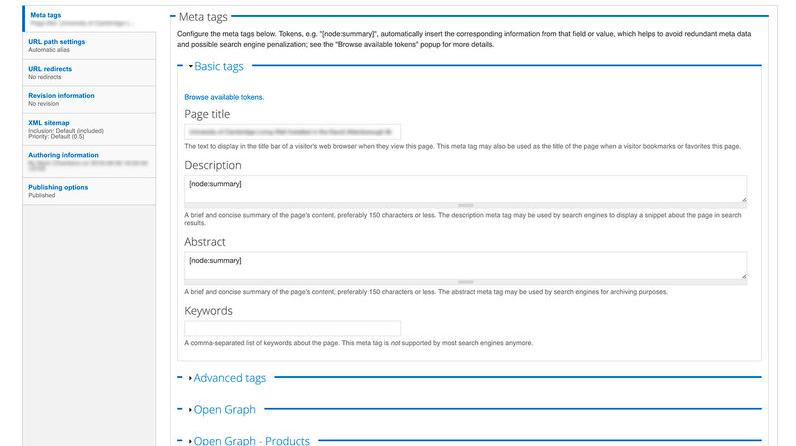

Meta tag

The meta tag module lets you automatically add meta descriptions and meta keyword tags to your nodes. This proves very helpful when your website releases content frequently every day.

Several website owners resort to the unfair practice of stuffing keywords into their meta tags to boost their search engine ranking, but fortunately that doesn’t matter anymore. You can provide the right summary and tags, along with your geographical location as well. The most helpful meta rises to the top.

For example, your meta description will automatically be generated from your node summary, and your page title will serve as your title tag.

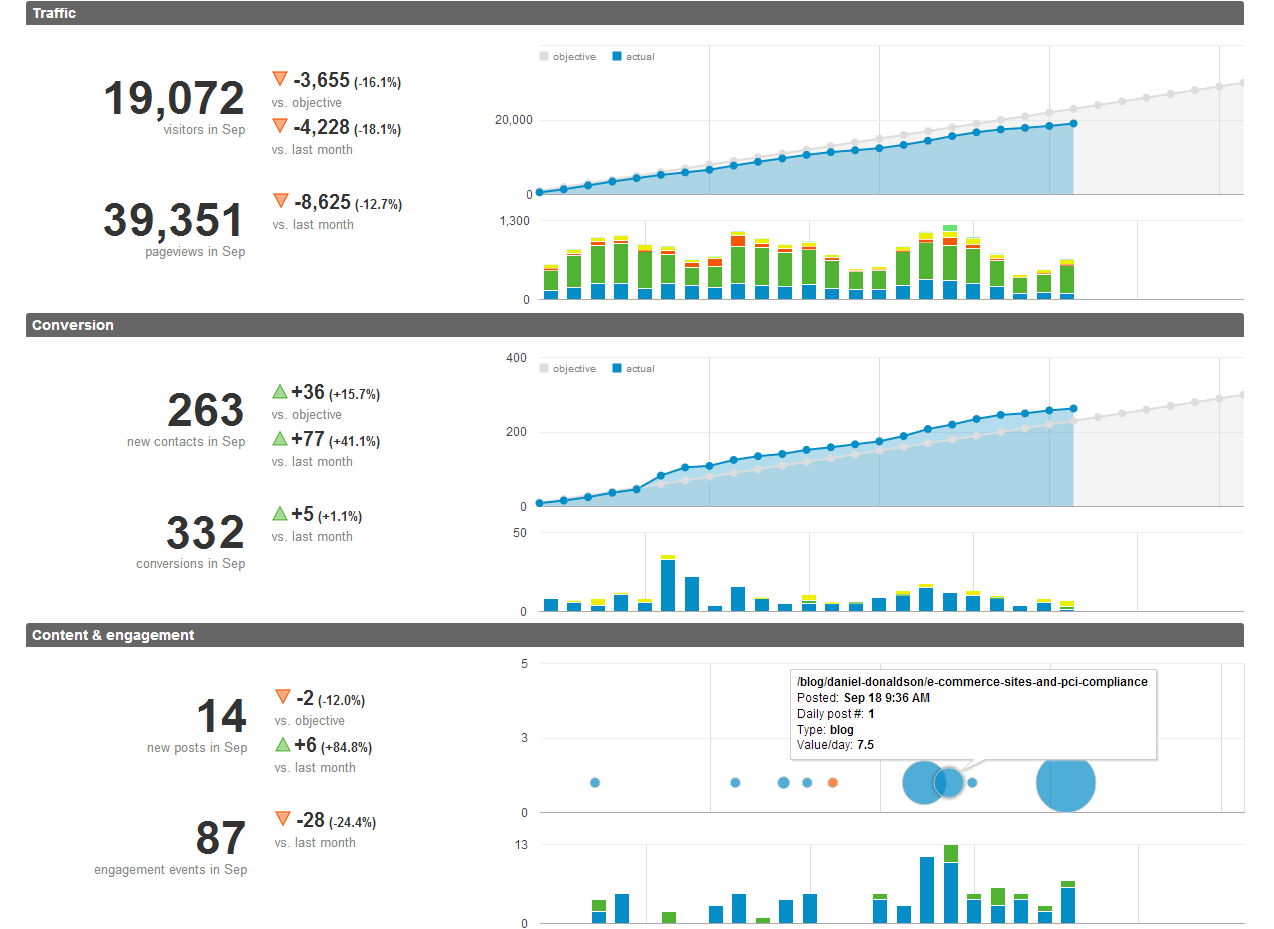

Google Analytics

The Google Analytics Module helps you keep track of every landing page and user behavior flow. It also enables you to eliminate the tracking of in-house personnel who might be visiting the website very often and could be counted as visitors and unique sessions.

It is a great integration to have on your website. However, to take full advantage of it, you are required to use the available web application.

SEO Compliance checker

This module runs a thorough check each time you create a new node or make minute edits to an existing one. The check is re-run to make sure that every SEO element on the newest node follows the right practice and is fully search engine optimized.

You can even enhance it further by devising a set of new rules it should check for in nodes using the compliance checker API.

Site verification

Proving your legitimate identity on the web is necessary these days, as there are numerous spammers spread all over for nefarious reasons. The site verification module allows you to get your site verified without the usual hassle.

This module proves to be extremely necessary when you plan to use numerous webmaster applications. All it makes you do is upload a verification file, and it takes care of the authentication procedure from there on.

Speed and Security Matter

Your website's speed and security jointly influence its search engine rank, as search engines favor swift and secure websites. Implementing SEO best practices alone isn't quite sufficient.

Site speed became a ranking criterion for Google search in April 2010. In other words, when every other aspect of your site is equal to that of your competitor, the faster site will get a higher rank.

Drupal’s responsive web design further enriches SEO, as Google prefers mobile-first websites over those which are not mobile device friendly. In this setup, the server sends the same HTML code to all devices, and CSS changes the visuals on the page for every mobile device. The same URL can be used for all the pages to share and drive traffic to your site.

You can use Google’s AMP to enhance the mobile responsiveness.

SEO is a game of inches. A title tag or body without a keyword, or metadata that is not well structured, can do nothing for your site except kick you off the top search results of Google.

Below are some common SEO errors and their respective solutions/best practices.

Broken Internal Links

First, figure out why you have broken links. If the page has been relocated to another URL, consider applying 301 redirects.

If there happen to be broken external links, fixing them might take longer than you think. You should contact the owners of the websites you linked to and find out why the page was taken down.

The next step is to replace the broken external links with alternatives that offer the same value for your visitors.

Duplicate Content Issues

There can be multiple reasons for duplicate content, for example:

URL variants, HTTP vs. HTTPS pages, WWW vs. non-WWW pages, or plagiarised content.

When it comes to URL variants, the best way to combat them is to set up a 301 redirect from the old URL to the new one. You can also set up rel=canonical pointing to the primary URL, which tells search engines that the other identical URLs should be treated as duplicates of the primary URL, and that all of the links and metrics they have recorded for these identical pages should be credited to the primary URL.
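To see how the HTTP/HTTPS and WWW/non-WWW variants collapse into one primary URL, here is a minimal Python sketch. The `canonical_url` helper and its choice of HTTPS plus `www.` as the primary form are assumptions for illustration; your site may prefer the bare domain.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str, prefer_www: bool = True) -> str:
    """Map scheme, host, and trailing-slash variants onto one primary URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    host = "www." + bare if prefer_www else bare
    path = parts.path.rstrip("/") or "/"
    # Always use HTTPS and drop the fragment, which servers never see anyway.
    return urlunsplit(("https", host, path, parts.query, ""))


if __name__ == "__main__":
    variants = [
        "http://opensenselabs.com/blog",
        "https://opensenselabs.com/blog/",
        "http://www.opensenselabs.com/blog",
        "https://www.opensenselabs.com/blog",
    ]
    print({canonical_url(v) for v in variants})
```

All four variants map to the same string, which is the value you would redirect to or place in the rel=canonical link.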

Crawl Issues

This issue indicates that the crawler couldn’t access the webpage because the server either timed out or refused the connection before the crawler received any response. The best possible solution is to contact your hosting support team and look into the issue.

Incorrect URL Formats

As discussed earlier, you can set up automatic URL aliasing on your Drupal website to enable contextual URL patterns for every landing page.

Low text-to-HTML ratio

Your text-to-HTML ratio indicates the amount of actual text you have on your webpage compared to the amount of code. Search engines focus more on content-laden pages. A higher text-to-HTML ratio means your page has a better chance of securing a good position in search results.

Unnecessary code increases your page load time, which keeps you from ranking well in the SERPs. Moreover, with less code, search engine robots can crawl your Drupal website faster.

One way to check is to separate your webpage’s text content and code into separate files and do a size comparison. If the text-to-HTML ratio is low, consider reviewing your page’s HTML code and removing useless code, embedded scripts, and styles.
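The size comparison can also be automated. The following Python sketch uses the standard library's `HTMLParser` to extract visible text (ignoring scripts and styles) and divides its length by the length of the full markup. The exact formula SEO tools use may differ, so treat `text_to_html_ratio` as an approximation.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html: str) -> float:
    """Return visible-text length divided by total markup length."""
    if not html:
        return 0.0
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())
    return len(text) / len(html)


if __name__ == "__main__":
    page = "<html><body><p>Hello</p><script>var x = 1;</script></body></html>"
    print(round(text_to_html_ratio(page), 3))
```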

Missing meta descriptions

Missing meta descriptions could prevent you from boosting click-through rates, because search results for those pages are not contextually appealing and do not provide a peek into the content.

Using the meta tag module in Drupal should resolve this problem, as it auto-generates a summary of the page and populates the meta description.

Broken internal images

There could be two reasons as to why an internal image isn't accessible anymore:

- It has a misspelled URL

- The file's path is currently invalid.

Broken images hurt the search rankings of a web page because they create a poor user experience, which drives users to leave the page far sooner than they otherwise would. Secondly, they signal to search engines that your page is low quality.

You should immediately replace all broken images with alternatives or delete them before the consequences get worse.

Robots.txt does not exist

Not having a robots.txt file on your Drupal website is not crawler friendly. You can easily implement the robots.txt module, as this file plays a crucial part in overall performance as well as search engine optimization.

Duplicate Title Tags

Pages are considered to have duplicate title tags only if the tags are exactly the same. Duplicate <title> tags make it difficult for search engines to determine which of a website’s pages is relevant for a specific search query, and which one should be prioritized in search results.

Pages with duplicate titles have a lower chance of ranking well and are at risk of being banned. Moreover, identical <title> tags confuse users as to which webpage they should follow. You can fix this by implementing the meta tag module on your Drupal website or by providing a unique and concise title for each of your pages.
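If you already have a list of pages and their titles (for example from a crawl export), spotting exact duplicates is a simple grouping exercise. The sketch below assumes a plain dictionary of URL to title; `find_duplicate_titles` is a hypothetical helper, not part of any Drupal module.

```python
from collections import defaultdict


def find_duplicate_titles(page_titles: dict) -> dict:
    """Group URLs by exact title and keep only titles used more than once."""
    by_title = defaultdict(list)
    for url, title in page_titles.items():
        by_title[title].append(url)
    return {title: sorted(urls) for title, urls in by_title.items() if len(urls) > 1}


if __name__ == "__main__":
    titles = {
        "/blog/a": "Drupal SEO Guide",
        "/blog/b": "Drupal SEO Guide",
        "/about": "About Us",
    }
    print(find_duplicate_titles(titles))
```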

4xx Error

A 4xx error means the requested webpage cannot be accessed. This is usually the result of broken links. These errors prevent users and search engine robots from accessing your web pages, which can bring a dramatic drop in your usual traffic. Crawlers may also categorize a working link as broken if your website blocks the crawler from accessing it.

The following may be the reasons:

- DDoS protection system.

- Overloaded or misconfigured server.

- Disallowed entries in your robots.txt.

You can manually check all 4xx error links to see whether the webpage returns an error. If it does, removing the link leading to the error page or replacing it with an alternative resource would make sense.

If the links reported as 4xx do work when accessed with a browser, you should instruct the search engine robots not to crawl your website too frequently by specifying the crawl-delay directive in your robots.txt.
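To check what a crawler would read from your robots.txt, Python's standard `urllib.robotparser` can parse the file and report the crawl delay and disallowed paths. The robots.txt content and the `ExampleBot` user agent below are made up for illustration, and keep in mind that not every search engine honours the crawl-delay directive.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration only.
ROBOTS_TXT = """\
User-agent: ExampleBot
Crawl-delay: 10
Disallow: /admin/
"""


def crawl_rules(robots_txt: str, user_agent: str, path: str):
    """Return (crawl delay in seconds, whether `path` may be fetched)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent), parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    print(crawl_rules(ROBOTS_TXT, "ExampleBot", "/admin/config"))
    print(crawl_rules(ROBOTS_TXT, "ExampleBot", "/blog/article"))
```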

Uncompressed Pages

This issue is triggered if the Content-Encoding entity is not present in the response header of your Drupal website. Having compressed pages is necessary to the process of optimizing your website. Using uncompressed pages leads to a slower page load time, resulting in a poor user experience and a lower search engine ranking.

You should make sure the elements of your web page such as images, videos, graphics do not exceed the standard size. Also, enable CSS and JS aggregation on your Drupal website.
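The check for a missing Content-Encoding header, and a rough estimate of what compression would save, can be sketched in Python as follows. Both helper names are hypothetical, and the list of encodings is an assumption covering the common ones.

```python
import gzip


def is_compressed(headers: dict) -> bool:
    """True if the response headers declare a common compression encoding."""
    encoding = ""
    for name, value in headers.items():
        if name.lower() == "content-encoding":
            encoding = value.lower()
    tokens = [token.strip() for token in encoding.split(",")]
    return any(token in ("gzip", "br", "deflate", "zstd") for token in tokens)


def gzip_savings(body: bytes) -> float:
    """Fraction of bytes saved by gzip-compressing the body."""
    if not body:
        return 0.0
    return 1 - len(gzip.compress(body)) / len(body)


if __name__ == "__main__":
    print(is_compressed({"Content-Type": "text/html"}))  # False
    print(round(gzip_savings(b"<p>hello</p>" * 500), 2))
```

Highly repetitive markup compresses very well, which is why text-heavy HTML, CSS, and JS responses benefit the most.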

External links contain Nofollow attributes

Nofollow links are known not to pass any link juice or anchor text to the webpages they refer to. Nofollow is a value of the rel attribute in an <a> tag which tells crawlers not to follow the link.

You might have mistakenly used nofollow attributes, which may negatively impact the crawling process and ultimately your rankings.

You should check that you don't include nofollow attributes by mistake, and remove any unintended ones from your <a> tags.
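One way to audit a page for this is to list every external link that carries nofollow. The Python sketch below uses the standard `HTMLParser`; the `NofollowFinder` class is hypothetical, and the host name passed in is whatever your own site uses.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class NofollowFinder(HTMLParser):
    """Collects external links whose rel attribute contains nofollow."""

    def __init__(self, site_host: str):
        super().__init__()
        self.site_host = site_host
        self.flagged = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href") or ""
        rel_tokens = (attributes.get("rel") or "").lower().split()
        host = urlsplit(href).netloc.lower()
        # Relative links have no host, so they count as internal.
        if host and host != self.site_host and "nofollow" in rel_tokens:
            self.flagged.append(href)


if __name__ == "__main__":
    finder = NofollowFinder("www.opensenselabs.com")
    finder.feed('<a href="https://example.org/a" rel="nofollow">partner</a>')
    print(finder.flagged)  # ['https://example.org/a']
```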

No redirect to HTTPS homepage from HTTP version

Without this redirect, you may lose your usual traffic, as visitors could be taken to an error page instead. Redirecting the HTTP version of your website to HTTPS will ensure the page requests are fulfilled, ultimately preventing a terrible user experience.

Don't have an h1 heading

Pages which don’t have an H1 heading are not given the position they might deserve in the search results. As mentioned earlier, page hierarchy, which includes headings, plays a fair part in Drupal SEO.
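A quick way to audit this is to count the H1 tags in a page's markup. The following Python sketch is a hypothetical helper built on the standard `HTMLParser`; a count of zero flags the issue described above.

```python
from html.parser import HTMLParser


class H1Counter(HTMLParser):
    """Counts opening <h1> tags in a document."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self.count += 1


def count_h1(html: str) -> int:
    counter = H1Counter()
    counter.feed(html)
    return counter.count


if __name__ == "__main__":
    print(count_h1("<h1>Drupal SEO</h1><h2>Modules</h2>"))  # 1
    print(count_h1("<h2>No main heading here</h2>"))        # 0
```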

Sitemap XML not found

A sitemap.xml file enables the website to be fully crawled as per its hierarchy. It can also include additional data about each URL. It provides easier navigation and better visibility to search engines, and it quickly informs search engines about any new or updated content on your website.

Generate a sitemap.xml file by implementing the XML sitemap module on your Drupal website. This is a far better approach, as building it manually would consume too much time.
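For a sense of what the generated file contains, here is a minimal Python sketch that builds a sitemap in the sitemaps.org format and lets you exclude pages you don't want listed. The `build_sitemap` function, the URLs, and the dates are illustrative assumptions; the Drupal module produces and maintains this file for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages, excluded=()):
    """Build sitemap XML from (url, lastmod) pairs, skipping excluded URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        if loc in excluded:
            continue
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    pages = [
        ("https://www.opensenselabs.com/", "2018-01-01"),         # illustrative date
        ("https://www.opensenselabs.com/old-page", "2017-01-01"),  # illustrative date
    ]
    print(build_sitemap(pages, excluded={"https://www.opensenselabs.com/old-page"}))
```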

Thin content

Thin content is poor for search engine optimization. To eliminate the issue, make sure every landing page has at least 200 meaningful words (the bare minimum).

The remainder

Drupal is an amazing CMS for search engine optimization, and using the right SEO modules will push you up the search engine ladder.

At OpenSense Labs we pride ourselves on educating our customers, developing brilliant websites and giving back to the Drupal community. Building SEO-friendly websites is our niche with which we aim to satisfy our customers and theirs. Hopefully, this article will set you on the right path to a high-quality website, but if you need more information or a quote, please feel free to get in touch with us at [email protected]
