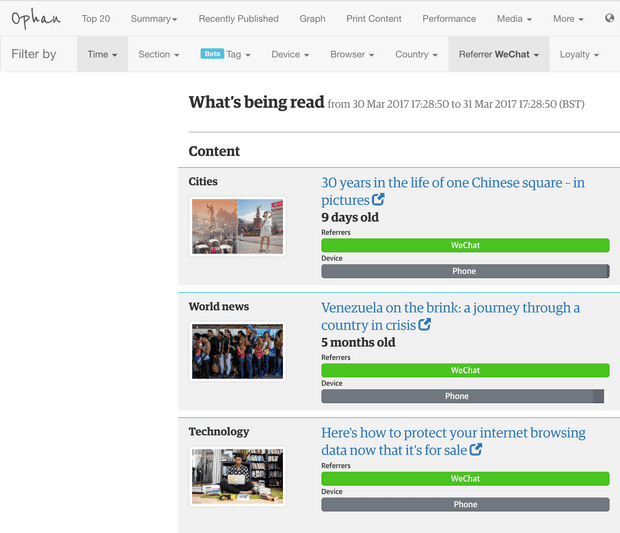

Elasticsearch has been meritorious for The Guardian, one of the most reputed news media, by giving them the freedom to build a stupendous analytics system in-house rather than depending on a generic, off-the-shelf analytics solution. Their traditional analytics package was horrendous and was extremely sluggish consuming an enormous amount of time. The Elasticsearch-powered solution has turned out to be an enterprise-wide analytics tool and helped them understand how their content is being consumed.

Why is such a large organisation like The Guardian choosing Elasticsearch for its business workflow? Elasticsearch is all about full-text search, structured search, analytics, intricacies of confronting with human language, geolocation and relationships. Drupal, one of the leading content management frameworks, is a magnificent solution for empowering digital innovation and can help in implementing elastic search. Before we look at Drupal’s capability in implementing elastic search ecosystem, let’s unwrap Elasticsearch first.

Unlocking Elasticsearch

Elasticsearch is an open source, broadly distributable, RESTful search and analytics engine which is built on Apache Lucene. It can be accessed through an extensive and elaborate API. It enables incredibly fast searches for supporting your data discovery applications. It is used for log analytics, full-text search, security intelligence, business analytics and operational applications.

Elasticsearch is a distributed, scalable, real-time search and analytics engine - Elastic.io

It enables you to store, search and assess the voluminous amount of data swiftly and in near real-time. In general, it is leveraged as the underlying engine/technology for powering applications that have sophisticated search features and requirements.

How does Elasticsearch work? With the help of API or ingestion tools like Logstash, data is sent to Elasticsearch in the form of JSON documents. The original document is automatically stored by Elasticsearch and a searchable reference is added to the document in the cluster’s index. Elasticsearch API can, then, be utilised for searching and retrieving the document. Kibana, an open-source visualisation tool, can also be leveraged with Elasticsearch for visualising the data and create interactive dashboards.

Elasticsearch is often debated alongside Apache Solr. There is even a dedicated website called solr-vs-elasticsearch that compares both of them on various metrics. Both the solutions accompany itself with support for integration with open source content management system like Drupal. It depends upon your organisation’s needs to select from either one of them.

For instance, if your team comprises a superabundance of Java programmers, or you already are using ZooKeeper and Java in your stack, you can opt for Apache Solr. On the contrary, if your team includes PHP/Ruby/Python/full stack programmers or you already are using Kibana/ELK stack (Elasticsearch, Logstash, Kibana) for handling logs, you can choose Elasticsearch.

Merits of Elasticsearch

Following are some of the benefits of Elasticsearch:

- Speed: Elasticsearch helps in leveraging and accessing all the data at a fast clip. Also, it makes it simple to rapidly build applications for multiple use cases.

- Performance: Being highly distributable, it allows the processing of a colossal amount of data in parallel and swiftly finds the best matches for your search queries.

- Scalability: Elasticsearch offers provision for easily operating at any scale without comprising on power and performance. It allows you to move from prototype to production boundlessly. It scales horizontally for governing multiple events per second while simultaneously handling the distribution of indices and queries across the cluster for efficacious operations.

- Integration: It comes integrated with visualisation tool Kibana. It also offers integration with Beats and Logstash for streamlining the process of transforming source data and loading it into Elasticsearch cluster.

- Safety: It detects failures for keeping the cluster and the data safe and available. With cross-cluster replication, a secondary cluster can be leveraged as a hot backup.

- Real-time operations: Elasticsearch operations like reading or writing data is usually performed in less than a second.

- Flexibility: It can pliably handle application search, security analytics, metrics, logging among others.

- Simple application development: It offers support for numerous programming languages comprising of Java, Python, PHP, JavaScript, Node.js, Ruby among others.

Elasticsearch with Drupal

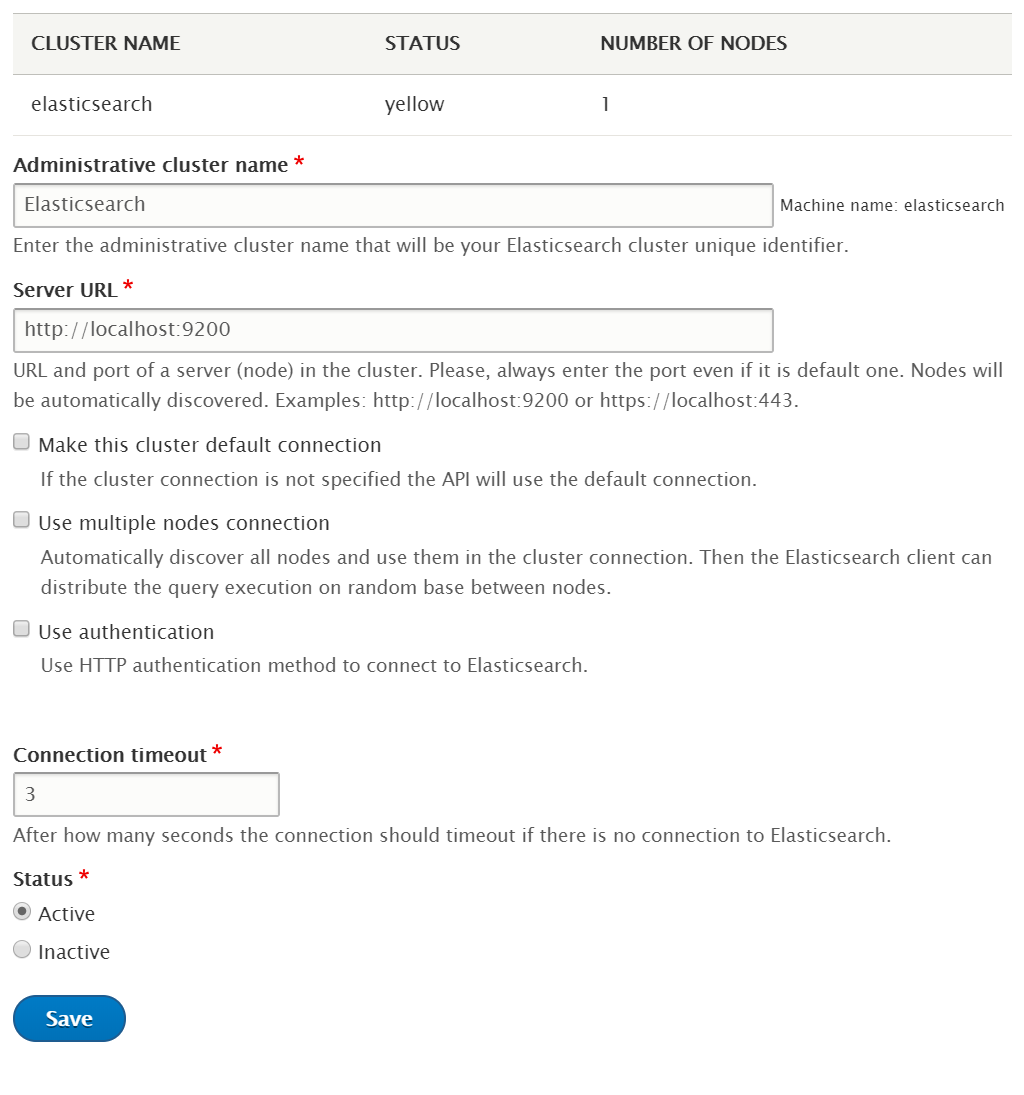

For designing a full Elasticsearch ecosystem in Drupal, Elasticsearch Connector, which is a set of modules, can be utilised. It leverages the official Elasticsearch PHP library and was built with the objective of handling large sets of data at scale. It is worth noting that this module is not covered by security advisory policy.

Elasticsearch Connector module can be utilised with a Drupal 8 installation and configured so that Elasticsearch receives the content changes

Elasticsearch Connector module can be utilised with a Drupal 8 installation and configured so that Elasticsearch receives the content changes. At first, you need to download a stable release of Elasticsearch and start it. You can, then, move ahead and set up Search API. This is followed by the process of connecting Drupal to Elasticsearch with the help of Elasticsearch Connector module which involves the creation of cluster or the collection of node servers where all the data will get stored or indexed.

This is succeeded by the configuration of Search API. It offers an abstraction layer to allow Drupal to push content alterations to different servers such as Elasticsearch, Apache Solr, or any other provider that has a Search API compatible module. The indexes are created in each of those servers with the help of Search API. These indexes are like buckets where the data can be pushed and can be searched in different ways. Subsequently, indexing of content and processing of data is done.

Case Study

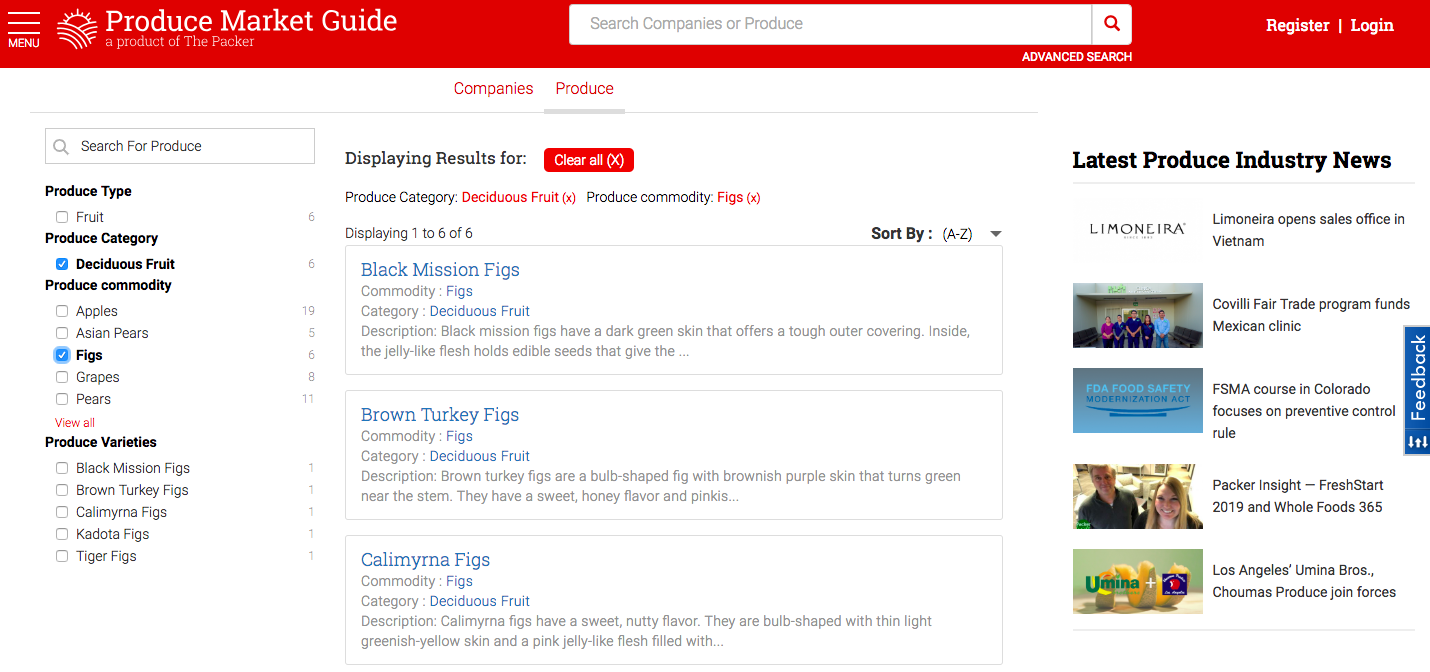

The website of Produce Market Guide (PMG), a resource for produce commodity information, fresh trends and data analysis, was rebuilt by OpenSense Labs. Interpolation of a JavaScript framework into the Drupal front end using progressively decoupled Drupal helps in creating a balance between the workflows of developers and content editors.

We rebuilt the website of PMG using progressively decoupled Drupal, React and Elasticsearch Connector module among others.

To do the mapping and indexing on Elastic Server, ElasticSearch Connector and Search API modules were leveraged. The development of Elastic backend architecture was followed by the building process of the faceted search application with React and the incorporation of the app in Drupal as block or template page.

The project structure for the search was designed and developed in the sandbox with modern tools like Babel and Webpack and third-party libraries like Searchkit. Searchkit is a suite of React components that interact directly with your ElasticSearch cluster where every component is built using React and can be customised as per your needs. Searchkit was of immense help in this project.

Logstash and Kibana, which are based on Elasticsearch, were integrated on the Elastic Server. This helped in collecting, parsing, storing and visualising the data. The app in the Sandbox was built for the production and all the CSS/JS was integrated inside the Drupal as a block thereby making it a progressively decoupled feature.

Following the principles of Agile and Scrum, it resulted in a user-friendly website for PMG with a search application and loaded the search results faster.

Conclusion

The world is floating over a cornucopia of data. There is simply no end to the growth in the amount of data that is flowing through and produced by our systems. Existing technology has laid emphasis on how to store and structure warehouses replete with data.

But when it comes to making decisions in real time informed by that data, you need something like an Elasticsearch for searching and analysing data in real-time. Drupal can be a wonderful solution for implementing Elasticsearch ecosystem with its suite of modules.

We have been steadfast in our goals of empowering digital innovation with our suite of services.

Contact us at [email protected] to reap the rewards of Elasticsearch and ingrain your digital presence with advanced search capabilities.

Subscribe

Related Blogs

Drupal's Role as an MCP Server: A Practical Guide for Developers

"The MCP provides a universal open standard that allows AI models to access real-world data sources securely without custom…

What’s New in Drupal CMS 2.0: A Complete Overview

"Drupal CMS 2.0 marks a significant change in the construction of Drupal websites, integrating visual site building, AI…

Drupal AI Ecosystem Part 6: ECA Module & Its Integration with AI

Modern Drupal sites demand automation, consistency, and predictable workflows. With Drupal’s ECA module, these capabilities…