Kubernetes is taking over the cloud space at a rapid pace and is becoming the operating system for the cloud. Its broad reach has brought significant benefits to developers and organizations. The year 2017 saw the arrival of many native Kubernetes service providers, while many other container orchestration platforms were reworked around it, forming a solid foundation for cloud service providers. The rapid growth in Kubernetes adoption suggests that its future is very bright.

Let us look at why Kubernetes is trending and which of its qualities are attracting so much interest.

What is Kubernetes?

Introduced by Google in 2014, Kubernetes is an open-source project whose central aim is to provide a robust system for running thousands of containers in production.

The name comes from the Greek word ‘κυβερνήτης’, meaning ‘helmsman’ or ‘pilot’. The project had its initial release in 2014, and Google, the original author, made it open source.

Combining industry-leading ideas and practices from the Google community, Kubernetes can be defined as a portable and flexible platform for managing containerized workloads and services. It enables declarative configuration, scaling, and automation of containerized applications. It groups containers into logical units for easier management and discovery. The Kubernetes ecosystem of tools, services, and support is large and widely available.
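
To make "declarative configuration" concrete, here is a minimal sketch that builds a Deployment manifest as a plain Python dictionary and prints it as JSON. The application name `web`, the image `nginx:1.25`, and the helper `deployment_manifest` are illustrative assumptions, not something taken from a particular setup.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Describe the desired state: `replicas` copies of one container image."""
    labels = {"app": name}  # hypothetical label, used to tie the pieces together
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Assumed example values; swap in your own name, image, and replica count.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

The manifest only states what should exist (three replicas of one image); working out how to get there is left to Kubernetes. Saved to a file, JSON output like this could be submitted with `kubectl apply -f`.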

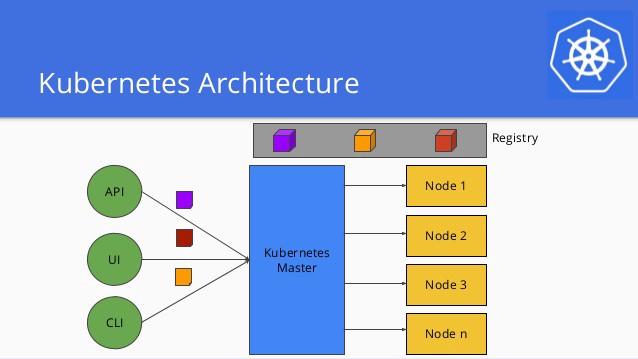

Kubernetes Architecture

Like many distributed computing platforms, a Kubernetes cluster is made up of the following components.

The Master Node (at least one) manages the overall Kubernetes cluster, exposes the application programming interface (API), and schedules deployments across the cluster.

Nodes (worker nodes) are virtual machines (VMs) running in the cloud or bare-metal servers running in a data center. Each node runs an agent that connects to the master, along with a container runtime (Docker or rkt). Logging, monitoring, service discovery, and optional add-ons are further components of a node. As the workers of the Kubernetes cluster, nodes provide compute, network, and storage resources to the applications.

Every container is different from every other container. For example, one may be a web app while another is used for persistent data storage. Kubernetes simplifies running a single container and, at the same time, makes it easier to run different kinds of containers side by side. Kubernetes recognizes that running a system is about more than running a single container, and this sets it apart from other orchestration systems.

Is it possible to design an application with many moving parts while keeping orchestration and deployment smooth? How can load be distributed evenly? How can storage be kept consistent across multiple instances? These are some of the recurring questions that Kubernetes addresses.

Traditional deployment to Kubernetes - Evolution

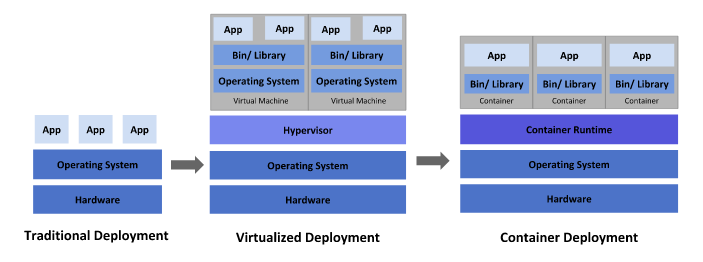

Traditional Deployment Cycle

The traditional deployment era consisted of applications running on physical servers. Because there was no mechanism for allocating resources among applications, this caused many resource allocation issues. In many instances, one application took up most of the resources, causing the other applications to underperform.

Running each application on a different physical server was the recommended solution to this issue. However, this left resources underutilized and made it expensive for organizations to maintain the physical servers.

Virtualized Deployment Cycle

Virtualization was introduced to solve the problems that arose with traditional physical servers. A virtual machine is a complete system with its own operating system, running on top of virtualized hardware. Virtualization allows better utilization of the resources of a physical server.

With virtualization, applications are isolated from one another in separate virtual machines, which keeps them secure: one application cannot easily access another application's information. Applications can also be added or updated easily, which reduces hardware costs.

Container Deployment Cycle

Containers are similar to virtual machines, but their isolation is more relaxed because applications share the operating system. Containers are lightweight, and each has its own file system and its own share of CPU, memory, and so on. They can also be ported easily across clouds and OS distributions. Continuous integration and deployment, agile application creation and deployment, and high efficiency and density through better resource utilization are some of the benefits organizations gain by moving to a container deployment cycle.

Reasons why Kubernetes is trending

The following reasons explain why so many people are choosing Kubernetes over the alternatives.

- Storage orchestration: Kubernetes lets a user automatically mount a storage system of their choice, from local storage to public cloud providers and many more.

- Load balancing and service discovery: If traffic to a container is high, Kubernetes balances the load and distributes the network traffic so that the deployment stays stable. In addition, Kubernetes can expose a container using a DNS name or its own IP address.

- Appropriate resource management: With Kubernetes, you can specify how much CPU and RAM each container needs. When containers declare their resource requests, Kubernetes can make better decisions about allocating and managing resources for them.

- Automated rollouts and rollbacks: Once you describe the desired state of your deployed containers, Kubernetes changes the actual state to the desired state at a controlled rate. For example, the replacement of old containers with new ones can be automated with Kubernetes.

- Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that do not respond to a user-defined health check, and does not advertise them to clients until they are ready to serve.

- Secret and configuration management: Kubernetes stores and manages sensitive information such as passwords, OAuth tokens, and SSH keys. Secrets and application configuration can be deployed and updated without rebuilding your container images and without exposing secrets in your stack configuration.
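
The "controlled rate" behind automated rollouts can be sketched in a few lines. This is a simplified simulation, not how Kubernetes is implemented: pods are represented only by a version string, and `rolling_update` and `batch_size` are hypothetical names chosen for illustration.

```python
def rolling_update(pods: list[str], new_version: str, batch_size: int = 1) -> list[list[str]]:
    """Replace old pods with `new_version`, at most `batch_size` at a time.

    Returns the list of pod versions after each step, starting with the
    initial state, so the gradual move to the desired state is visible.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    current = list(pods)
    history = [list(current)]
    # Indices of pods that are not yet running the desired version.
    pending = [i for i, version in enumerate(current) if version != new_version]
    for start in range(0, len(pending), batch_size):
        for i in pending[start:start + batch_size]:
            current[i] = new_version
        history.append(list(current))
    return history


if __name__ == "__main__":
    for step in rolling_update(["v1", "v1", "v1"], "v2"):
        print(step)
```

A rollback is the same operation with the previous version as the target, for example `rolling_update(pods, "v1")`.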

Changing the development and infrastructure landscape with Kubernetes

Kubernetes handling Systems

As already stated, a Kubernetes cluster has two types of nodes: master and worker. The bullets below cover the working model and the other building blocks of Kubernetes.

- Pod: A pod is a collection of containers, or a unit of work, deployed to the same host. Each pod has its own IP address, which makes it easier to find services through Kubernetes service discovery. A pod runs a single container or several tightly coupled containers or services. Kubernetes connects the pod to the application environment and manages it, handling concerns such as deployment and monitoring.

- Replication controller: A replication controller maintains the number of pods requested by the user and makes it easy to scale the application. If a container goes down, the replication controller starts another one, ensuring that enough replica pods are always available.

- Service: A service is a single entity formed by logically grouping a set of pods that perform the same function. The nodes of a cluster are notified when a new service is created. Organizing pods into services simplifies container design and makes containers easy to discover. A single point of access makes communication with the set of pods straightforward.

- Label: Labels are essential for the proper functioning of services and replication controllers. They are metadata tags that make Kubernetes resources easy to find. Because much of Kubernetes relies on querying the cluster for resources, label-based queries make this search easy for developers.

- Volume: A volume is the place where containers access and store information, backed by Ceph, local storage, elastic block storage, and so on. To an application, a volume appears as part of the local file system.

- Namespace: Grouping inside a Kubernetes cluster is done through namespaces. Services, pods, replication controllers, and volumes can easily work together within a namespace. A namespace also offers some isolation from other parts of the cluster.
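
The way labels and replication controllers work together can be illustrated with a small simulation. This is a sketch under simplifying assumptions: the `Pod` class, the `matches` and `reconcile` helpers, and the generated pod names are all hypothetical and only model the idea of selecting pods by label and keeping a requested number of them running.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Pod:
    name: str
    labels: dict
    healthy: bool = True


def matches(selector: dict, labels: dict) -> bool:
    """A pod is selected when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


_ids = count(1)  # source of names for replacement pods (illustrative only)


def reconcile(pods: list, selector: dict, replicas: int) -> list:
    """Return the pod list after one pass of a simplified replication controller."""
    # Drop failed pods that this controller is responsible for.
    survivors = [p for p in pods if p.healthy or not matches(selector, p.labels)]
    owned = [p for p in survivors if matches(selector, p.labels)]
    # Too few replicas: start replacements carrying the selector's labels.
    while len(owned) < replicas:
        pod = Pod(f"pod-{next(_ids)}", dict(selector))
        survivors.append(pod)
        owned.append(pod)
    # Too many replicas: remove the surplus.
    surplus = {id(p) for p in owned[replicas:]}
    return [p for p in survivors if id(p) not in surplus]


if __name__ == "__main__":
    cluster = [
        Pod("a", {"app": "web"}),
        Pod("b", {"app": "web"}, healthy=False),  # simulated failure
        Pod("c", {"app": "db"}),
    ]
    for pod in reconcile(cluster, {"app": "web"}, replicas=3):
        print(pod)
```

Pods that do not match the selector (the `db` pod here) are left untouched, which is why consistent labeling matters for both services and replication controllers.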

Development on and with Kubernetes

To give developers a better experience, the Kubernetes team and community actively work to strengthen community engagement and to provide proper documentation for newcomers. The aim is to make learning and adopting Kubernetes streamlined and painless. The following points show how Kubernetes simplifies the work of developers.

Minikube runs Docker and Kubernetes in a virtual machine and is used to create small, self-contained clusters on local machines (macOS, Linux, Windows). You can deploy to Minikube as you would to any other Kubernetes cluster. With a local Kubernetes running on top of Docker, Minikube imitates the deployment and production workflow more closely than ever. Docker images designed to run in another environment can easily be run locally with Minikube, with no changes. Tools like Draft from Azure address many of the challenges of working on a local system. Together, these form a complete local development environment. Docker has also added Kubernetes to its own package.

Once the deployment setup is understood, the next step is to apply continuous delivery to the Kubernetes workflow while keeping releases unaltered, avoiding breakage, and increasing reusability and consistency between environments. Helm is a tool for managing Kubernetes charts, which are packages of pre-configured Kubernetes resources. It can be used to express Kubernetes resources as reusable templates across environments, with variables changed at deployment time. Helm charts are also available for several common open-source applications, such as Grafana, InfluxDB, and Concourse.
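
The idea of one reusable template with per-environment variables can be sketched as follows. Helm itself renders charts with Go templates; Python's `string.Template` is used here only as a simple stand-in, and the names, image tags, and replica counts in `VALUES` are made-up example values.

```python
from string import Template

# One template shared by every environment; only the placeholders vary.
MANIFEST_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
""")

# Hypothetical per-environment values, supplied at deployment time.
VALUES = {
    "dev": {"name": "web", "image": "example/web:latest", "replicas": 1},
    "prod": {"name": "web", "image": "example/web:1.4.2", "replicas": 3},
}


def render(environment: str) -> str:
    """Fill the shared template with the values for one environment."""
    # substitute() raises KeyError if a placeholder has no value.
    return MANIFEST_TEMPLATE.substitute(VALUES[environment])


if __name__ == "__main__":
    print(render("prod"))
```

Because the template is shared, the development and production manifests differ only in the values passed in, which is what keeps environments consistent.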

Kubernetes & Cloud Infrastructure Ecosystem

In a cloud infrastructure environment, Kubernetes works as an abstraction layer between the cloud platform and the applications. This makes it possible to run clusters across multiple cloud providers, and Kubernetes can also run on bare-metal servers, which broadens its reach. Kubernetes enables both tight integration with cloud providers and independence from them. For example, suppose an application requires a load balancer. With a conventional approach, the application is first built and packaged. Then a deployment environment that requests an AWS Elastic Load Balancer is described with Terraform templates or CloudFormation.

With Kubernetes, the load balancer is specified in the application's manifest, but not necessarily as an AWS Elastic Load Balancer. Kubernetes fulfills the request for a load balancer according to the cloud provider in which the cluster is deployed. So, with the deployment configuration unchanged, a cluster in AWS is automatically supplied with and connected to an AWS Elastic Load Balancer, whereas a cluster in Google Cloud is provisioned with the GCP load balancer. The same pattern applies to external DNS records and to durable, stable storage volumes.
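
The provider independence described here can be sketched as a lookup: the manifest only asks for a load balancer, and the cluster's cloud provider decides which one is created. The Service fields follow the standard manifest shape, but the `provision` function, the provider keys, and the port numbers are illustrative assumptions, not Kubernetes internals.

```python
# A Service manifest that asks for "a load balancer" without naming a vendor.
SERVICE = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "type": "LoadBalancer",
        "selector": {"app": "web"},
        "ports": [{"port": 80, "targetPort": 8080}],  # assumed example ports
    },
}

# Simplified stand-in for the cloud-specific integrations.
PROVIDER_LOAD_BALANCERS = {
    "aws": "AWS Elastic Load Balancer",
    "gcp": "GCP load balancer",
}


def provision(service: dict, provider: str):
    """Return the load balancer a cluster on `provider` would supply, if any."""
    if service["spec"].get("type") != "LoadBalancer":
        return None  # the service did not request an external load balancer
    if provider not in PROVIDER_LOAD_BALANCERS:
        raise ValueError(f"no load balancer integration for {provider!r}")
    return PROVIDER_LOAD_BALANCERS[provider]


if __name__ == "__main__":
    for cloud in ("aws", "gcp"):
        print(cloud, "->", provision(SERVICE, cloud))
```

The same `SERVICE` definition is used for both clouds; only the environment it is deployed into changes.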

Moving further into the cloud, tools such as Kubeless and Fission run on Kubernetes and work much like functions-as-a-service, which shows how strong a foundation Kubernetes provides in the serverless world. Where are we heading? Is the future serverless?

Conclusion

Kubernetes is rapidly becoming the de facto orchestration tool for declaratively managing open infrastructure, and it is still growing at a rapid pace. Containers are spreading throughout software development. By managing all the moving parts and dependencies, Kubernetes brings out the power of the infrastructure and will support continued growth across various infrastructures while keeping them less complex.

The next few years are going to see many more advancements in Kubernetes, along with many exciting technologies built on top of it, enabling enterprises to capitalize on its major benefits. The future is bright indeed.
