GenAI vs LLM: What’s the Real Difference?

In recent years, artificial intelligence has gained immense popularity, particularly on social media. Picture this: you're browsing Reddit, scrolling reels on Instagram, checking out posts on X (formerly known as Twitter), or chatting with your colleagues, and terms like "GenAI," "LLM," and "machine learning" keep popping up.

You nod along with the discussion, but deep down you may be wondering: what do these words really mean?

And why does it feel like everyone is either thrilled or anxious about them?

It also seems that many people use these "AI terms" interchangeably, as though they all meant the same thing.

So what do these terms actually mean, and how are they related?

In short, Generative AI covers AI that creates many kinds of content, such as text, images, and music, whereas LLMs are centered specifically on language. This means that while every LLM is a form of generative AI, not every generative AI model is an LLM.

For example, GPT-4o is both a large language model (LLM) and a generative AI (GenAI) model. Midjourney, by contrast, creates only images, so it is a GenAI model but not an LLM.

In this blog, we will cover the basics of GenAI vs LLM: what they are, how they work, when to use each, and the practical examples that distinguish them.

And if you're looking for responsible AI services like AI-driven search and personalization, check out the responsible AI offerings from OpenSense Labs before proceeding.

Now, let's begin with the basics!

GenAI vs LLM: Understanding the Basics

The first thing to grasp about GenAI vs LLM is that the two are neither opposites nor synonyms. Generative AI is the broad category of models that create new content, and LLMs are the members of that category that specialize in language.

Now, let’s explore the differences between GenAI and LLMs in more detail.

Large Language Models (LLMs) are advanced deep learning systems that use transformer-based neural networks. They are trained on large text datasets collected from books, websites, forums, and other written materials. Models like GPT-4o are built to understand language patterns and the connections between words, enabling them to generate clear and contextually relevant responses.

Through this training, LLMs gain the ability to understand questions, summarize information, translate languages, and perform complex reasoning tasks on their own.

The training process emphasizes learning the connections between words, which are converted into numerical forms known as embeddings. GPT-4, for example, is widely reported to have more than a trillion parameters (OpenAI has not published the figure) and applies self-attention to improve precision and contextual comprehension.
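To make the idea of embeddings a little more concrete, here is a minimal sketch in Python. The three-dimensional vectors are hand-picked, hypothetical values used only for illustration; real models learn vectors with hundreds or thousands of dimensions. The sketch shows how related words can end up with vectors that point in similar directions, measured here with cosine similarity.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "car": [0.1, 0.2, 0.95],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # "cat" should be closer to "kitten" than to "car".
    print(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["kitten"]))
    print(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"]))
```

With these toy values, "cat" and "kitten" score close to 1, while "cat" and "car" score much lower.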

On the other hand, Generative AI (GenAI) represents a broader range of AI models that can generate new content by recognizing and learning from patterns in their training data. Rather than just predicting outcomes, GenAI creates new outputs based on the patterns it has absorbed, enabling it to produce text, images, audio, and videos that closely resemble content created by humans.

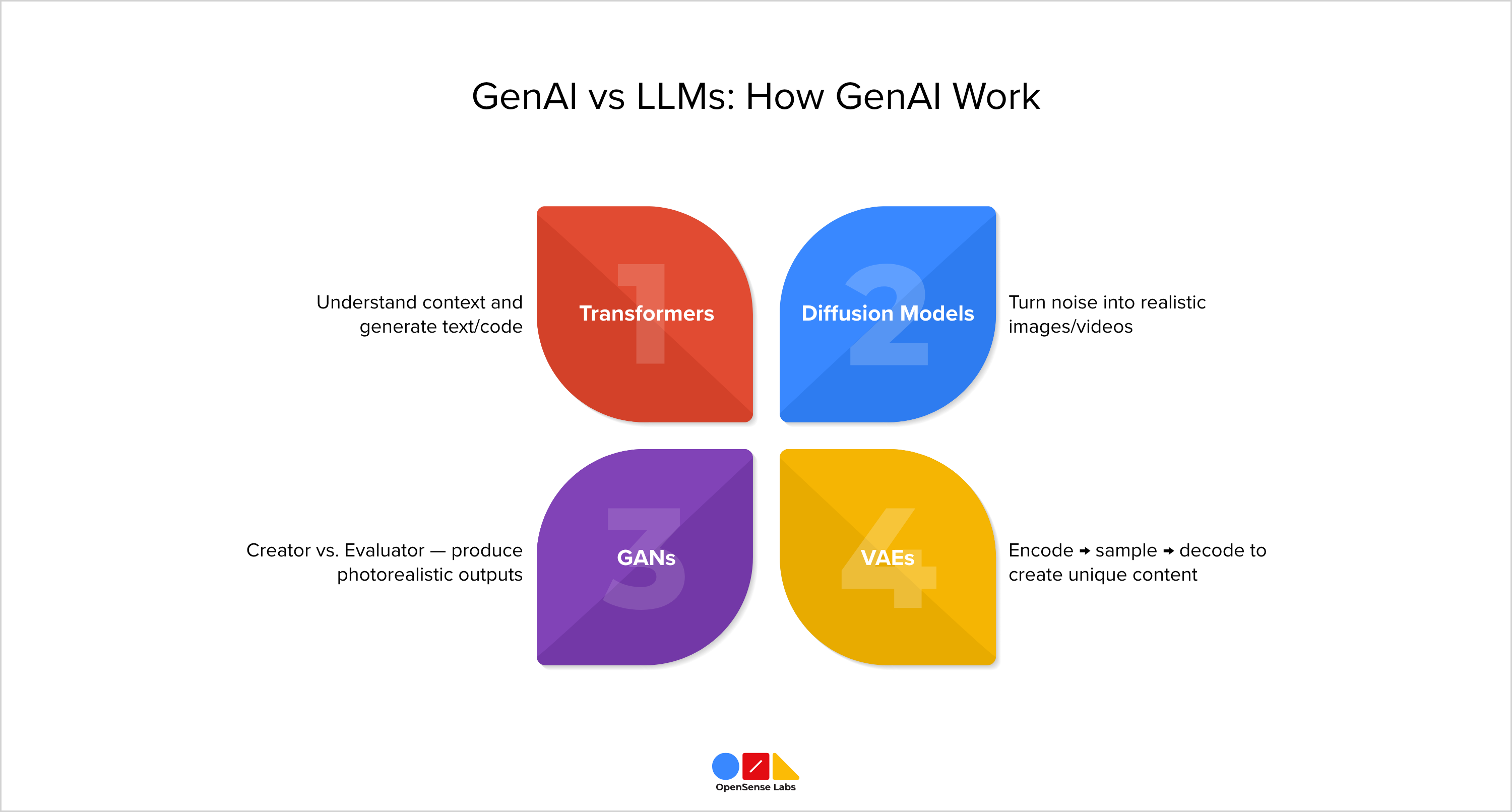

While LLMs handle the text side of GenAI, other model families, such as diffusion models, GANs, and VAEs, create images, videos, and audio. In other words, several different architectures drive Generative AI.

Large Language Models are one example: they generate original text using transformer architectures, which makes them one specific type of GenAI. In fields outside of text, however, other architectures tend to be more effective.

One of the most commonly used models is the Generative Adversarial Network (GAN). This framework consists of two opposing networks: a generator that creates content and a discriminator that evaluates the authenticity of that content. The generator's goal is to produce outputs that the discriminator cannot tell apart from real data, thereby narrowing the difference between content created by humans and that generated by machines.

This architecture has facilitated numerous advancements in GenAI, especially in the realms of image and video generation, leading to innovations such as deepfakes, image enhancement, and creative design tools.

GenAI vs LLM: How GenAI Works

At the core of Generative AI are models designed to understand data structures and produce outputs that resemble human creativity. This technology powers applications such as ChatGPT, DALL·E, Sora, and RunwayML, fundamentally changing our methods of creativity, automation, and tackling challenges. Here is how GenAI works:

Transformers

Transformers are advanced deep learning models that utilize a self-attention mechanism to grasp context and relationships in data. They drive contemporary large language models (LLMs) such as GPT (OpenAI), Claude (Anthropic), and LLaMA (Meta), and they excel in various tasks, including text generation, translation, summarization, code generation, and creating embeddings.

How it works:

- Converting Words into Numerical Vectors: Words are first converted into numerical vectors so that the model can interpret their meaning and position.

- Analyzing All Words Simultaneously: Transformers look at all words together instead of one by one. For instance, in the sentence "The cat chased the mouse because it was hungry," the model understands that "it" means "the cat."

- Determining the Most Significant Words: The self-attention mechanism assigns importance scores to words, helping the model decide which one matters most for understanding or generating a response.

- Transmitting Information Through Multiple Layers: Information passes through multiple layers, each refining the model’s grasp of meaning and context.

- Producing Output Incrementally: As the model creates text, it generates one word at a time, using all the words it has already produced to choose the next one. This ensures that the output is coherent and relevant to the context.
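The self-attention step described above can be sketched in a few lines of plain Python. This is a simplified illustration, not how any production model is implemented: it assumes the queries, keys, and values are all just the input vectors themselves, whereas a real transformer first multiplies the inputs by learned projection matrices. The function names and toy vectors are made up for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Assumption: learned projection matrices are omitted to keep the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score every position against the current one.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)  # the "importance scores"
        # Blend all value vectors according to those weights.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    toy_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # hypothetical word vectors
    _, weights = self_attention(toy_tokens)
    print(weights[0])  # positions 0 and 2 get more weight than position 1
```

Each row of weights sums to 1, so every output vector is a weighted blend of all the input vectors, which is what lets each word "look at" every other word at once.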

Diffusion models

Diffusion models are a kind of generative AI that creates images, videos, and audio by gradually changing random noise into realistic content. They learn to reverse the noise process step by step, improving details to produce lifelike results. Some well-known examples are DALL·E 2, Imagen, Stable Diffusion, and Sora, which are used for tasks like image generation, inpainting, and image-to-image translation.

How Diffusion Models Function:

- Begin with Authentic Images: The model learns by adding random noise to actual images until they become totally static, helping to understand how clear images turn into noise.

- Master the Reverse Technique: Next, it is trained to do the opposite, methodically removing noise. By practicing on many examples, the model learns to recover clear images from their noisy versions.

- Create from Random Noise: When creating new content, the model begins with random noise, not requiring a reference image, and starts to imagine from scratch.

- Step-by-Step Denoising: Through many stages, it refines the image, changing static into shapes, then textures, and finally detailed visuals, similar to watching a photo slowly emerge in a darkroom.
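The noising half of this process is simple enough to sketch directly. The snippet below is a minimal illustration, assuming the common formulation in which a noisy sample is a weighted mix of the clean data and Gaussian noise. The linear schedule values (`beta_start`, `beta_end`) and all function names are assumptions chosen for the example, and the "image" is just a short list of numbers. A real diffusion model would train a neural network to predict the noise; here the known noise is reused to show that removing it recovers the original.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal left after each step (linear beta schedule).

    Assumes num_steps >= 2.
    """
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward step: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
        for x, n in zip(x0, noise)
    ]
    return xt, noise


def recover_x0(xt, noise, alpha_bar):
    """Invert add_noise given the noise; a trained network would predict the noise."""
    return [
        (x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
        for x, n in zip(xt, noise)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    bars = alpha_bar_schedule(1000)
    pixels = [0.2, -0.5, 1.0]  # stand-in for a tiny "image"
    noisy, noise = add_noise(pixels, bars[500], rng)
    print(noisy)
    print(recover_x0(noisy, noise, bars[500]))  # close to the original pixels
```

By the last step of the schedule almost none of the original signal remains, which is why generation can start from pure random noise.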

Generative Adversarial Networks

Generative Adversarial Networks (GANs) are AI systems made to generate lifelike images. They include two neural networks: a generator that creates content and a discriminator that evaluates it, both of which compete against each other. This rivalry allows the model to produce impressively realistic results.

How GANs Function:

- A GAN is made up of two neural networks: a Creator (the generator) and an Evaluator (the discriminator). Think of it like an artist versus a detective; the artist creates forgeries, while the detective tries to find them.

- The Creator starts with random values (noise) and attempts to transform them into lifelike images. At first, the results are chaotic blobs, but it gradually learns and improves.

- The Evaluator compares real images to generated ones to see if they are genuine. As the Creator improves its abilities, the Evaluator finds it harder to spot fakes.

- Growth Through Rivalry: The Creator and Evaluator compete, with the Creator improving its realism and the Evaluator sharpening its judgment.

- In the end, the Creator becomes so skilled that its results look just like real images. At this point, the Creator can create photorealistic content on its own from the beginning.
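The rivalry between Creator and Evaluator is usually expressed as two loss functions that pull in opposite directions. The sketch below assumes the standard binary cross-entropy formulation and the commonly used "non-saturating" generator loss. The scores are hypothetical Evaluator outputs between 0 and 1 (exclusive), where 1 means "looks real"; no actual networks are trained here.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: real images should score near 1, generated ones near 0."""
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating generator loss: low when the Evaluator scores fakes near 1."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)


if __name__ == "__main__":
    # Early training: the Evaluator easily spots fakes (hypothetical scores).
    print(generator_loss([0.1, 0.2]), discriminator_loss([0.9, 0.8], [0.1, 0.2]))
    # Late training: the Evaluator can only guess, so every score is 0.5.
    print(generator_loss([0.5, 0.5]), discriminator_loss([0.5, 0.5], [0.5, 0.5]))
```

As the Creator improves, its loss falls while the Evaluator's loss rises toward the value it gets from pure guessing, which mirrors the "harder to spot fakes" stage described above.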

Variational Autoencoders

Variational Autoencoders (VAEs) are generative AI models that learn to encode data into a compact latent space and then decode it to generate new content. They are used for image and speech generation, data compression, and exploring latent representations. Key examples include VQ-VAE and Beta-VAE.

How it works:

- Input Encoding: The VAE takes an image and compresses it into a more compact form using an encoder.

- Creating a Possibility Cloud: Instead of just one vector, it produces a distribution, a ‘cloud’ of values, which adds controlled randomness for more flexibility.

- Sampling from the Cloud: A point is selected at random from this distribution and sent to the decoder, which aids the model in generalizing.

- Decoding and Reconstruction: The decoder reconstructs the original image from the sampled code, learning to turn vague ideas into clear outcomes.

- Generating New Content: After training, sampling from the latent space produces entirely new images that are like the original data but still unique.
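The "possibility cloud" and the sampling step can be sketched as follows. This is a minimal illustration that assumes the usual setup in which the encoder outputs a mean and a log-variance per latent dimension; the encoder and decoder networks themselves are left out, and the function names are made up for the example. The second function computes the KL divergence term that is commonly added to the VAE training loss to keep the cloud close to a standard normal distribution.

```python
import math
import random


def sample_latent(mean, log_var, rng):
    """Reparameterization trick: z = mean + std * eps, with eps drawn from N(0, 1)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mean, log_var)
    ]


def kl_to_standard_normal(mean, log_var):
    """KL divergence from N(mean, exp(log_var)) to N(0, 1), summed over dimensions."""
    return 0.5 * sum(
        math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mean, log_var)
    )


if __name__ == "__main__":
    rng = random.Random(42)
    mean, log_var = [0.5, -1.0], [0.0, -2.0]  # hypothetical encoder outputs
    print(sample_latent(mean, log_var, rng))  # a random point from the cloud
    print(kl_to_standard_normal(mean, log_var))
```

Sampling this way keeps the randomness in a separate noise term, which is what allows gradients to flow back to the encoder during training.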

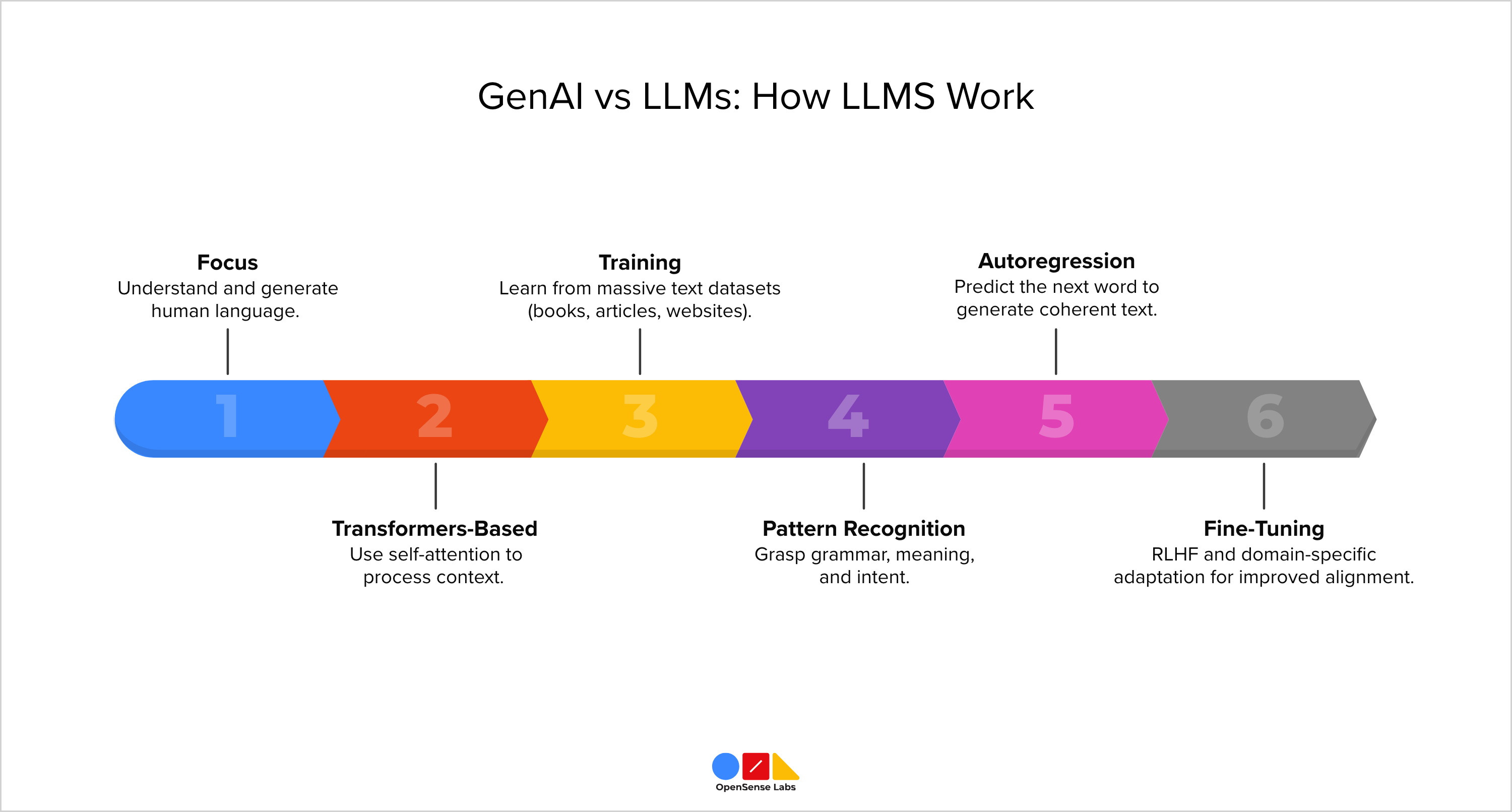

GenAI vs LLM: How LLMs Work

While GenAI can generate any type of content, LLMs focus exclusively on language understanding and generation.

Large Language Models (LLMs) are advanced AI systems designed to understand and generate text that mimics human writing. They are mainly built on Transformer architectures and include model families such as GPT (OpenAI), Claude (Anthropic), Gemini (Google), and LLaMA (Meta). Their strength comes from processing large volumes of text data to perform tasks like writing, summarizing, translating, and reasoning.

Because they are built on Transformers, LLMs use self-attention to handle context, but they apply it solely to comprehending and producing human language.

How LLMs Function:

- Data Gathering and Tokenization: Large Language Models (LLMs) are trained using extensive text datasets gathered from books, articles, websites, and forums. This text is broken down into smaller parts called tokens (which can be words or sub-words) that the model can process numerically.

- Understanding Language Patterns: While training, the model learns how words relate to each other, covering context, grammar, meaning, and intent. Using self-attention, it determines which words are important for correctly understanding or predicting the next word.

- Accumulating Knowledge Through Parameters: Learned patterns are stored in the model's parameters. Larger models (GPT-4 is reported, though not officially confirmed, to have more than a trillion parameters) generally show stronger understanding and reasoning skills.

- Anticipating the Next Token: When creating text, the model predicts the next token based on all preceding ones, one token at a time, to maintain coherence and context. This technique, known as autoregression, enables it to construct complete, meaningful sentences.

- Fine-Tuning and Ongoing Adaptation: After pretraining, LLMs are fine-tuned using Reinforcement Learning from Human Feedback (RLHF) to enhance alignment, and they are subsequently tailored for particular fields such as coding or healthcare.
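Tokenization and autoregressive generation can be illustrated with a deliberately tiny stand-in for an LLM. The sketch below swaps the transformer for a simple bigram counter, so it only looks at the single previous token, whereas a real LLM conditions on all preceding tokens. The whitespace tokenizer is also an assumption for the example; real models use sub-word tokenizers.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Whitespace tokenizer; real LLMs use sub-word schemes instead."""
    return text.lower().split()


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, max_tokens):
    """Greedy autoregression: repeatedly append the most frequent next token."""
    output = [start]
    for _ in range(max_tokens):
        candidates = counts.get(output[-1])
        if not candidates:
            break  # no known continuation for this token
        output.append(candidates.most_common(1)[0][0])
    return output


if __name__ == "__main__":
    corpus = "The cat sat on the mat and the cat sat on the rug"
    model = train_bigram(tokenize(corpus))
    print(" ".join(generate(model, "the", 4)))  # prints "the cat sat on the"
```

The loop in `generate` is the autoregressive part: each new token is chosen from what has been produced so far and is then fed back in as context for the next choice.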

Integrating generative AI and LLMs into your workflow

If you have made it this far, you already have a good grasp of the basics. Now you may be curious about how to effectively integrate AI into your job or everyday life.

Here are some actionable steps to begin:

- Create captivating content: Platforms like ChatGPT or Claude can help brainstorm ideas, draft blogs, or outline thoughts. Tools like Obsidian or Pieces plugins maintain long-term context while you write.

- Use developer tools smartly: Tools for generating code, such as GitHub Copilot and Pieces, work with VS Code or Chrome to speed up your workflow.

- Generate visuals efficiently: Tools such as DALL·E, Midjourney, and Canva allow you to create or enhance images for presentations or social media posts.

- Build interactive chatbots: APIs from OpenAI or Anthropic let you embed AI assistants into apps or sites.

Hopefully, you now feel inspired to incorporate AI into your workflow. In truth, whatever you need help with regarding AI, there’s probably a solution out there for you (and if there isn’t, you can always make it yourself).

Successful Examples of GenAI and LLM Applications

Generative AI tools can create diverse media such as videos, images, and audio, while LLMs specialize in text, making them ideal for chatbots, assistants, and writing tools.

Based on your project requirements, you can use them separately or merge their advantages for wider uses.

Now, let's continue with our understanding!

GenAI vs LLM: Why Do Multi-Agent LLM Systems Fail?

As LLMs are combined into multi-agent systems, they face new coordination and reliability challenges.

The MAST taxonomy (Multi-Agent System Failure Taxonomy) was created by manually annotating more than 200 execution traces, with each trace averaging over 15,000 tokens.

This analysis identified 14 failure modes grouped into three main categories:

- Specification Issues (41.8%): Poor prompt design, missing constraints, or weak termination criteria.

- Inter-Agent Misalignment (36.9%): Misunderstandings, differing beliefs, or lack of context.

- Task Verification Failures (21.3%): Insufficient validation or hasty conclusions.

These overlapping issues highlight the need for structured diagnostics instead of ad-hoc inspections.
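As a rough idea of what structured diagnostics can look like in practice, the sketch below tallies annotated failures by category and reports each category's share. The labels in the example are hypothetical and are not the MAST data; the function name is also made up for illustration.

```python
from collections import Counter


def category_shares(labels):
    """Return each category's share of all annotated failures, in percent."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        category: round(100.0 * n / total, 1)
        for category, n in counts.items()
    }


if __name__ == "__main__":
    # Hypothetical annotations, one label per observed failure.
    annotations = (
        ["specification"] * 5 + ["misalignment"] * 3 + ["verification"] * 2
    )
    print(category_shares(annotations))
```

Keeping a labeled tally like this makes it easier to see which category dominates before deciding where to invest in fixes.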

Key Takeaways

- GenAI vs LLMs: LLMs are a subset of GenAI focused on text, while GenAI covers a wider range, generating images, videos, audio, and more.

- LLMs are advanced deep learning systems that leverage transformer-based neural networks and are trained on vast text datasets.

- As LLMs evolve into multi-agent systems, the MAST taxonomy reveals 14 failure modes across design, alignment, and verification, emphasizing the need for structured diagnostics over ad-hoc fixes.

- Generative AI (GenAI) is a type of AI that can produce new content by identifying and learning from patterns in the data it was trained on.

- GenAI drives ChatGPT, DALL·E, Sora, and RunwayML, transforming how we approach creativity, automation, and problem-solving.
